@faun ・ May 09, 2024

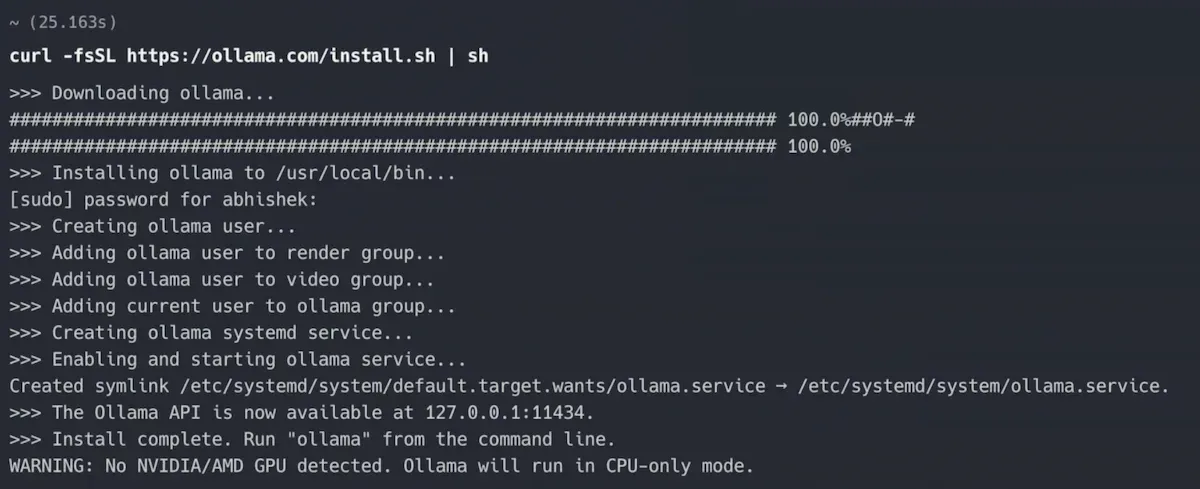

By following the provided tutorial, users can set up and run a range of open-source LLMs on their own system. The tool combines model configuration, dataset control, and model file management in a single package.
