Importing packages in Python Notebook.

Congratulations on completing your Part 1 series of this journey! What? You didn’t go through Part 1 yet? Worry not, Here’s the link, Quickly go through to install necessary packages to proceed through this article, it will take not more than 5 mins:

How to do Web Scraping with Python? | Part 1

Wow! You came back so early! :)

I know you were never gone :p

Anyways, Let’s start by importing packages in Python Notebook now!

Why use a notebook and not a plain python script?

Because it gives much flexibility over python script and you can observe your output anytime and anywhere!

I usually prefer this and It is my personal opinion, but you are free to use anything. It is pure python code and it will work everywhere!

This part contains:

Requesting and Beautifying HTML data over the internet from your Python Script.

Scrapping friendly website used: https://example.com

All notebooks are available on my GitHub repository! Check them out if you feel stuck anywhere! :)

GitHub - developergaurav-exe/web-scraping-in-python: Web Scraping in Python through BeautifulSoup…

More Scraping friendly sites are:

1. Obtain HTML data over the internet

Example.com website landing page:

Check if you don’t get any firewall issues or errors while running below statements. Disabling firewall can do the trick if your firewall blocked this request.

Quickly generating get request on the Scrapping friendly website which is example.com.

If your code ran smoothly, Congratulations! Let’s see what is stored in result! :)

This need to be beautified!

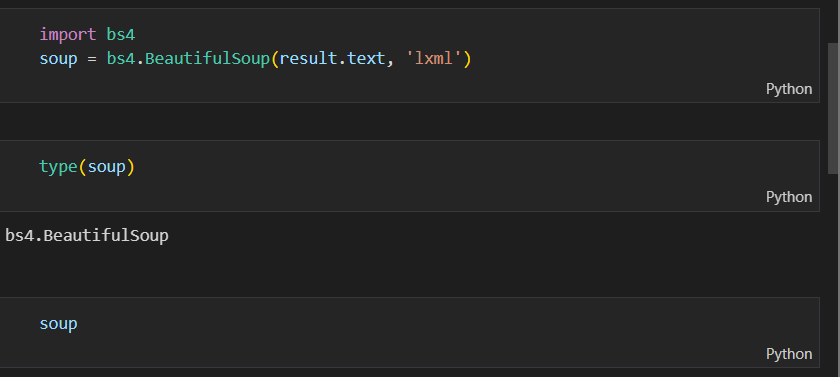

Our newly installed bs4 package a.k.a Beautiful Soup and lxml package will now come into the Picture!

Creating Soup with the obtained Ingredients

It will be yummy I guarantee you! :)

BeautifulSoup is a class in bs4 package and we are creating soup object of this class by providing it with two values, result.text (Remember this was our Ugly looking non readable HTML text).

Sorry ‘result.text’, but that was the truth, I cannot understand you.

So we provide it with lxml, a beautifying engine required to beautify HTML data. This is given as string while making soup object of BeautifulSoup class.

Now, this is what soup looks like!

Looks yummy, Right? :)

If you have performed everything till now, and it all worked fine, Then Congratulations! You can now successfully request and beautify HTML data over the internet from your Python Script!

Following up next with Grabbing Elements from HTML data, to scrape our required information:

How to do Web Scraping in Python? | Part 3 (Finale)

This part contains core Web Scraping, So don’t miss your chance to learn something new! :)

~Follow Harsh Gaurav for more Technical and Interestingly random content :)

Start blogging about your favorite technologies, reach more readers and earn rewards!

Join other developers and claim your FAUN account now!

Harsh Gaurav

@developergaurav-exeUser Popularity

27

Influence

3k

Total Hits

3

Posts

Only registered users can post comments. Please, login or signup.