Simple ways to give your S3 bucket a fresh start when it is filled with useless files.

It can be a bit difficult to empty an AWS S3 bucket; these are the easiest ways to do so (in my opinion).

AWS S3: Lifecycle Policy

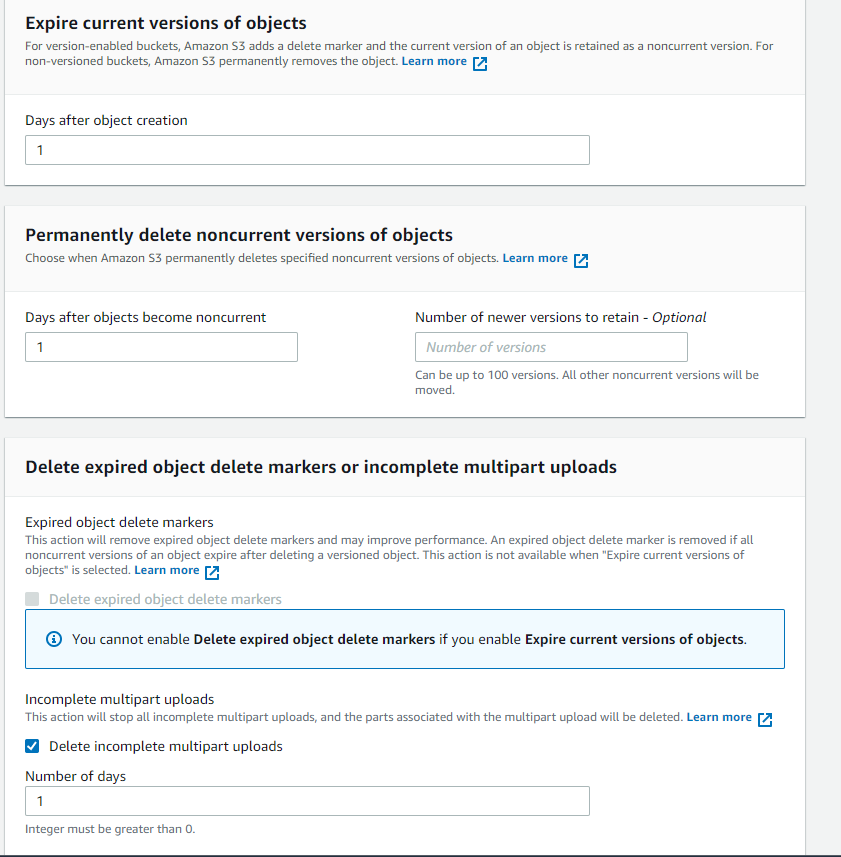

If you are a patient soul (unlike me), then I’d suggest you create a lifecycle policy like the following:

I tried it out, and it deleted all of my files within 24 hours (not the quickest method, though).
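A rule of this kind can also be applied programmatically. The sketch below assumes the boto3 SDK and uses a hypothetical rule ID (`expire-everything`). It expires all current objects after one day and, as an extra assumption beyond this post, also cleans up noncurrent versions and incomplete multipart uploads. Note that `put_bucket_lifecycle_configuration` replaces any lifecycle configuration already attached to the bucket.

```python
"""Sketch: expire every object in a bucket with an S3 lifecycle rule (assumes boto3)."""
from typing import Any, Dict


def build_expire_all_rule(days: int = 1) -> Dict[str, Any]:
    """Return a lifecycle configuration that expires all objects after `days` days."""
    if days < 1:
        raise ValueError("S3 expiration must be at least 1 day")
    return {
        "Rules": [
            {
                "ID": "expire-everything",  # hypothetical rule name
                "Filter": {"Prefix": ""},  # empty prefix matches every object
                "Status": "Enabled",
                "Expiration": {"Days": days},
                # Assumption: also remove old versions and unfinished uploads.
                "NoncurrentVersionExpiration": {"NoncurrentDays": days},
                "AbortIncompleteMultipartUpload": {"DaysAfterInitiation": days},
            }
        ]
    }


def apply_rule(s3_client: Any, bucket: str, days: int = 1) -> None:
    """Attach the rule to `bucket`, replacing any existing lifecycle configuration."""
    s3_client.put_bucket_lifecycle_configuration(
        Bucket=bucket,
        LifecycleConfiguration=build_expire_all_rule(days),
    )
```

Usage, assuming configured AWS credentials and a hypothetical bucket name: `apply_rule(boto3.client("s3"), "my-bucket")`. S3 applies lifecycle actions asynchronously, so objects can linger for a while after they become eligible for expiration.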

AWS Lambda

Create an AWS Lambda function with the following Python code, which iterates through the AWS S3 bucket and its folders and deletes their contents:
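A minimal sketch of such a function, assuming boto3 (included in the Python Lambda runtimes) and a hypothetical event shape of `{"bucket": "my-bucket"}`. It pages through `list_objects_v2` and removes keys in batches with `delete_objects`, which accepts up to 1,000 keys per request. S3 "folders" are only key prefixes, so listing the whole bucket covers them. On a versioned bucket this only adds delete markers; older versions would also need `list_object_versions`.

```python
"""Sketch: Lambda handler that empties an S3 bucket (assumes boto3)."""
from typing import Any, Dict, Iterable, Iterator, List

BATCH_SIZE = 1000  # delete_objects accepts at most 1,000 keys per request


def chunked(items: Iterable[Any], size: int = BATCH_SIZE) -> Iterator[List[Any]]:
    """Group an iterable into lists of at most `size` items."""
    batch: List[Any] = []
    for item in items:
        batch.append(item)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch


def iter_keys(s3_client: Any, bucket: str) -> Iterator[str]:
    """Yield every key in the bucket; "folders" are just shared key prefixes."""
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket):
        for obj in page.get("Contents", []):
            yield obj["Key"]


def empty_bucket(s3_client: Any, bucket: str) -> int:
    """Delete all current objects in `bucket` and return how many were removed."""
    deleted = 0
    for batch in chunked(iter_keys(s3_client, bucket)):
        response = s3_client.delete_objects(
            Bucket=bucket,
            Delete={"Objects": [{"Key": key} for key in batch], "Quiet": True},
        )
        # In quiet mode the response lists only the keys that failed to delete.
        deleted += len(batch) - len(response.get("Errors", []))
    return deleted


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    import boto3  # imported here so the helpers above run without boto3 installed

    bucket = event["bucket"]  # hypothetical event shape: {"bucket": "my-bucket"}
    return {"bucket": bucket, "deleted": empty_bucket(boto3.client("s3"), bucket)}
```

For very large buckets, keep in mind that a single Lambda invocation is capped at 15 minutes, so the function may need to be re-run until the count comes back as zero.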

Note: You must add the following permissions to your AWS Lambda execution role.
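As an assumption about what is required, a function that only lists and deletes current objects needs roughly `s3:ListBucket` on the bucket itself and `s3:DeleteObject` on the objects inside it. The sketch below builds that IAM policy document in Python for a hypothetical bucket name, so it can be serialized to JSON and attached to the execution role.

```python
"""Sketch: minimal IAM policy for listing and deleting objects in one bucket."""
import json
from typing import Any, Dict


def empty_bucket_policy(bucket: str) -> Dict[str, Any]:
    """Return a policy document scoped to `bucket` (name is hypothetical)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # ListObjectsV2 is authorized by s3:ListBucket on the bucket ARN.
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                # DeleteObjects is authorized by s3:DeleteObject on the object ARNs.
                "Effect": "Allow",
                "Action": ["s3:DeleteObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
        ],
    }


def policy_json(bucket: str) -> str:
    """Serialize the policy so it can be pasted into the IAM console."""
    return json.dumps(empty_bucket_policy(bucket), indent=2)
```

Note the two different resource ARNs: the listing permission applies to the bucket, while the delete permission applies to the objects under `/*`.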

AWS CLI

The following command is probably the easiest method of all the options above:
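Assuming the command meant here is the common `aws s3 rm s3://my-bucket --recursive` (bucket name hypothetical), the sketch below wraps it in Python with `subprocess`. It defaults to `--dryrun`, a real `aws s3 rm` flag that prints what would be deleted without deleting anything. The AWS CLI must be installed and configured for the command to actually run.

```python
"""Sketch: wrap `aws s3 rm --recursive` from Python (bucket name is hypothetical)."""
import subprocess
from typing import List


def build_rm_command(bucket: str, dry_run: bool = True) -> List[str]:
    """Build the argv for `aws s3 rm s3://<bucket> --recursive`."""
    if not bucket or "/" in bucket:
        raise ValueError("expected a bare bucket name, e.g. 'my-bucket'")
    cmd = ["aws", "s3", "rm", f"s3://{bucket}", "--recursive"]
    if dry_run:
        cmd.append("--dryrun")  # list what would be deleted without deleting it
    return cmd


def empty_bucket_cli(bucket: str, dry_run: bool = True) -> int:
    """Run the command and return the CLI's exit code."""
    return subprocess.run(build_rm_command(bucket, dry_run), check=False).returncode
```

Running it once with the default dry run and then again with `dry_run=False` is a cheap way to double-check that you are emptying the right bucket.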

Conclusion

Simple but effective ways to give your AWS S3 buckets a fresh start, especially if you’re like me and leave the clutter for way too long.

Hope this was helpful. If you have other recommendations, please leave a comment.

As always, thank you and gracias!!!