In Part 1 of this Argo Rollouts series, we saw what Progressive Delivery is and how you can achieve the Blue-Green deployment type using Argo Rollouts. We also deployed a sample app in a Kubernetes cluster using it. If you haven’t read the first part of this Progressive Delivery blog series yet, do read it first.

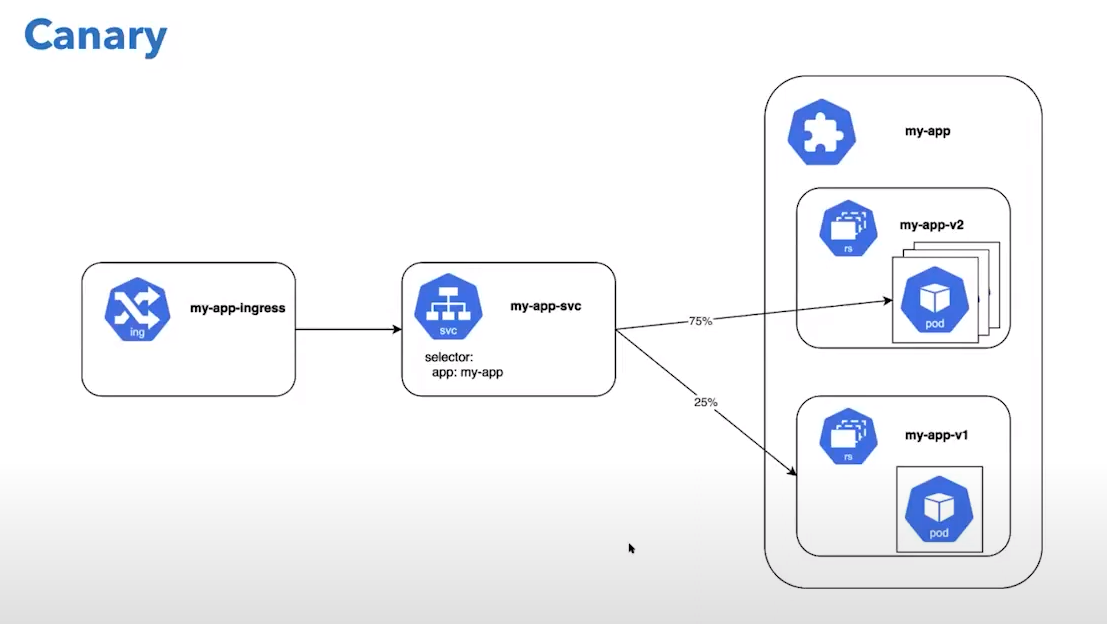

In this hands-on article, we will explore the canary deployment strategy and how you can achieve it using Argo Rollouts. But before that, let’s first understand what canary deployment is and why it is needed.

What is Canary Deployment?

As stated rightly by Danilo Sato in this CanaryRelease article,

“Canary release is a technique to reduce the risk of introducing a new software version in production by slowly rolling out the change to a small subset of users before rolling it out to the entire infrastructure and making it available to everybody.”

Canary is one of the most popular and widely adopted techniques of progressive delivery. Do you know why we call it canary and not anything else? The term “canary deployment” comes from an old coal mining technique. Mines often contained carbon monoxide and other dangerous gases that could kill the miners. Canary birds are more sensitive to airborne toxins than humans, so miners used them as early detectors.

A canary deployment takes a similar approach. Instead of putting all end users at risk, as in an old big-bang deployment, we first release the new version of the application to a very small percentage of users. We then analyze how it behaves and, if everything works as expected, gradually release it to a larger audience in increments.
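To make the idea concrete, here is a minimal conceptual sketch in Python of the loop described above: shift traffic in steps, check the canary at each step, and stop if it looks unhealthy. This is not how Argo Rollouts is implemented; the function name `canary_rollout`, the `error_rate_of` callback, and the 5% threshold are all hypothetical and exist only for illustration.

```python
def canary_rollout(steps, error_rate_of, max_error_rate=0.05):
    """Walk through traffic weights and abort if the canary looks unhealthy.

    steps          -- increasing percentages of traffic sent to the new version
    error_rate_of  -- hypothetical callback returning the canary's observed
                      error rate (0.0 to 1.0) at a given traffic weight
    max_error_rate -- assumed threshold above which we roll back
    """
    for weight in steps:
        observed = error_rate_of(weight)
        if observed > max_error_rate:
            # Only `weight` percent of users were ever exposed to the bad version.
            return ("rolled_back", weight)
    # Every step passed its check, so the new version takes all traffic.
    return ("promoted", steps[-1])


# Hypothetical canaries: one healthy, one that degrades under heavier load.
healthy = lambda weight: 0.01
degrades_under_load = lambda weight: 0.20 if weight >= 50 else 0.01

healthy_result = canary_rollout([5, 25, 50, 100], healthy)
faulty_result = canary_rollout([5, 25, 50, 100], degrades_under_load)
print(healthy_result)
print(faulty_result)
```

In the second run the rollout stops at the 50% step, so half of the users never see the faulty version. That containment is the whole point of releasing incrementally.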