This article was written as part of the Software Engineering Project course at the University of Indonesia. The information here may be incomplete and should not be your only guideline.

Building applications has always been a hassle. You’re happy that your application runs, all of your unit tests pass, and all of your code compiles, only to hear that other guy complaining that your application doesn’t run on his laptop. Everybody argues that none of it is their fault. Better yet, your Project Manager complains that time is running out for you to figure out why it doesn’t work when, theoretically, it should.

There are many checklists and things to consider: library versions, application versions, OS versions, kernel versions. Look closely and you’ll see that almost every frustration in building an application comes down to versioning. Older technologies exist to tackle this, the most archaic being simply bringing your laptop to your colleague. Then you keep hearing about these Docker containers that are supposed to turn your life around 180 degrees. Well, what are they?

Docker, the Savior of Our Deadlines

Docker provides the ability to package and run an application in a loosely isolated environment called a container. The isolation and security allow you to run many containers simultaneously on a given host. Containers are lightweight and contain everything needed to run the application, so you do not need to rely on what is currently installed on the host. You can easily share containers while you work, and be sure that everyone you share with gets the same container that works in the same way.

Docker provides tooling and a platform to manage the lifecycle of your containers:

- Develop your application and its supporting components using containers.

- The container becomes the unit for distributing and testing your application.

- When you’re ready, deploy your application into your production environment, as a container or an orchestrated service. This works the same everywhere.

Well, why use this rather than a Virtual Machine (VM)?

Admittedly, by the time we got tired of carrying our laptops to our colleagues, VMs were already mature enough to help us develop our applications. A virtual machine works exactly as its name suggests: it creates a separate, individual machine on top of your laptop. It behaves exactly as your laptop would and can run any application its OS would normally run. But there’s a difference.

VMs rely on a piece of software called a hypervisor, which lets multiple operating systems share your computer’s hardware concurrently. Your computer’s resources are virtually divided to run several computers at once, and each of them runs a complete OS: its own kernel, its own file systems, and its own process management. Booting and running all of those extra kernels and OS services costs a lot of memory, disk space, and startup time.

Docker uses a newer approach called containers. To explain containers, however, we must first understand the technology underneath them: namespaces and cgroups.

Namespaces, the Shadow Behind Docker

Namespaces are a feature of the Linux kernel that partitions kernel resources such that one set of processes sees one set of resources while another set of processes sees a different set of resources.

In other words, the key feature of namespaces is that they isolate processes from each other. On a server running many different services, isolating each service and its associated processes from the others means a smaller blast radius for changes, as well as a smaller footprint for security-related concerns. Mostly, though, isolated services are the hallmark of the microservices architectural style described by Martin Fowler.

Docker Engine uses the following namespaces on Linux:

- A user namespace has its own set of user IDs and group IDs for assignment to processes. In particular, this means that a process can have root privilege within its user namespace without having it in other user namespaces.

- A process ID (PID) namespace assigns a set of PIDs to processes that are independent from the set of PIDs in other namespaces. The first process created in a new namespace has PID 1 and child processes are assigned subsequent PIDs. If a child process is created with its own PID namespace, it has PID 1 in that namespace as well as its PID in the parent process’ namespace.

- A network namespace has an independent network stack: its own private routing table, set of IP addresses, socket listing, connection tracking table, firewall, and other network‑related resources.

- A mount namespace has an independent list of mount points seen by the processes in the namespace. This means that you can mount and unmount filesystems in a mount namespace without affecting the host filesystem.

- An interprocess communication (IPC) namespace has its own IPC resources, for example POSIX message queues.

- A UNIX Time‑Sharing (UTS) namespace allows a single system to appear to have different host and domain names to different processes.
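Every Linux process belongs to exactly one namespace of each type, and the kernel exposes that membership as symbolic links under /proc/<pid>/ns. The sketch below assumes a Linux machine with a POSIX shell; the helper name show_namespaces is made up for this example. It prints the namespace identifiers of the current shell, and two processes share a namespace exactly when their identifiers match.

```shell
#!/bin/sh
# Print the namespace identifiers of the current shell (Linux only).
# show_namespaces is a hypothetical helper name used for illustration.
show_namespaces() {
  # The six namespace types that Docker Engine uses, as listed above.
  for ns in user pid net mnt ipc uts; do
    # Each entry is a symlink whose target looks like "pid:[4026531836]".
    printf '%s %s\n' "$ns" "$(readlink "/proc/$$/ns/$ns")"
  done
}

show_namespaces
```

On an ordinary host, most processes print the same identifiers as your shell, because they all live in the kernel’s initial namespaces.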

Cgroups, the Manager

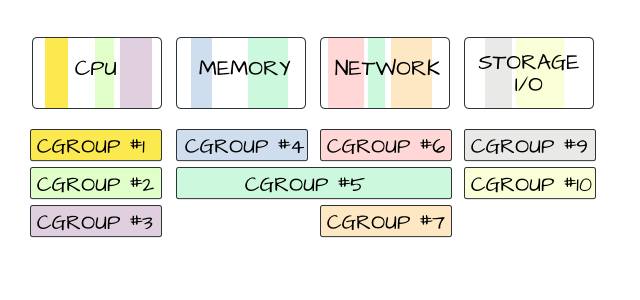

Docker also makes use of kernel control groups (cgroups) for resource allocation and isolation. A cgroup limits an application to a specific set of resources. Control groups allow Docker Engine to share available hardware resources among containers and optionally enforce limits and constraints, such as capping the memory a specific container may use.
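Cgroups are visible from userspace too. As a minimal sketch (Linux only; the helper name show_cgroups is hypothetical), the following reports whether the host uses the unified cgroup v2 hierarchy, detected here by the presence of /sys/fs/cgroup/cgroup.controllers, and prints the cgroup membership of the current shell from /proc/<pid>/cgroup.

```shell
#!/bin/sh
# Show which control group(s) the current shell belongs to (Linux only).
# show_cgroups is a hypothetical helper name used for illustration.
show_cgroups() {
  # A unified cgroup v2 hierarchy exposes cgroup.controllers at its root.
  if [ -f /sys/fs/cgroup/cgroup.controllers ]; then
    echo "hierarchy: cgroup v2"
  else
    echo "hierarchy: cgroup v1 (or hybrid)"
  fi
  # One line per hierarchy, formatted "hierarchy-ID:controllers:path".
  # On cgroup v2 this is a single line such as "0::/user.slice/...".
  cat "/proc/$$/cgroup"
}

show_cgroups
```

Docker turns flags such as --memory into exactly these cgroup settings. On a cgroup v2 host, docker run --rm --memory=256m alpine cat /sys/fs/cgroup/memory.max should print 268435456, which is 256 MiB in bytes; on cgroup v1 the corresponding file is /sys/fs/cgroup/memory/memory.limit_in_bytes.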

As such, the notion that Docker “virtualizes the OS” is misleading. No guest OS is virtualized. Rather, every container on your computer is just another process (or group of processes) running in its own set of namespaces. Every syscall goes straight to the host’s kernel, just as it would from a typical application, and resources are allocated just as they would be for any other process, within the limits set by its cgroups. All of this is set up by the container engine that Docker uses (containerd and runc under the hood), which creates the namespaces and cgroups for every container started from a Docker image.
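One way to check the “just another process” claim is to compare a container’s namespaces with your own. The sketch below (Linux only; compare_ns is a hypothetical helper, not a Docker command) reads the /proc/<pid>/ns links of two processes and reports, per namespace type, whether they are shared. It prints “unreadable” when a link cannot be read, which usually means the process does not exist or belongs to another user.

```shell
#!/bin/sh
# Report which namespaces two processes share (Linux only).
# compare_ns is a hypothetical helper name used for illustration.
compare_ns() {
  pid_a=$1
  pid_b=$2
  for ns in user pid net mnt ipc uts; do
    # Reading another user's /proc/<pid>/ns entries usually needs root.
    ns_a=$(readlink "/proc/$pid_a/ns/$ns" 2>/dev/null)
    ns_b=$(readlink "/proc/$pid_b/ns/$ns" 2>/dev/null)
    if [ -z "$ns_a" ] || [ -z "$ns_b" ]; then
      echo "$ns: unreadable"
    elif [ "$ns_a" = "$ns_b" ]; then
      echo "$ns: shared"
    else
      echo "$ns: separate"
    fi
  done
}

# Sanity check: a process always shares every namespace with itself.
compare_ns $$ $$
```

To try it against a container, start one with docker run -d --name demo alpine sleep 300 (demo is a hypothetical name), find its host PID with docker inspect --format '{{.State.Pid}}' demo, and call compare_ns with your shell’s PID and that PID from a root shell, since the container’s process belongs to root. The pid, net, mnt, ipc and uts namespaces should come out separate, while the user namespace stays shared unless user-namespace remapping is enabled in the Docker daemon. Likewise, on a Linux host, uname -r and docker run --rm alpine uname -r should print the same kernel release, because the container never boots a kernel of its own.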

Well, sounds great! How do I sign up?

TL;DR Docker

Docker makes our lives easier: fewer complaints from the Project Manager, less arguing with our colleagues, and it’s useful all around. Without further ado, here is how you would use it.

A Dockerfile is a file that contains all the instructions needed to build an image. In a nutshell, it lists every command you would run to set up a Linux environment. Building a Dockerfile produces an image, and running that image starts a container. Several containers can then be defined and started together with Docker Compose, a tool configured through a YAML file, which also places them on a shared network so they can communicate with each other.

With this, you can create microservices that will make your lecturer proud!

Dockerfile, our First Step!

This is the Dockerfile from one of my class projects. It is split into two stages, and the steps go like this:

- In the first stage, I used Gradle 6.9.0 as the base image for the build process.

- I copied the files from the host machine into the container, then changed their ownership to an unprivileged user inside the container.

- From there, it’s gradle clean assemble as usual!

- In the second stage, I used a smaller base image: OpenJDK 8 on the Alpine distro.

- After that, I copied only the .jar from the first stage into the second stage.

- Lastly, I specified the command (CMD) that the container runs automatically when the image is started.
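The two stages above can be sketched as a Dockerfile. The shell snippet below writes one to the current directory; it is a reconstruction from the description, not the author’s actual file. The image tags gradle:6.9.0-jdk8 and openjdk:8-jdk-alpine, the /home/gradle/src working directory, the gradle user in --chown, and the /app/app.jar path are all assumptions.

```shell
#!/bin/sh
# Write a two-stage Dockerfile matching the steps described above.
# Image tags, paths, and the jar name are assumptions for illustration,
# not the author's actual file.
cat > Dockerfile <<'EOF'
# Stage 1: build the application with Gradle 6.9.0 (JDK 8 variant assumed).
FROM gradle:6.9.0-jdk8 AS build
# Copy the sources and hand them to the image's unprivileged "gradle" user.
COPY --chown=gradle:gradle . /home/gradle/src
WORKDIR /home/gradle/src
RUN gradle clean assemble --no-daemon

# Stage 2: a smaller runtime image based on OpenJDK 8 on Alpine.
FROM openjdk:8-jdk-alpine
# Copy only the built jar (assumes assemble produces exactly one jar).
COPY --from=build /home/gradle/src/build/libs/*.jar /app/app.jar
# Command the container runs automatically when started.
CMD ["java", "-jar", "/app/app.jar"]
EOF
```

Because only the second stage becomes the final image, the Gradle toolchain and the source code stay behind in the build stage, which is what keeps the image small. The name given with AS build is what lets COPY --from=build refer to the first stage.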

We then build the image with docker build -t app_name . (the trailing dot sets the build context to the current directory) and start the application with docker run app_name.

Getting Spicy, It’s Docker Compose!

I mentioned that containers can communicate with each other via Docker Compose. If we take a look at the compose file above, we can see that there are four containers run by Docker:

- backend-api, which is built from our Dockerfile from before.

- MySQL

- Redis

- MongoDB

It turns out that backend-api depends on those three services! If you look at the hosts backend-api connects to, you’ll see that it reaches them through the hostnames mysql, mongodb, and redis, which are simply the names of the other services in the compose file.
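A compose file for the four containers above might look like the following sketch, again written out by a shell snippet. The service names match the hostnames mysql, mongodb, and redis, but the image tags, the 8080 port mapping, and the MySQL credentials are placeholders chosen for illustration, not the project’s real configuration.

```shell
#!/bin/sh
# Write a docker-compose.yml with the four services described above.
# Image tags, the port, and the MySQL credentials are placeholders
# chosen for illustration, not the author's actual configuration.
cat > docker-compose.yml <<'EOF'
services:
  backend-api:
    build: .            # built from the Dockerfile in this directory
    ports:
      - "8080:8080"     # assumed application port
    depends_on:
      - mysql
      - mongodb
      - redis
  mysql:
    image: mysql:8.0
    environment:
      MYSQL_ROOT_PASSWORD: example   # placeholder, do not reuse
      MYSQL_DATABASE: app
  mongodb:
    image: mongo:4.4
  redis:
    image: redis:6
EOF
```

Compose attaches all services to one default network and makes each service name resolvable as a hostname on it, so backend-api can reach MySQL at mysql:3306, MongoDB at mongodb:27017, and Redis at redis:6379, the default ports of those images. Running docker compose up --build (or docker-compose up --build with the older standalone tool) builds the backend image and starts everything. Note that depends_on only controls start order; it does not wait until MySQL is ready to accept connections.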

All of these are managed by the container engine we described earlier. The engine gives each container its own namespaces, including a process namespace and a network namespace, and uses cgroups to control how much of the host’s resources each one can consume.

Docker: it’s magical, it’s wonderful, but it’s weird. In a sense, it feels like running an application inside an application, yet each container is really just an isolated process on the host. No hardware or kernel is ever virtualized for a container, which really blows your mind when everybody says otherwise.

Now, you can be good friends with your colleagues and project managers because you use Docker.
