We Needed Better Cloud Storage for Python so We Built Obstore

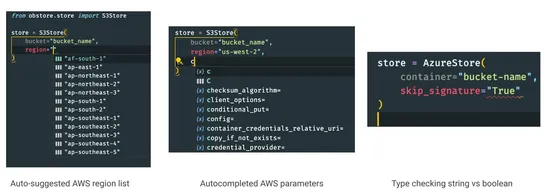

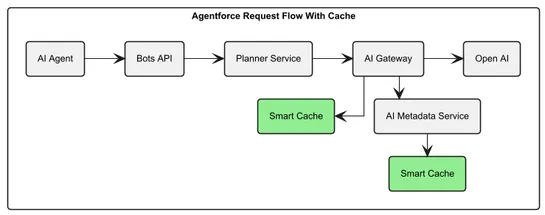

Obstore is a new stateless object store that skips fsspec-style caching and keeps its API tight and predictable across S3, GCS, and Azure. Sync and async both work. Under the hood? Fast, zero-copy Rust–Python interop. And on small concurrent async GETs, it reportedly crushes S3FS with up to 9x better ...
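To make "small concurrent async GETs" concrete, here is a minimal, standard-library-only sketch of that access pattern: many tiny reads issued at once, with a cap on how many are in flight. The `InMemoryStore` class, `fetch_many` helper, and `max_in_flight` parameter are hypothetical stand-ins invented for illustration; this is not Obstore's API, and it says nothing about Obstore's or S3FS's real performance.

```python
import asyncio


class InMemoryStore:
    """Hypothetical stand-in for a remote object store (NOT Obstore's API)."""

    def __init__(self, objects):
        # Copy the mapping of key -> bytes so the caller's dict is not shared.
        self._objects = dict(objects)

    async def get(self, key):
        # Yield to the event loop once to mimic a network round trip.
        await asyncio.sleep(0)
        return self._objects[key]


async def fetch_many(store, keys, max_in_flight=32):
    """Fetch many small objects concurrently, capping in-flight requests."""
    semaphore = asyncio.Semaphore(max_in_flight)

    async def fetch_one(key):
        async with semaphore:
            return await store.get(key)

    # asyncio.gather returns results in the same order as the input keys.
    return await asyncio.gather(*(fetch_one(key) for key in keys))


def demo():
    # 100 tiny objects, the kind of workload the benchmark claim refers to.
    objects = {f"chunk-{i}": f"payload-{i}".encode() for i in range(100)}
    store = InMemoryStore(objects)
    return asyncio.run(fetch_many(store, list(objects)))


if __name__ == "__main__":
    results = demo()
    print(len(results), results[0])
```

With a real store, `store.get` would be replaced by the library's own async read call. For small objects, per-request overhead tends to dominate over transfer time, which is why this pattern is a common benchmark target.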