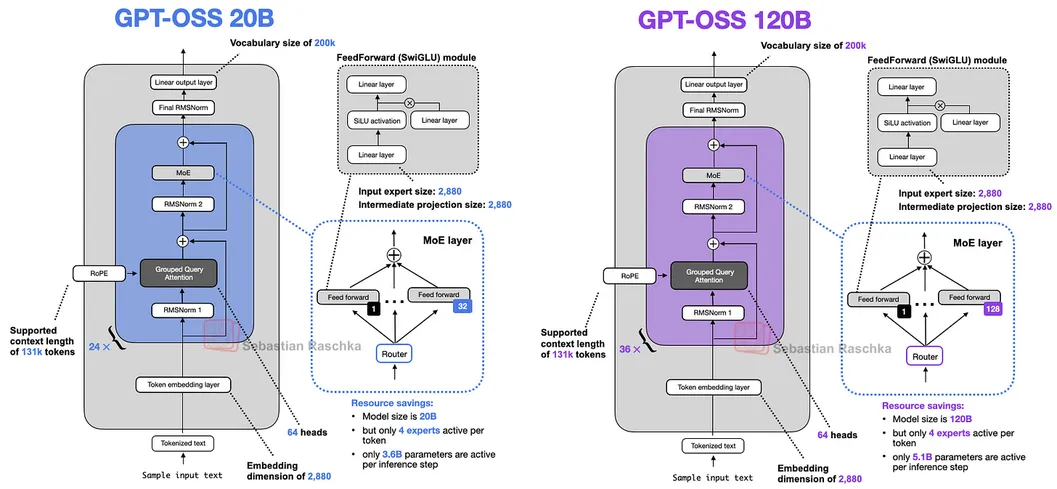

OpenAI Returns to Openness. The company released gpt-oss-20B and gpt-oss-120B, its first open-weight LLMs since GPT-2. The models pack a modern stack: Mixture-of-Experts, Grouped Query Attention, Sliding Window Attention, and SwiGLU.

They're also lean. Thanks to MXFP4 quantization, gpt-oss-20B runs on a consumer GPU with 16GB of memory, and gpt-oss-120B fits on a single H100 or MI300X.
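To see why 4-bit weights make those hardware targets plausible, here is a rough back-of-the-envelope sketch. It assumes the MXFP4 layout from the OCP Microscaling format (4-bit elements sharing one 8-bit scale per block of 32, so about 4.25 bits per weight) and, as a simplification, treats every parameter as MXFP4 using the nominal 20B and 120B counts from the model names. Real checkpoints keep some tensors in higher precision and also need memory for activations and the KV cache, so read these as approximate weight-only figures, not measured footprints.

```python
# Rough weight-memory estimate under MXFP4 vs. BF16.
# Assumptions (not from the article): nominal parameter counts taken from the
# model names, and every weight stored in MXFP4. Actual checkpoints differ.

MXFP4_ELEMENT_BITS = 4   # one FP4 (E2M1) value per weight
MXFP4_SCALE_BITS = 8     # one shared 8-bit scale per block
MXFP4_BLOCK_SIZE = 32    # weights per block sharing that scale


def bits_per_param_mxfp4() -> float:
    """Effective bits per weight, including the amortized block scale."""
    return MXFP4_ELEMENT_BITS + MXFP4_SCALE_BITS / MXFP4_BLOCK_SIZE


def weight_gb(num_params: float, bits_per_param: float) -> float:
    """Weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Hypothetical round numbers; the exact parameter counts are not given here.
NOMINAL_PARAMS = {"gpt-oss-20B": 20e9, "gpt-oss-120B": 120e9}

if __name__ == "__main__":
    for name, n in NOMINAL_PARAMS.items():
        mxfp4 = weight_gb(n, bits_per_param_mxfp4())
        bf16 = weight_gb(n, 16)
        print(f"{name}: ~{mxfp4:.1f} GB in MXFP4 vs ~{bf16:.0f} GB in BF16")
```

Under these assumptions the 20B weights come to roughly 10.6 GB and the 120B weights to roughly 64 GB, which leaves headroom on a 16GB card and an 80GB H100 respectively. The same weights in BF16 would need about 40 GB and 240 GB.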

Big picture: OpenAI’s finally shipping LLMs meant to run locally. It’s a move toward open weights, smaller stacks, and heavier bets on decentralized AI. About time.