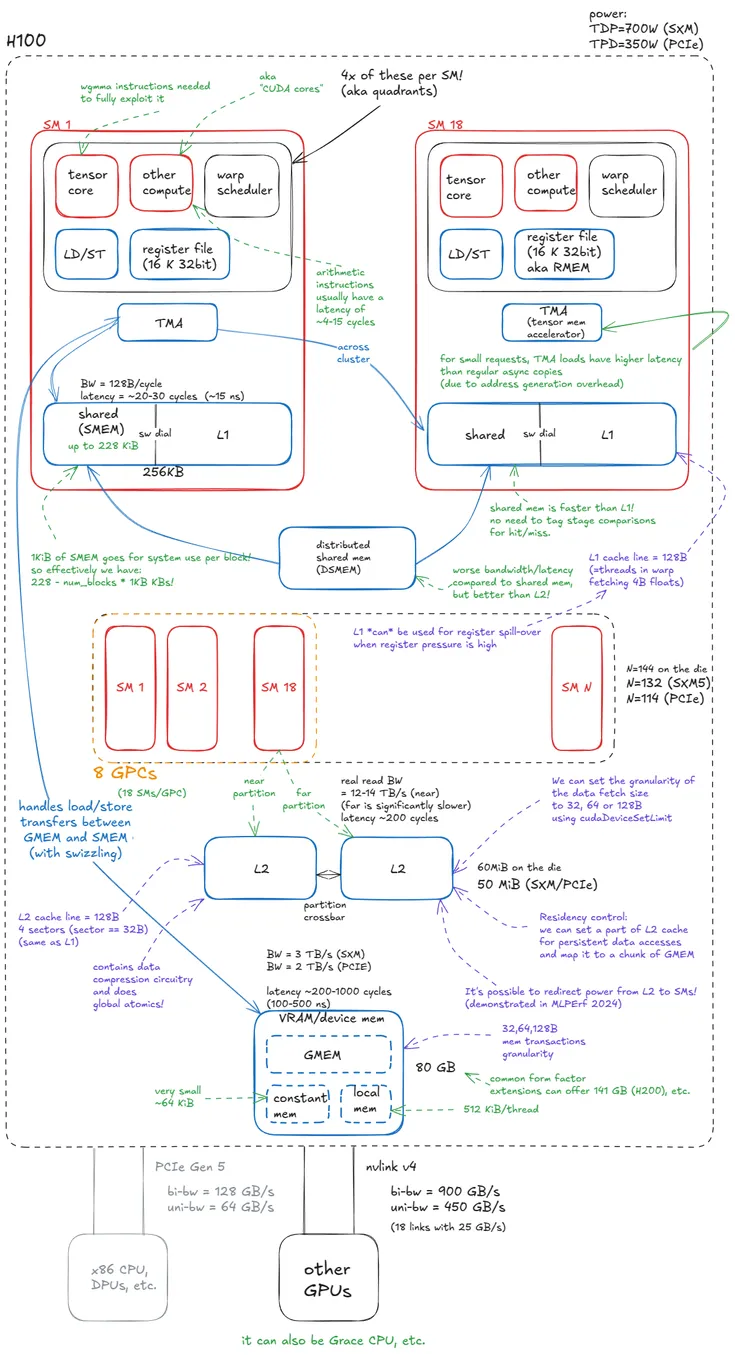

NVIDIA Hopper packs serious architectural tricks. At the core: **Tensor Memory Accelerator (TMA)**, **tensor cores**, and **swizzling**—the trio behind async, cache-friendly matmul kernels that flirt with peak throughput. But folks aren't stopping at cuBLAS. They're stacking new tactics: **warp-group MMA**, SMEM pipelining, **Hilbert curve scheduling**, and **cluster-wide data reuse**. All in plain CUDA C++ with a dusting of inline PTX. No magic libraries, just smart scheduling and brutal efficiency.

Give a Pawfive to this post!

Start writing about what excites you in tech — connect with developers, grow your voice, and get rewarded.

Join other developers and claim your FAUN.dev() account now!