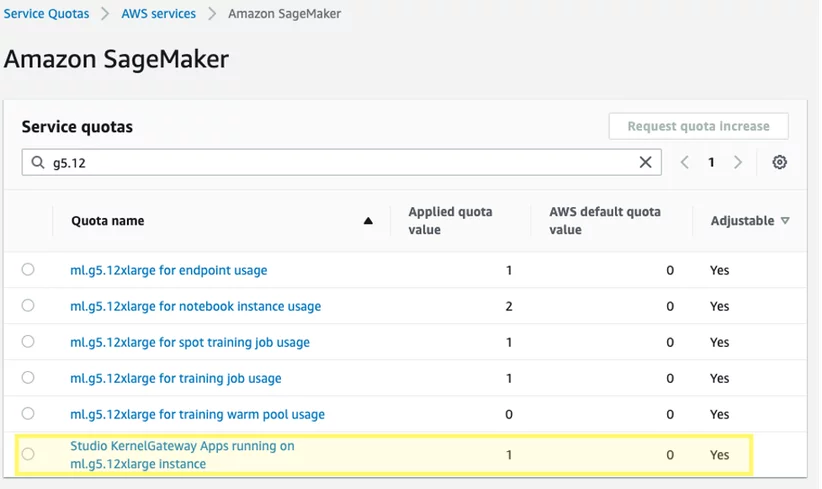

Fine-tuning large language models (LLMs) in Amazon SageMaker notebooks can improve their performance on domain-specific tasks. Combining Hugging Face's parameter-efficient fine-tuning (PEFT) library with quantization through bitsandbytes makes it possible to fine-tune extremely large models interactively on a single notebook instance, for example Falcon-40B on an ml.g5.12xlarge instance.
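
To see why quantization matters here, a rough back-of-the-envelope estimate helps. The sketch below assumes roughly 40 billion parameters (taken from the model name) and the 4 NVIDIA A10G GPUs with 24 GB each that an ml.g5.12xlarge provides. It counts model weights only, ignoring activations, adapter weights, optimizer state, and quantization metadata, so treat the numbers as a lower bound rather than a measurement.

```python
# Weights-only memory estimate for loading a ~40B-parameter model.
# Assumption: 40e9 parameters (approximation from the model name).
PARAMS = 40e9
GPU_COUNT = 4      # ml.g5.12xlarge: 4 NVIDIA A10G GPUs
GPU_MEM_GB = 24    # 24 GB of memory per A10G

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "4-bit": 0.5}


def weight_memory_gb(num_params, bytes_per_param):
    """Return the memory needed for the weights alone, in GB (1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


total_gpu_gb = GPU_COUNT * GPU_MEM_GB
estimates = {
    name: weight_memory_gb(PARAMS, size) for name, size in BYTES_PER_PARAM.items()
}

for name, gb in estimates.items():
    share = gb / total_gpu_gb
    print(f"{name:>5}: {gb:6.1f} GB of {total_gpu_gb} GB total ({share:.0%})")
```

Under these assumptions, half-precision weights alone take up most of the instance's GPU memory, while 8-bit or 4-bit weights leave room for activations and the small set of trainable parameters that PEFT adds.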
