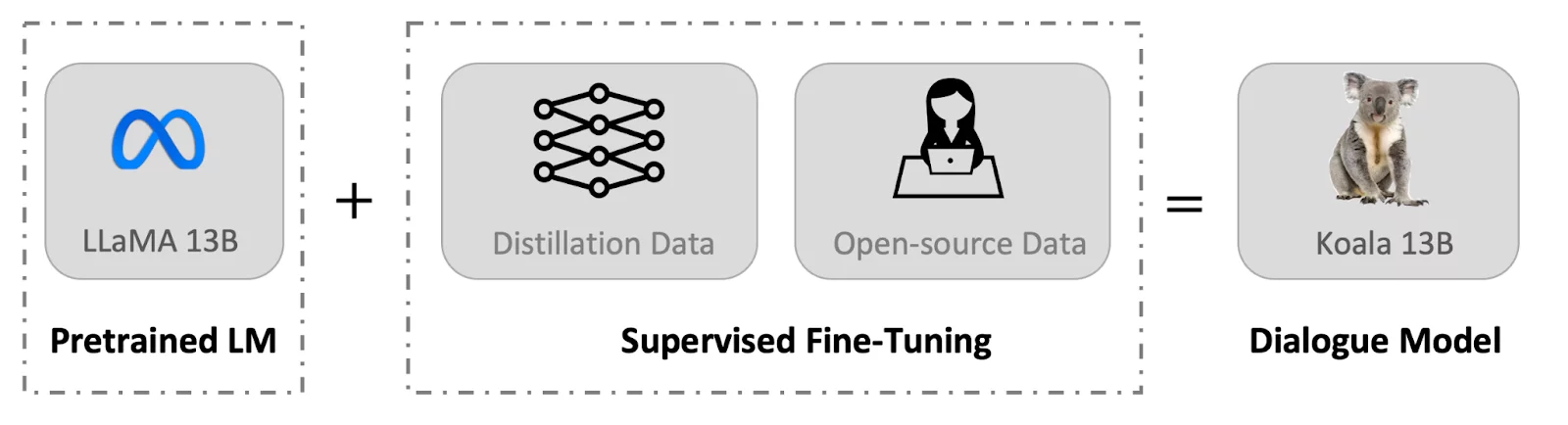

The blog post introduces Koala, a chatbot trained by fine-tuning Meta’s LLaMA on dialogue data gathered from the web.

- The model is evaluated against ChatGPT and Stanford’s Alpaca and performs competitively with both.

- The results suggest that models that are small enough to be run locally can capture much of the performance of their larger cousins if trained on carefully sourced data.

- The post emphasizes the need to curate high-quality datasets in order to build safer, more factual, and more capable models.

- However, Koala has major shortcomings in terms of content, safety, and reliability, and should not be used outside of research.