The article explains how large language models such as OpenAI's ChatGPT work, and examines their strengths and weaknesses.

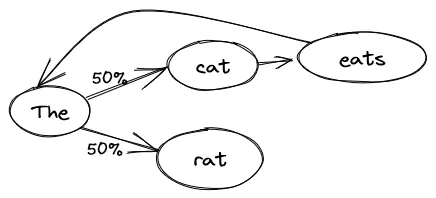

- These models generate text probabilistically, predicting each next word from patterns learned in their training data, and they use word embeddings (numeric vectors) to represent the meaning of words.

- They also learn relationships between words and take the wider context of a passage into account, not just individual words.

- However, they struggle to understand nonverbal communication, to apply logical rules consistently, and to perform mathematical calculations accurately.

- The article also explores the potential impact of language models on the job market and society, as well as the risks associated with their development.

- To use GPT productively, apply it to tasks that are either fuzzy and error-tolerant (such as drafting a marketing email) or easy to verify, whether by a (non-AI!) program or by a human in the loop.
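The two mechanisms from the first bullets, word embeddings and probabilistic next-word generation, can be illustrated with a toy sketch. It assumes hand-picked three-dimensional vectors and made-up next-word scores; the names `EMBEDDINGS`, `analogy`, and `sample_next` are hypothetical, and this is not how ChatGPT is actually implemented. It shows only two ideas: related words get vectors pointing in similar directions (so `king - man + woman` lands near `queen`), and generation picks each next word at random, weighted by probabilities computed from scores with a softmax. In a real model those scores come from a neural network that reads the preceding context; here they are fixed by hand.

```python
import math
import random

# Hypothetical 3-dimensional word vectors, chosen by hand for illustration.
# Real models learn vectors with hundreds or thousands of dimensions.
EMBEDDINGS = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man": [0.1, 0.9, 0.1],
    "woman": [0.1, 0.2, 0.8],
    "apple": [0.2, 0.1, 0.1],
}


def cosine(a, b):
    """Cosine similarity: 1.0 means the vectors point in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def analogy(a, b, c):
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding the inputs."""
    target = [x - y + z for x, y, z in zip(EMBEDDINGS[a], EMBEDDINGS[b], EMBEDDINGS[c])]
    candidates = [w for w in EMBEDDINGS if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(EMBEDDINGS[w], target))


def softmax(scores):
    """Turn raw scores into a probability distribution over tokens."""
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - top) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def sample_next(scores, rng):
    """Draw one next token at random, weighted by its softmax probability."""
    probs = softmax(scores)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


if __name__ == "__main__":
    print(analogy("king", "man", "woman"))  # queen
    # Hypothetical scores for the word following "The cat sat on the"
    scores = {"mat": 3.0, "sofa": 2.0, "moon": -1.0}
    print(softmax(scores))  # mat ≈ 0.72, sofa ≈ 0.27, moon ≈ 0.01
    rng = random.Random(0)
    print([sample_next(scores, rng) for _ in range(5)])
```

Because `sample_next` draws at random, the same prompt can yield different continuations; always taking the single most likely word instead would make the output deterministic.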
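The "easily verifiable by a (non-AI!) program" case from the last bullet can be as simple as recomputing arithmetic the model claims, which also guards against the calculation errors noted earlier. A minimal sketch, assuming the model was prompted to answer in the form `a op b = c`; that format, the function `check_claim`, and the sample answers are hypothetical, not part of any OpenAI API or real model output.

```python
import re
from typing import Optional

# Hypothetical answer format requested from the model: "<int> <op> <int> = <int>"
CLAIM = re.compile(r"^\s*(-?\d+)\s*([-+*])\s*(-?\d+)\s*=\s*(-?\d+)\s*$")

OPS = {
    "+": lambda a, b: a + b,
    "-": lambda a, b: a - b,
    "*": lambda a, b: a * b,
}


def check_claim(text: str) -> Optional[bool]:
    """Recompute a model's arithmetic claim with ordinary integer math.

    Returns True if the claim is correct, False if it is wrong, and None if
    the text is not in the expected format (so a human should review it).
    """
    match = CLAIM.match(text)
    if match is None:
        return None
    a, op, b, claimed = match.groups()
    return OPS[op](int(a), int(b)) == int(claimed)


if __name__ == "__main__":
    # Made-up model answers for illustration
    answers = [
        "1234 * 5678 = 7006652",  # True
        "1234 * 5678 = 7006552",  # False
        "about seven million",    # None
    ]
    for answer in answers:
        print(f"{answer!r}: {check_claim(answer)}")
```

Returning `None` rather than `False` keeps "wrong" separate from "could not check", and the second case is where a human in the loop takes over.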