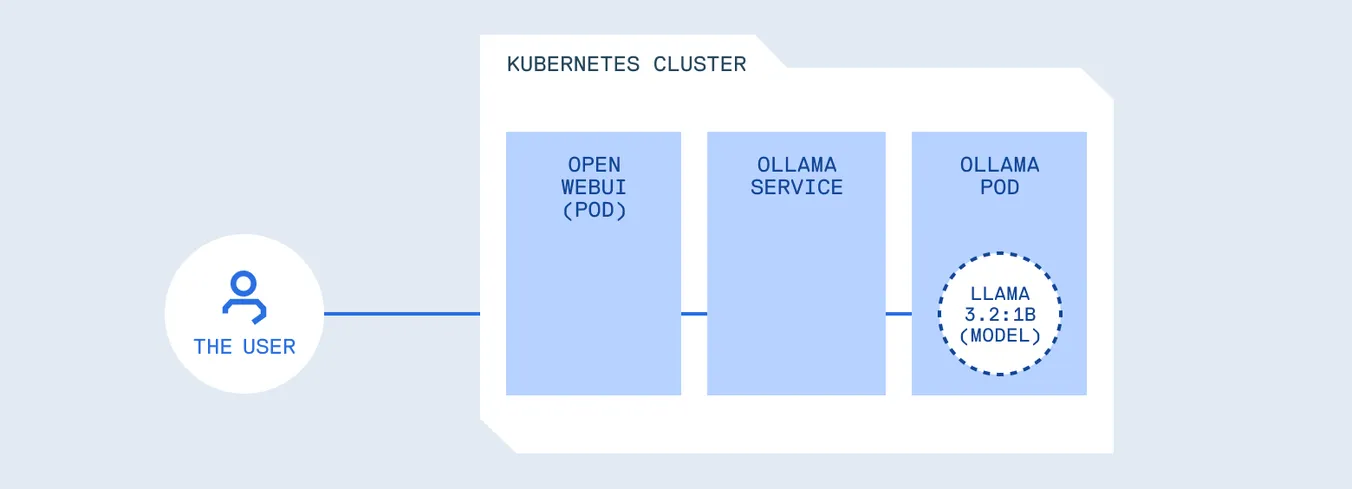

Running LLMs on Kubernetes opens up a new can of worms: risks that infrastructure hardening alone won’t catch. You need a policy-aware gateway that vets inputs, locks down tool use, and allowlists models. No shortcuts.

This post walks through a reference gateway build using mirrord (for fast, in-cluster tinkering) and Cloudsmith (to track and secure every last artifact).
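
To make the three gateway duties above concrete (vetting inputs, restricting tool use, allowlisting models), here is a minimal sketch of a policy check. It is an illustration under assumptions, not the reference build itself: the `Policy` class, the `check_request` function, the field names, the character limit, and the blocked phrase are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    """Hypothetical gateway policy; every field name here is an assumption."""
    allowed_models: frozenset
    allowed_tools: frozenset
    max_prompt_chars: int = 8000
    blocked_phrases: tuple = ("ignore previous instructions",)


def check_request(policy, model, prompt, tools):
    """Return a list of policy violations; an empty list means the request passes."""
    violations = []

    # Model allowlist: only explicitly approved models get through.
    if model not in policy.allowed_models:
        violations.append(f"model not allowed: {model}")

    # Input vetting: a size cap plus a naive phrase filter.
    if len(prompt) > policy.max_prompt_chars:
        violations.append("prompt too long")
    lowered = prompt.lower()
    for phrase in policy.blocked_phrases:
        if phrase in lowered:
            violations.append(f"blocked phrase: {phrase}")

    # Tool lockdown: every requested tool must be on the allowlist.
    for tool in tools:
        if tool not in policy.allowed_tools:
            violations.append(f"tool not allowed: {tool}")

    return violations
```

A gateway built this way would reject any request with a non-empty violation list before it reaches a model. A real deployment would likely need far stronger input checks than a phrase filter; the point here is only the deny-by-default shape.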