From there the user can go to: https://www.ibm.com/products/ibm-watson-natural-language-processing and start using it!

TL;DR:

If your answer is yes, the IBM “Build Lab” team (the team I’m part of) provides an end-to-end solution that makes it easy to deploy a fully operational NLP solution in about an hour, ready to be used right away. But before getting to that, here is a short introduction to what this automation offers DevOps engineers, SREs, and any other curious, tech-savvy reader.

Preamble: What is Watson NLP and why could it interest you?

The “Watson Natural Language Processing (NLP) Library for Embed” provides natural language processing functions for syntax analysis, and pre-trained models for a wide variety of text processing tasks, in a fully embeddable library.

The product page can be found here: https://www.ibm.com/partnerworld/program/embeddableai

Using Watson NLP, you can turn unstructured data into structured data, making the data easier to understand and to transfer. This is particularly useful if you are working with a mix of unstructured and structured data. Examples of such data are call center records, customer complaints, social media posts, or problem reports. The unstructured data is often part of a larger data record that also includes columns of structured data. Extracting meaning and structure from the unstructured data and combining it with the structured columns gives you a deeper understanding of the input data and can help you make better business decisions.
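To make the idea of combining structured columns with information extracted from free text a little more concrete, here is a minimal Python sketch. It does not use Watson NLP at all: the `Ticket` record, the `ISSUE_KEYWORDS` lookup and the `extract_issues` function are hypothetical stand-ins for a real model, chosen only to show the shape of the result.

```python
import re
from dataclasses import dataclass


@dataclass
class Ticket:
    ticket_id: int  # structured column
    product: str    # structured column
    comment: str    # unstructured free text


# Stand-in for an NLP model: a hand-written keyword lookup, NOT Watson NLP.
ISSUE_KEYWORDS = {
    "refund": "billing",
    "charged": "billing",
    "crash": "stability",
    "slow": "performance",
}


def extract_issues(text: str) -> list:
    """Return the sorted issue categories whose keywords appear in the text."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return sorted({cat for kw, cat in ISSUE_KEYWORDS.items() if kw in words})


def enrich(ticket: Ticket) -> dict:
    """Combine the structured columns with fields derived from the free text."""
    return {
        "ticket_id": ticket.ticket_id,
        "product": ticket.product,
        "issues": extract_issues(ticket.comment),
    }


ticket = Ticket(1, "router", "The app is slow and I was charged twice.")
row = enrich(ticket)
print(row)
```

With a real NLP model in place of the keyword lookup, the `issues` field would come from the model's predictions, but the enriched row would still sit next to the original structured columns in the same way.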

IBM Watson NLP For Embed delivers IBM’s state-of-the-art Watson NLP library wrapped in a container image with cloud APIs ready to serve NLP models at internet scale. Also included is access to an extensive catalog of pretrained models ready to run many NLP tasks.

The Watson NLP Runtime image contains generated proto-API files for all the Watson NLP tasks. These tasks are served by a gRPC runtime — a cross-platform, open source, high performance Remote Procedure Call framework with dynamic, multi-model support. The proto-API files and the gRPC runtime are wrapped in a REST gateway, for quick demos and ease of use.
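As a rough illustration of what calling the REST gateway can look like, here is a small Python sketch that only uses the standard library. The base URL (`localhost:8080`), the endpoint path, the `grpc-metadata-mm-model-id` header and the model id `syntax_izumo_lang_en_stock` are assumptions based on my reading of IBM's public examples and are not stated in this article, so please check them against the documentation of the runtime version you deploy.

```python
import json
import urllib.request

# Assumptions (not from this article): the runtime is reachable locally on
# port 8080 and a syntax model with this id has been loaded.
BASE_URL = "http://localhost:8080"
SYNTAX_PATH = "/v1/watson.runtime.nlp.v1/NlpService/SyntaxPredict"
MODEL_ID = "syntax_izumo_lang_en_stock"


def build_syntax_request(text, base_url=BASE_URL, model_id=MODEL_ID):
    """Build (but do not send) a POST request for a syntax prediction."""
    body = json.dumps({"rawDocument": {"text": text}}).encode("utf-8")
    return urllib.request.Request(
        url=base_url + SYNTAX_PATH,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Accept": "application/json",
            # Tells the multi-model runtime which loaded model should serve the call.
            "grpc-metadata-mm-model-id": model_id,
        },
    )


def predict_syntax(text, timeout=10.0):
    """Send the request and return the decoded JSON response as a dict."""
    request = build_syntax_request(text)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Calling `predict_syntax("Watson NLP is easy to embed.")` only works against a running runtime. Building the request is kept separate from sending it so that the URL, headers and body can be inspected without a server.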

That was a very long introduction to the embeddable AI side of things. If you’re still reading, it’s time to look at a bit of automation, which means introducing another toolkit…

What is the “Techzone Accelerator Toolkit”?

As an end-user (DevOps, SRE, developer…) of a specific technology, such as the library described above, you don’t want to spend time on the deployment side of the solution; you want to focus on where the value really is. Deploying any kind of complex solution is a big piece of work, and it becomes even more complex when the aim is to run it on Kubernetes-based environments across different clouds or distributions (e.g. IBM, Azure, AWS, Kind, Minikube…). A team of IBM experts has built a toolkit that makes this possible with very little effort.

This toolkit is an opinionated open-source (yes, open-source) framework built on top of the standard technologies Terraform and ArgoCD. It automates the deployment and operation of infrastructure, such as Red Hat OpenShift clusters (or other flavors of Kubernetes), and of applications. The landing page of the tool is here: https://operate.cloudnativetoolkit.dev/

Currently there are 205 modules in GA or beta status, ready to be deployed on a variety of Kubernetes-based platforms.

OK, now let’s get to the aim of this article: using best-of-breed tools to deploy an embeddable AI in a matter of minutes! ;p)

The “Build Lab” team brings together the best of both worlds!

To make it as easy and smooth as possible for an end-user (partner, customer, or curious developer) to use the embeddable AI solution, our team built an automated solution that performs an end-to-end deployment in about one hour. With it, a DevOps engineer or SRE can deploy the NLP solution in their preferred environment.

The asset we provide, “Automation for IBM Watson Deployments”, can be accessed directly from this public repository: https://github.com/IBM/watson-automation

What does the Build Lab “Watson-Automation” asset do?

This asset is a ready-to-use solution that shows how to rapidly deploy the NLP module in an environment and how to use it. Its essential aim is to remove the burden of a sophisticated deployment task so that you can focus on the final goal of using AI.

The solution relies on the automation mechanism provided by the “Techzone Accelerator Toolkit” for the deployment part. The asset provides a “BOM”, or bill of materials, which describes the modules to be deployed.

The BOM makes it quite easy to assemble the building blocks that make up the solution.

A scenario, or happy path, is provided to deploy a simple Watson NLP setup on OpenShift as easily as possible. At the end of the happy path, the following infrastructure, service, and application components will have been deployed:

- A managed OpenShift cluster on IBM Cloud on VPC.

- ArgoCD for GitOps automation running in the OpenShift cluster.

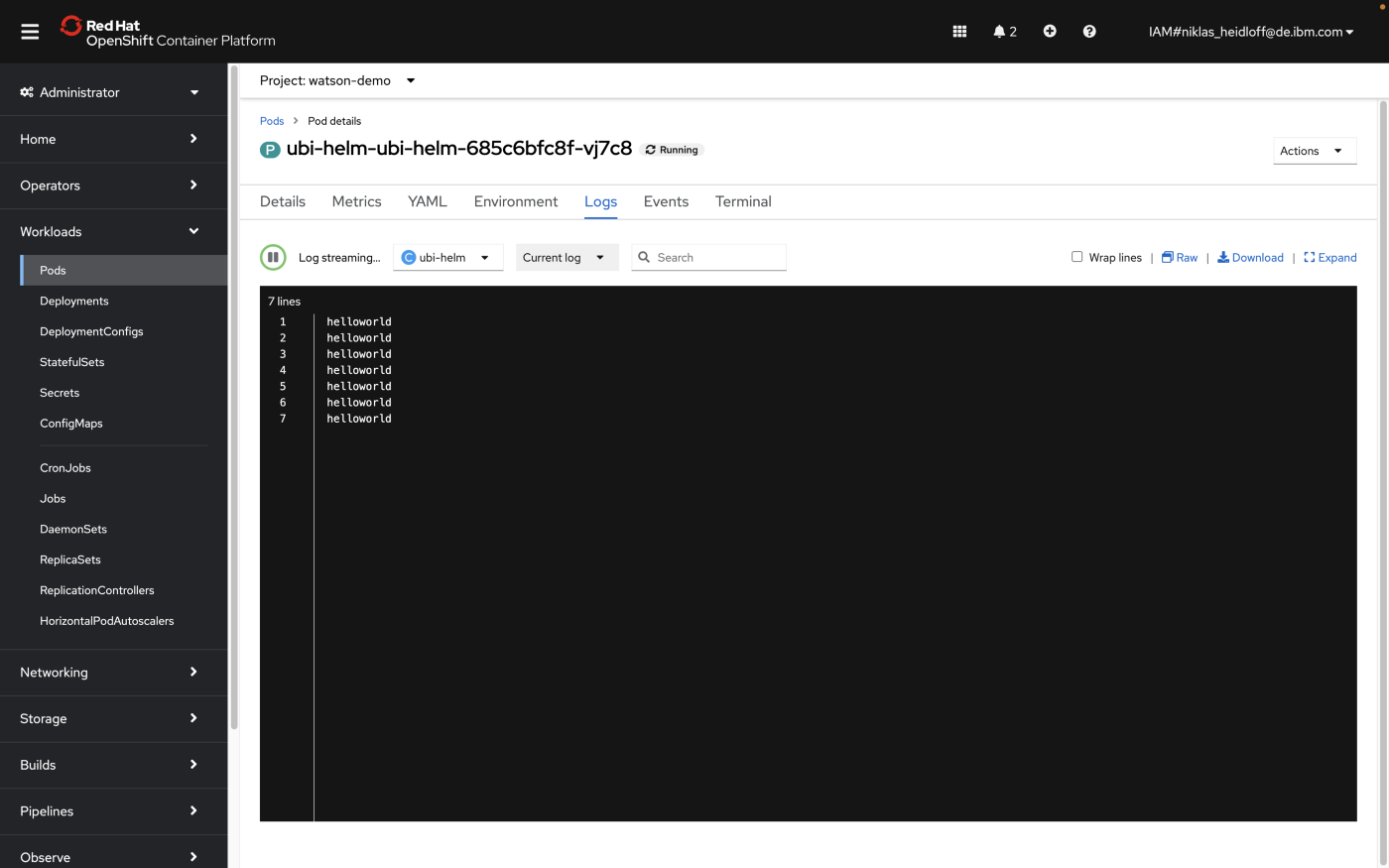

- A Watson NLP pod.

  - A runtime container which provides gRPC and REST interfaces via a service.

  - One model container for syntax predictions.

- A sample consumer application pod (a client of the NLP module).

  - A UBI container which is configured to run a command that invokes the Watson NLP runtime container via REST.

- Snippets to invoke Watson NLP via gRPC and REST from a local environment.
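The consumer pod in the list above reaches the runtime through a Kubernetes service rather than through `localhost`. As a hedged sketch of that pattern, the Python below builds the standard in-cluster DNS URL for a service and retries a call a few times, since a consumer may start before the runtime is ready. The service name, namespace and port in the usage note are hypothetical, and the retry behaviour is my own assumption, not something the asset is documented to do.

```python
import time
from typing import Callable


def service_url(service: str, namespace: str, port: int) -> str:
    """Build the standard Kubernetes in-cluster DNS URL for a service."""
    return f"http://{service}.{namespace}.svc.cluster.local:{port}"


def call_with_retry(
    send: Callable[[], dict],
    attempts: int = 5,
    delay_s: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> dict:
    """Call send() until it succeeds or the attempts are exhausted.

    Only OSError is retried; connection errors raised by urllib and the
    socket layer are subclasses of OSError.
    """
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            return send()
        except OSError as err:
            last_error = err
            if attempt < attempts:
                sleep(delay_s)  # wait before the next attempt
    raise RuntimeError(f"runtime not reachable after {attempts} attempts") from last_error
```

For example, `service_url("watson-nlp-runtime-service", "watson-demo", 8080)` (both names are made up here) gives the base URL a consumer pod could use, and `send` would be any function that performs the actual REST call against it.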

The GitHub repository also provides the instructions you need if you already have an existing cluster and just want to try the NLP module against it.
