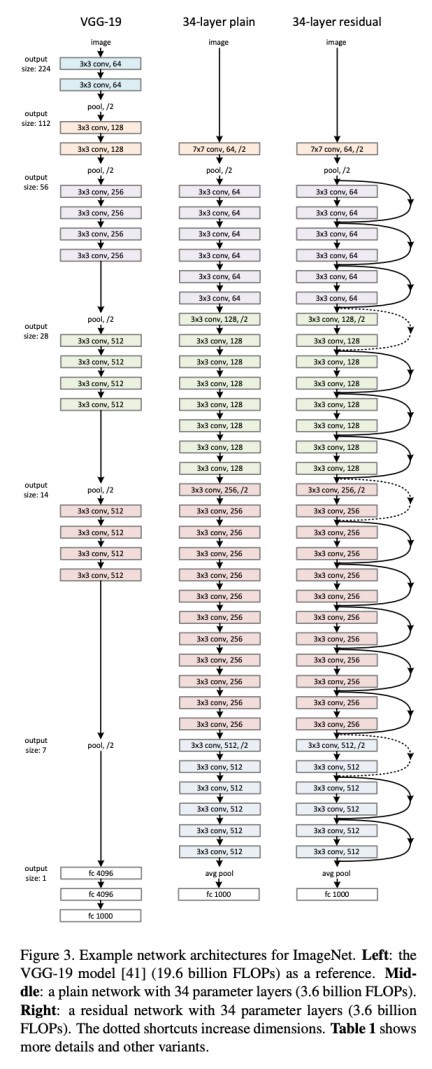

Understanding ResNet is important: much of the recent progress in computer vision tasks traces back to the breakthrough of the ResNet architecture.

The architecture makes it possible to train much deeper networks, with 150+ layers.

It is an innovative neural network that was first introduced by Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun in their 2015 computer vision research paper titled ‘Deep Residual Learning for Image Recognition’.

More details can be found in the paper itself, Deep Residual Learning for Image Recognition.

Before ResNet, the theory was that adding more layers should reduce the loss and increase accuracy, but in practice that did not happen. As more layers were added, accuracy actually decreased.

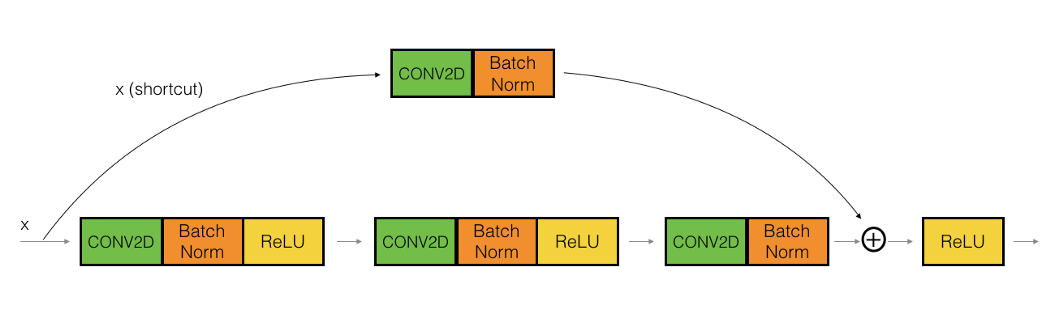

Deep convolutional neural networks suffer from the “vanishing gradient problem”: during backpropagation, the gradient shrinks as it is passed back through the layers, so the weights of the early layers hardly change. To overcome this problem, ResNet introduces skip connections.
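To get a feel for why the gradient vanishes, here is a toy sketch, not a real network. It assumes every layer is a single scalar multiplication, so the chain rule turns into a plain product. The function names and the weight value are made up for illustration.

```python
# Toy model: every "layer" is a scalar map, so backpropagation is just a product.

def plain_gradient(weight, depth):
    """Gradient through `depth` stacked layers of the form y = weight * x."""
    grad = 1.0
    for _ in range(depth):
        grad *= weight          # d(weight * x)/dx = weight
    return grad

def residual_gradient(weight, depth):
    """Same stack, but each layer adds its input back: y = x + weight * x."""
    grad = 1.0
    for _ in range(depth):
        grad *= 1.0 + weight    # d(x + weight * x)/dx = 1 + weight
    return grad

# Illustrative values only: a small weight and a 50-layer stack.
plain = plain_gradient(0.01, 50)        # shrinks towards zero
residual = residual_gradient(0.01, 50)  # stays on the order of 1
print(plain, residual)
```

In this simplified setting, the extra `1` contributed by the skip path is what keeps the product from collapsing to zero.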

Skip connection: adding the original input to the output of the convolutional block, so the block computes F(x) + x instead of just F(x).
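A minimal sketch of that addition in plain Python. Here `conv_block` is only a hypothetical stand-in for the real convolutional layers, and the inputs are flat lists rather than image tensors.

```python
def conv_block(x):
    """Hypothetical stand-in for the convolutional layers F(x): a scaled ReLU."""
    return [0.1 * max(0.0, v) for v in x]

def residual_block(x, block=conv_block):
    """Skip connection: add the original input to the block output, y = F(x) + x."""
    fx = block(x)
    return [f + v for f, v in zip(fx, x)]

x = [1.0, -2.0, 3.0]
y = residual_block(x)

# If the block learns to output zeros, the whole unit reduces to the identity.
unchanged = residual_block(x, block=lambda v: [0.0] * len(v))
```

The last line hints at why this helps: a block that has nothing useful to add can simply pass its input through, so extra layers should not make things worse.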

Basically, ResNet is built from two kinds of blocks: an identity block and a convolutional block.
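As a rough sketch of how the two differ, assume the usual distinction: the identity block keeps the input size, so the shortcut is the input itself, while the convolutional block changes the size, so the shortcut is projected to match. Small matrices stand in for the convolution layers here, and all names are hypothetical.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]

def relu(vector):
    return [max(0.0, v) for v in vector]

def identity_block(x, w_main):
    """Main path keeps the size of x, so the shortcut is x itself."""
    fx = matvec(w_main, x)
    if len(fx) != len(x):
        raise ValueError("identity shortcut needs matching shapes")
    return relu([f + v for f, v in zip(fx, x)])

def convolutional_block(x, w_main, w_shortcut):
    """Main path changes the size, so the shortcut is projected to match it."""
    fx = matvec(w_main, x)
    shortcut = matvec(w_shortcut, x)
    return relu([f + s for f, s in zip(fx, shortcut)])

x = [1.0, 2.0]
same_size = identity_block(x, [[0.5, 0.0], [0.0, -1.0]])
new_size = convolutional_block(
    x,
    [[1, 0], [0, 1], [1, 1]],   # main path: 2 values in, 3 values out
    [[1, 0], [0, 1], [0, 0]],   # shortcut projection: 2 values in, 3 values out
)
```

In a real ResNet the main path is a stack of convolution, batch normalization, and ReLU layers, and the projection on the shortcut is typically a 1x1 convolution.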

7.1 Identity Block