The need for a multi-region cluster

Why do we really need to set up a multi-region cluster?

The simplest answer is to make our application highly available and fault tolerant, so that if one region has an issue, our application can still serve traffic using the secondary cluster.

We sometimes also set up multi-region clusters to reduce latency by placing a cluster close to the clients' location. This is especially helpful when our clients are spread across multiple locations around the globe.

You might have heard of the term active-passive architecture. Here we will build an active-active architecture instead: both the primary and secondary clusters will serve traffic (we are running the clusters anyway, so we might as well use them :D).

Use Case for this story

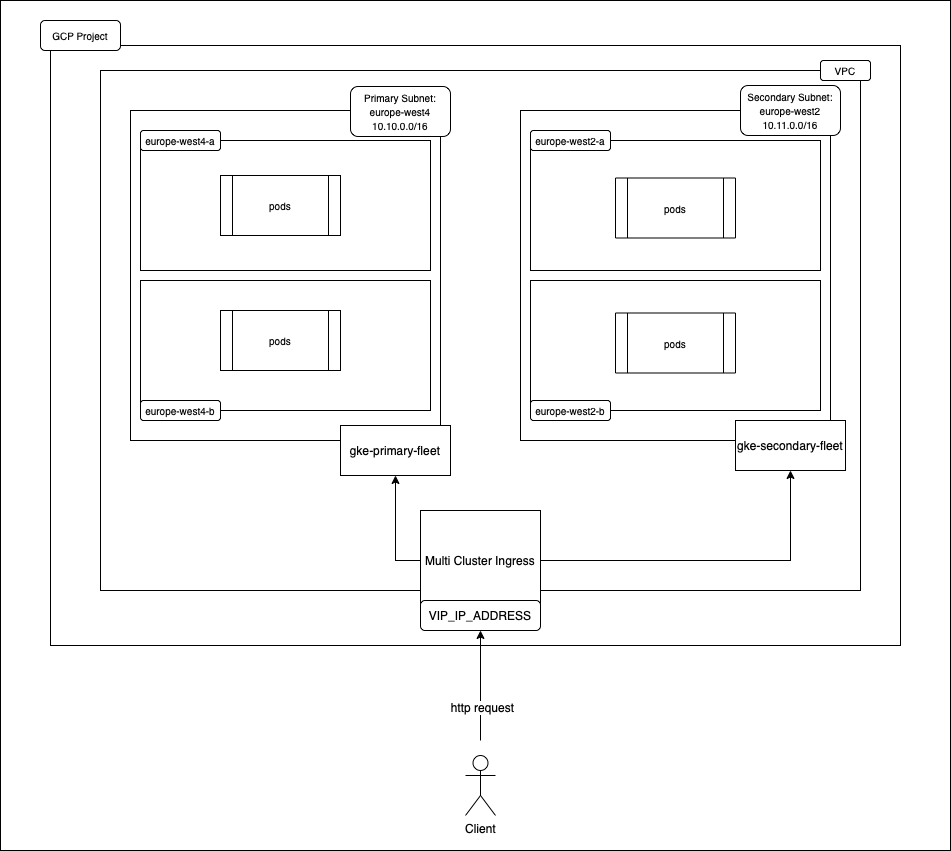

We will write a Terraform script to create infrastructure on Google Cloud Platform, mainly Google Kubernetes Engine (GKE) clusters in two different regions, set up so that we can use both of them at the same time. We will then deploy a sample application on both clusters.

I will share the GitHub repository URL at the end of the story.
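To give a rough idea of the shape of this use case, here is a minimal sketch of how two GKE clusters in two regions could be declared in Terraform. It is not the script from the repository, only an illustration under stated assumptions: the project ID, the two regions, and the `demo-` cluster names are hypothetical placeholders, and the sketch leaves out networking and the load balancing needed to actually send traffic to both clusters.

```hcl
terraform {
  required_providers {
    google = {
      source = "hashicorp/google"
    }
  }
}

provider "google" {
  # Assumption: placeholder project ID, replace with your own.
  project = "my-project-id"
}

locals {
  # Assumption: example regions; any two regions that support GKE would do.
  regions = toset(["us-central1", "europe-west1"])
}

# One regional cluster per entry in local.regions.
resource "google_container_cluster" "regional" {
  for_each = local.regions

  name     = "demo-${each.key}" # hypothetical naming scheme
  location = each.key           # a region (not a zone) makes the cluster regional

  # For a regional cluster this count applies per zone in the region.
  initial_node_count = 1
}
```

Using `for_each` over a set of regions keeps both clusters identical apart from their location, which fits an active-active setup where either cluster should be able to serve the same traffic.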

Prerequisites

- GCP Account (Free Tier will also work)

- Terraform

- Python 3

- gcloud CLI

- kubectl

- IDE (VS Code or IntelliJ IDEA preferably)

Setup

GCP Account

Click here to go to Google Cloud Platform, then click the Get Started For Free button in the top right corner (this might change in the future).

gcloud CLI

Click here to go to the official gcloud installation guide and install the CLI for your machine's operating system. (Python 3 is a prerequisite for this.)

Let’s now run the following commands to configure the gcloud CLI.