Terraform Stacks: A Deep-Dive for Azure Practitioners in Europe

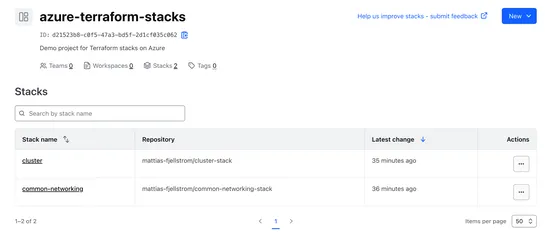

Terraform Stacks just hit GA on HCP Terraform, and they bring some real structure to the chaos. Think modular, declarative, and way less workspace spaghetti. Build reusable components (each built from a module), bundle them into deployments, and wire up stacks using publish/consume patterns, complete with automated...