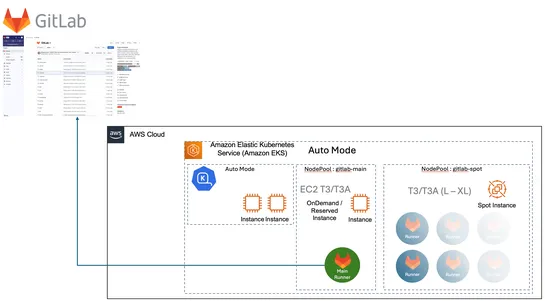

Streamline your containerized CI/CD with GitLab Runners and Amazon EKS Auto Mode

GitLab Runners now work with Amazon EKS Auto Mode. That means hands-off infra, smarter scaling, and built-in AWS security. Runners spin up on EC2 Spot Instances, so teams can cut CI/CD compute costs by as much as 90%, without hacking together flaky pipelines...