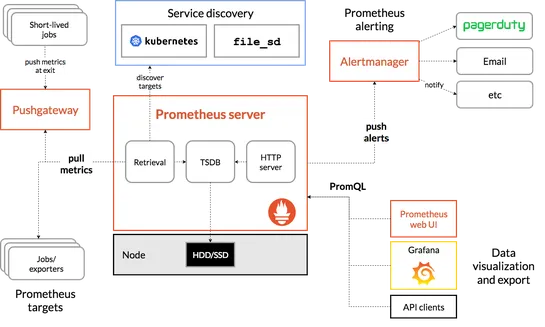

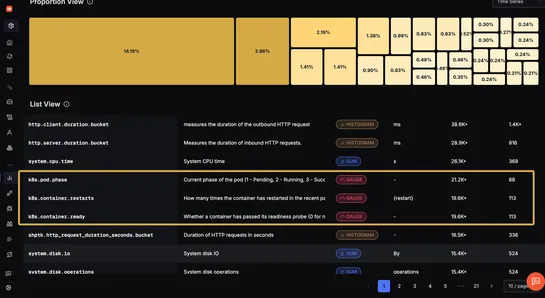

Implementing High-Performance LLM Serving on GKE: An Inference Gateway Walkthrough

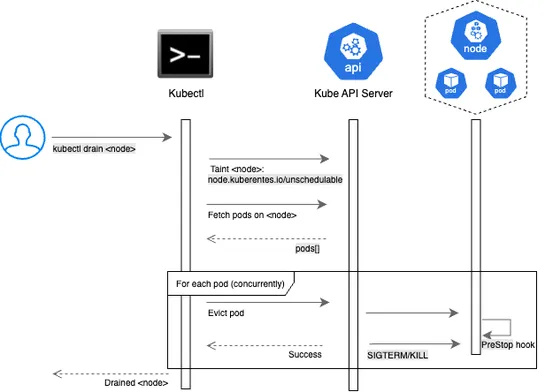

GKE Inference Gateway flips LLM serving on its head with GPU-aware smart routing. By routing requests based on real-time KV cache state, it raises throughput and cuts latency.