Driving Content Delivery Efficiency Through Classifying Cache Misses

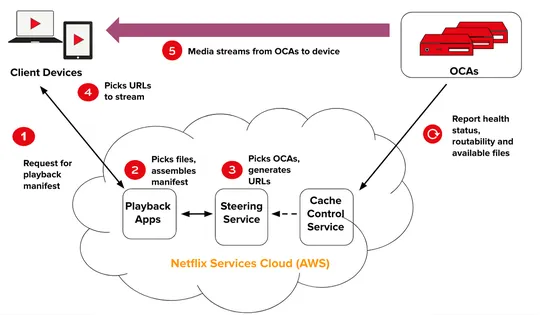

Netflix’s Open Connect program rewires the streaming game. Enter Open Connect Appliances (OCAs): these local units demolish latency, curb cache misses, and pump up streaming power. How? By magnetizing servers with network proximity wizardry. Meanwhile, Kafka rolls up its sleeves, juggling low-latency logs l..