Guardians of the Agents

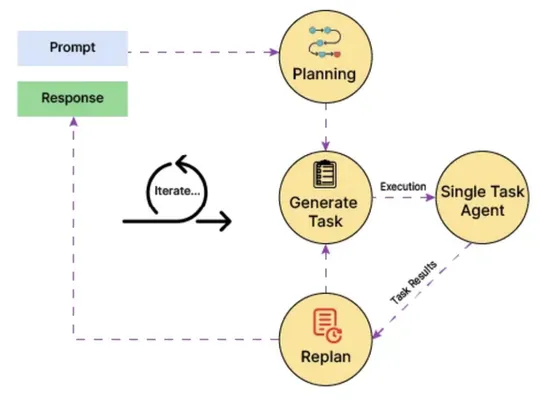

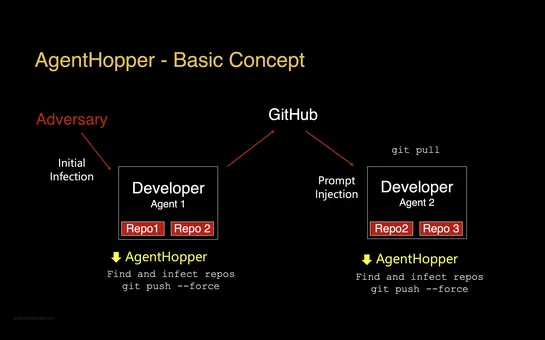

A new static verification framework wants to make runtime safeguards look lazy. It slaps **mathematical safety proofs** onto LLM-generated workflows *before* they run, so there is no more crossing fingers at execution time. The setup decouples **code from data**, then runs checks with tools like **CodeQL** and ...