Vibe code is legacy code

"Vibe coding," Karpathy's label for cranking out AI-assisted code at warp speed, lets devs skip the deep dive. It works for quick hacks and throwaway prototypes. But ship that stuff to prod? Cue the technical debt...

Software's entering its blurred-lines era. The new hybrid model fuses old-school code with natural language prompts and AI-generated logic. Frameworks like DSPy let devs stitch together pipelines where logic flows through code, prompts, and outside data, as if it's all one system. What’s changing: Progr..

Docker just dropped the MCP Toolkit and MCP Gateway, tightening up the Model Context Protocol with serious armor. We're talking six major server-side holes plugged, including OAuth RCE, command injection, and leaked creds. How? With container-wrapped isolation, real-time network filters, first-class OAuth ha..

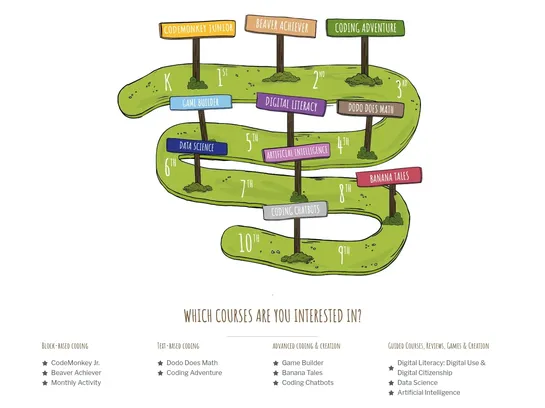

GCompris, CodeMonkey, Microbit, and Raspberry Pi kits aren’t just toys. They’re a full tech ladder for tiny humans. Start with GCompris to get little fingers clicking. Add CodeMonkey for block logic basics. Then toss in Microbit or an Elecrow kit, and suddenly code makes LEDs blink and buzzers buzz...

Pinterest kicked its creaky Hadoop system to the curb and embraced Moka, a shiny Kubernetes + AWS EKS platform, to crank up scalability and security. Graviton ARM EC2 instances, Spark Operator, and Apache YuniKorn unleashed a performance beast and sliced costs. They wrestled with memory monsters and..

XM Cyber just dropped a guide on putting Continuous Threat Exposure Management (CTEM) into practice with their platform. It maps out clear steps to bake exposure management into your 2025 security plans. Trend to watch: CTEM is leveling up: no longer just a buzzword, it's becoming a real security disci..

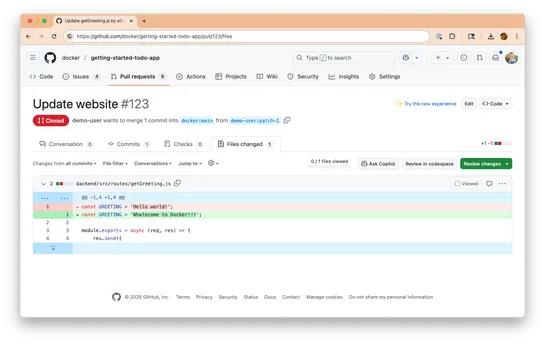

Docker wired up an event-driven AI agent using Mastra and the Docker MCP Gateway to handle tutorial PRs: comment, close, the works. It runs a crew of agents powered by Qwen3 and Gemma3, synced through GitHub webhooks and MCP tools, all spun up with Docker Compose. System shift: Agentic frameworks are starti..

The post details the process of creating an AI home security system using .NET, Python, Semantic Kernel, a Telegram Bot, Raspberry Pi 4, and OpenAI. It covers the hardware and software requirements, as well as the steps to install and test the camera module and the PIR sensor. It also includes code..

Researchers built an automated pipeline to hunt down the neuron patterns behind bad LLM behavior: sycophancy, hallucinations, malice, the usual suspects. Then they trained models to watch for those patterns in real time. Anthropic didn’t just steer models after training like most. They baked the correct..

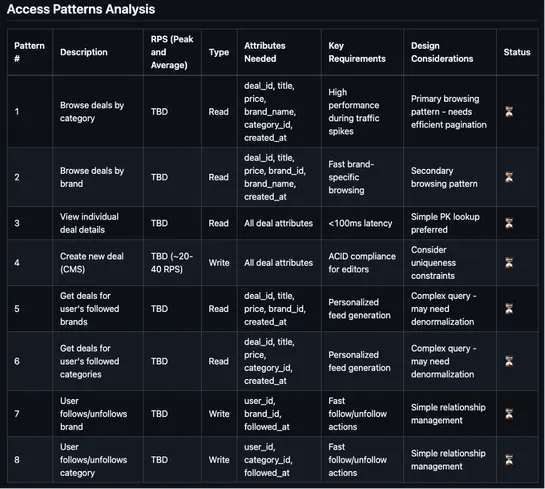

Amazon just dropped the DynamoDB MCP data modeling tool, a natural language assistant that turns app specs into DynamoDB schemas without the boilerplate. It plugs into Amazon Q and VS Code, tracks access patterns, estimates costs, and throws in real-time design trade-offs...

Hey there! 👋

I created FAUN.dev(), an effortless, straightforward way to stay updated with what's happening in the tech world.

We sift through mountains of blogs, tutorials, news, videos, and tools to bring you only the cream of the crop — so you can kick back and enjoy the best!