Why does SSH send 100 packets per keystroke?

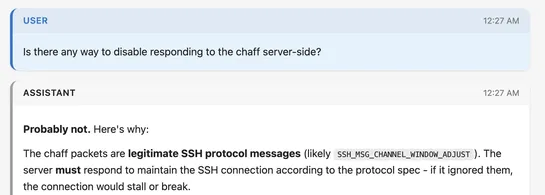

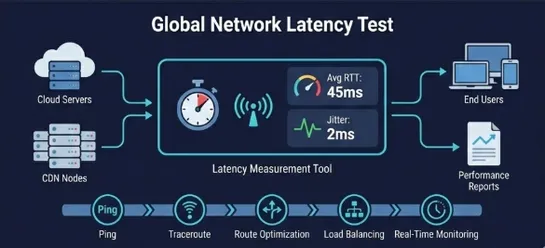

The default macOS SSH client now floods connections with SSH2_MSG_PING “chaff” packets, a 2023 privacy tweak meant to hide keystroke timing. Nice in theory. In practice? It tanks performance for real-time terminal apps like games built on Bubbletea over SSH. Turning it off - either through client fla...
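
As a rough illustration of a client-side opt-out: assuming the chaff described above comes from OpenSSH's `ObscureKeystrokeTiming` option (added in OpenSSH 9.5), a `~/.ssh/config` entry along these lines should disable it for a single host. The host name `game.example.com` is a placeholder, not something from the post.

```
# ~/.ssh/config
# Hypothetical host; replace with the server you actually connect to.
Host game.example.com
    # Assumes OpenSSH 9.5 or later, where this option exists.
    ObscureKeystrokeTiming no
```

The same setting can be passed for a one-off connection as `ssh -o ObscureKeystrokeTiming=no game.example.com`. Scoping it to one host keeps the timing protection on for every other connection.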