There Are Kernel Bugs in Your System That Won’t Be Found for 20 Years

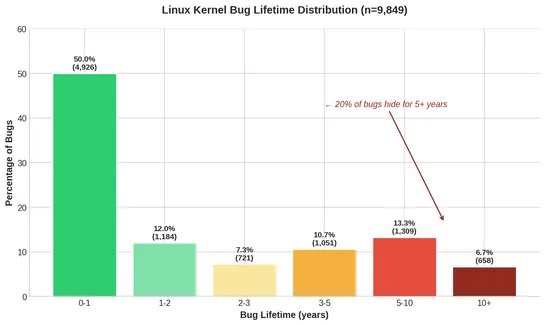

An analysis of Linux kernel bugs shows that some remain undiscovered for more than 20 years, although the average bug lifespan is 2.1 years. The study examined 125,183 bug-fix pairs and found that bugs in certain subsystems live longer than in others. A tool developed for the research identified 92% of historical bugs, and the findings indicate that bug discovery has improved over time.
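
A 2.1-year average can coexist with bugs that survive for two decades because lifetimes are heavily skewed: most bugs are fixed quickly, and a few linger. The sketch below illustrates that arithmetic on a made-up list of bug-fix pairs. The dates, the `lifetime_years` and `summarize` helpers, and the 365.25-day year are all assumptions for illustration; none of them come from the study or its tooling.

```python
from datetime import date
from statistics import mean, median

# Hypothetical (introduced, fixed) date pairs. These are NOT data from the study.
pairs = [
    (date(2020, 1, 1), date(2020, 7, 1)),   # fixed after about half a year
    (date(2019, 3, 1), date(2020, 3, 1)),   # fixed after about one year
    (date(2001, 1, 1), date(2022, 1, 1)),   # fixed after about 21 years
]


def lifetime_years(introduced: date, fixed: date) -> float:
    """Time between the introducing and fixing dates, in 365.25-day years."""
    return (fixed - introduced).days / 365.25


def summarize(bug_fix_pairs):
    """Return mean, median, and maximum lifetime (in years) for the given pairs."""
    lifetimes = [lifetime_years(intro, fix) for intro, fix in bug_fix_pairs]
    return {
        "mean": mean(lifetimes),
        "median": median(lifetimes),
        "max": max(lifetimes),
    }


summary = summarize(pairs)
print(summary)
```

In this toy sample a single long-lived bug pulls the mean well above the median, which is why an average figure alone says little about the longest-surviving bugs.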