Meet the ‘Mad Max’-Loving CEO Challenging Nvidia With a Renegade Chip

June Paik spurned a takeover offer from Meta Platforms last year. Now his South Korean company, FuriosaAI, has an AI chip entering mass production... read more

A long AWS smackdown in US-EAST-1 traced back to a ticking time bomb in DynamoDB’s automated DNS system. The flaw torpedoed EC2 networking, hobbled Lambda and Fargate, and dragged down the Network Load Balancer. Endpoints ghosted. Configs stalled. Everything snowballed. AWS says they’ll upgrade EC2 th.. read more

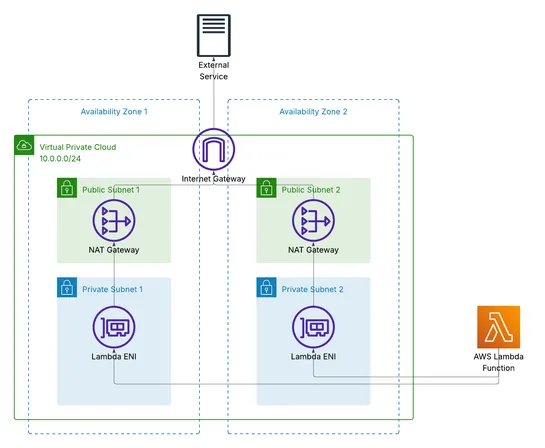

AWS just made a big dent in NAT gateway bills. You can now run Lambda in VPCs with IPv6 and an egress-only Internet gateway: no more always-on NAT draining your wallet. Keep the private subnets locked down. Still get outbound Internet access. IPv6 handles the traffic, slicing out the NAT middleman... read more

A former NASA engineer, now a Google Cloud AI infra alum, rips apart the idea of building GPU datacenters in orbit. His verdict: space is a terrible server rack. Power delivery? A nightmare. Heat dissipation? Worse in a vacuum. Radiation? Frying time. Even a 200kW solar rig (think ISS-sized) could.. read more

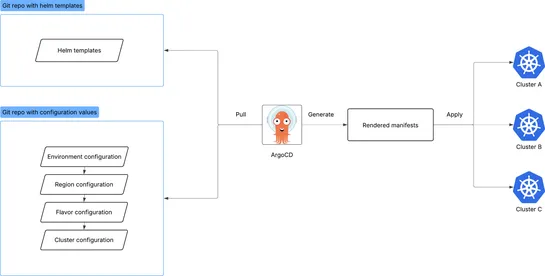

Monday.com ditched ArgoCD's built-in manifest diffing. Instead, they wired up a custom CI renderer that pre-renders Helm charts using real cluster data. Then it compares the desired states across Git branches. The kicker: diffs go to a UI with custom grouping support. Reviews get easier. New devs ge.. read more

TravelEase Inc., a growing travel company, significantly improved its handling of customer inquiries by replacing a basic mailto: link with a modular, serverless, cloud-native system managed with Terraform. This new system automated message validation, processing, storage, and notifications using Lambda fu.. read more

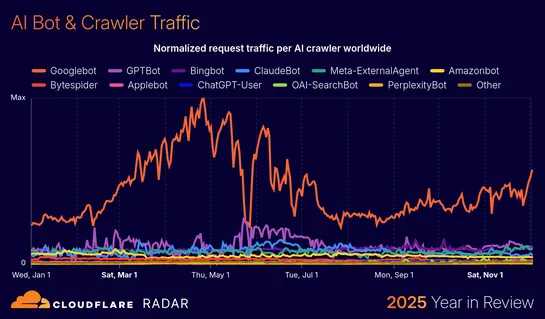

In 2025, Internet growth was driven less by humans and more by AI, with AI crawling and user-triggered access surging while post-quantum encryption secured over half of human web traffic. Security risks intensified as record-breaking DDoS attacks topped 30 Tbps and government-imposed shutdowns accounted for nearly half of major global outages.