TL;DR

Cline-bench launches to offer realistic AI coding benchmarks, pledging $1M to support open source maintainers and enhance research with real-world challenges.

Key Points

Cline-bench is an open source benchmark initiative designed to create realistic reinforcement learning environments based on real-world open source development work.

It aims to address the inadequacy of current coding benchmarks, which often rely on simplistic tasks, by offering research-grade environments that reflect true engineering challenges.

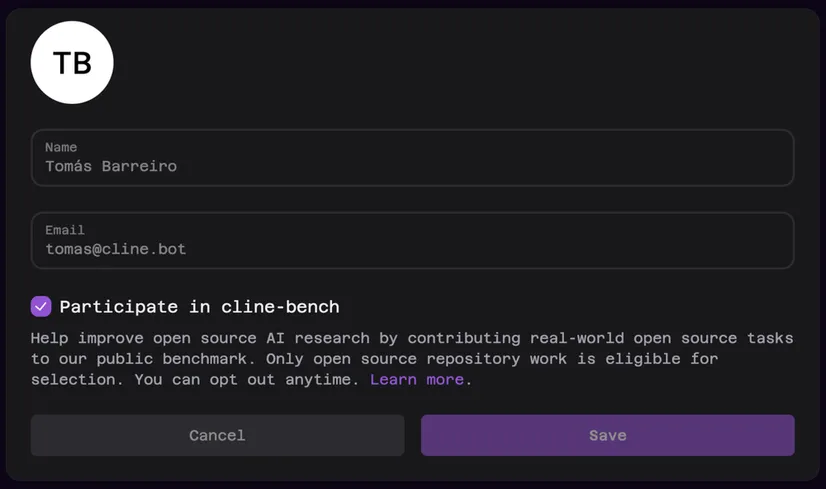

Engineers and developers can contribute by using the Cline Provider on open source projects, where challenging tasks that require manual intervention become candidates for inclusion.

Only open source repositories are eligible to ensure transparency and reproducibility.

A $1M sponsorship program supports developers who contribute real-world tasks, offering Cline Open Source Builder Credits to enhance their workflow.

Cline-bench is stirring the pot in the coding benchmark scene. This open-source project is on a mission to create reinforcement learning environments that truly reflect the real-world hurdles developers face. Where typical benchmarks lean on artificial tasks, cline-bench, according to Cline, dives headfirst into the nitty-gritty of actual engineering work. And to sweeten the deal, Cline has put up a cool $1 million to support open-source maintainers.

What really makes cline-bench stand out, according to its creators, is its focus on genuine engineering tasks rather than synthetic puzzles. The environments it offers are crafted to mirror real engineering constraints, complete with repository starting snapshots and authentic problem definitions. It's all about capturing the essence of real-world coding challenges. Plus, these environments are reproducible and stick to modern open-source standards, making them a solid choice for researchers seeking reliable evaluations.
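To make "repository starting snapshot plus problem definition" a bit more concrete, here is a minimal Python sketch of what one such environment record might look like. This is not the cline-bench schema, and the environment structure documentation is not quoted here. The `BenchTask` class, its field names, and the `is_reproducible` check are all hypothetical, chosen only to illustrate the idea of pinning a task to an exact commit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BenchTask:
    """Hypothetical task record; NOT the actual cline-bench format."""
    repo_url: str           # public open source repository (assumed field)
    start_commit: str       # repository starting snapshot, as a full commit hash
    problem_statement: str  # the problem definition as originally posed


def is_reproducible(task: BenchTask) -> bool:
    """Treat a task as reproducible if it pins an exact 40-character
    hexadecimal commit hash and has a non-empty problem statement.
    This criterion is an assumption made for illustration."""
    commit = task.start_commit.lower()
    pinned = len(commit) == 40 and all(c in "0123456789abcdef" for c in commit)
    return pinned and bool(task.problem_statement.strip())
```

Pinning to a full commit hash rather than a branch name is what would let two researchers start from byte-identical repository state; a branch such as `main` moves over time and would fail the check above.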

The tasks in cline-bench aren't just plucked from thin air. They're drawn from real open-source work, especially those tricky tasks where models need a human touch or just can't cut it. Only open-source repositories make the cut, ensuring transparency and open scientific progress. Private repositories? They're not part of the deal, as the initiative is all about openness and reproducibility.
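The selection rule described above (open-source only, and preferably tasks where the model needed help or failed) can be sketched as a simple filter. The article does not say how cline-bench actually decides eligibility, so the `Session` fields and the `is_candidate` function below are assumptions made purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """Hypothetical summary of one coding session; all fields are assumed."""
    repo_is_open_source: bool
    needed_human_intervention: bool
    model_completed_task: bool


def is_candidate(session: Session) -> bool:
    """Return True if the session looks like a benchmark candidate under
    the assumed rule: open source repo AND (human stepped in OR model failed)."""
    if not session.repo_is_open_source:
        # Private repositories are excluded outright.
        return False
    return session.needed_human_intervention or not session.model_completed_task
```

Under this assumed rule, a session the model finished unaided in an open-source repository would not be a candidate, since it says little about where models currently struggle.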

Developers can jump into the cline-bench action by using the Cline Provider on open-source projects or by manually submitting tasks from open-source repositories. The initiative is calling on those who tackle challenging real-world problems to contribute, as these tasks are perfect for cline-bench. The ultimate aim? To provide reliable model evaluations, promote open scientific progress, and offer training data for further research. It's all about making coding agents more reliable and effective in real engineering settings.

Key Numbers

$1M: the amount committed to support open source maintainers contributing to the cline-bench initiative.

Stakeholder Relationships

Organizations

Supports and uses cline-bench to advance the frontier of coding capabilities.

Involved in the cline-bench initiative to enhance AI research with practical evaluations.

Participates in the cline-bench initiative to push the boundaries of autonomous software development.

Timeline of Events

An open source benchmark initiative aimed at creating realistic reinforcement learning environments based on real-world open source development work.

A program to support developers contributing real-world tasks to cline-bench.

Plans to publish contribution guidelines, environment structure documentation, and an early tranche of open source cline-bench tasks.

FAUN.dev()

Kala #GenAI
