Machine Learning

Machine Learning is the subfield of computer science that gives computers the ability to learn without being explicitly programmed.

A machine learns by:

- Extracting knowledge from data

- Learning from past behavior to make predictions or decisions
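As a minimal sketch of this idea, the snippet below "learns" from a handful of labelled past observations and predicts the label of a new one with a 1-nearest-neighbour rule. The data, the meaning of the features, and the function name `predict_nearest` are illustrative assumptions, not part of any particular library or of Azure.

```python
import math

# Hypothetical past observations: (hours_studied, hours_slept) -> outcome.
past_data = [
    ((1.0, 4.0), "fail"),
    ((2.0, 5.0), "fail"),
    ((6.0, 7.0), "pass"),
    ((8.0, 8.0), "pass"),
]

def predict_nearest(features, examples):
    """Return the label of the stored example closest to `features`."""
    nearest = min(examples, key=lambda ex: math.dist(ex[0], features))
    return nearest[1]

# Predict outcomes for two new, unseen observations.
print(predict_nearest((7.0, 6.5), past_data))
print(predict_nearest((1.5, 4.5), past_data))
```

Nothing in the code states a rule such as "more than five hours of study means pass"; the prediction comes entirely from the stored examples, which is the sense in which the program is not explicitly programmed.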

Machine Learning is broadly divided into three categories:

- Supervised Machine Learning

- Unsupervised Machine Learning

- Reinforcement Learning

It also covers different types of models:

- Classification

- Regression

- Clustering

- Anomaly Detection
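To make two of these model types concrete, here is a small sketch using only the Python standard library: a least-squares line fit as an example of regression, and a standard-deviation rule as an example of anomaly detection. The function names, the sample numbers, and the threshold of 2.0 are assumptions chosen for illustration; real projects would normally rely on a dedicated ML library.

```python
import statistics

def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept (a regression model)."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def find_anomalies(values, threshold=2.0):
    """Flag values more than `threshold` standard deviations from the mean."""
    mean = statistics.fmean(values)
    stdev = statistics.pstdev(values)
    return [v for v in values if abs(v - mean) > threshold * stdev]

# Regression: the points lie on y = 2x + 1, so the fit recovers that line.
slope, intercept = fit_line([1, 2, 3, 4], [3, 5, 7, 9])
print(slope, intercept)

# Anomaly detection: 50 stands far apart from the other readings.
print(find_anomalies([10, 11, 9, 10, 12, 10, 50]))
```

Classification and clustering follow the same pattern of fitting a model to data: classification predicts a discrete label, while clustering groups unlabelled points by similarity.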

Now let’s understand how Microsoft Azure supports Machine Learning.

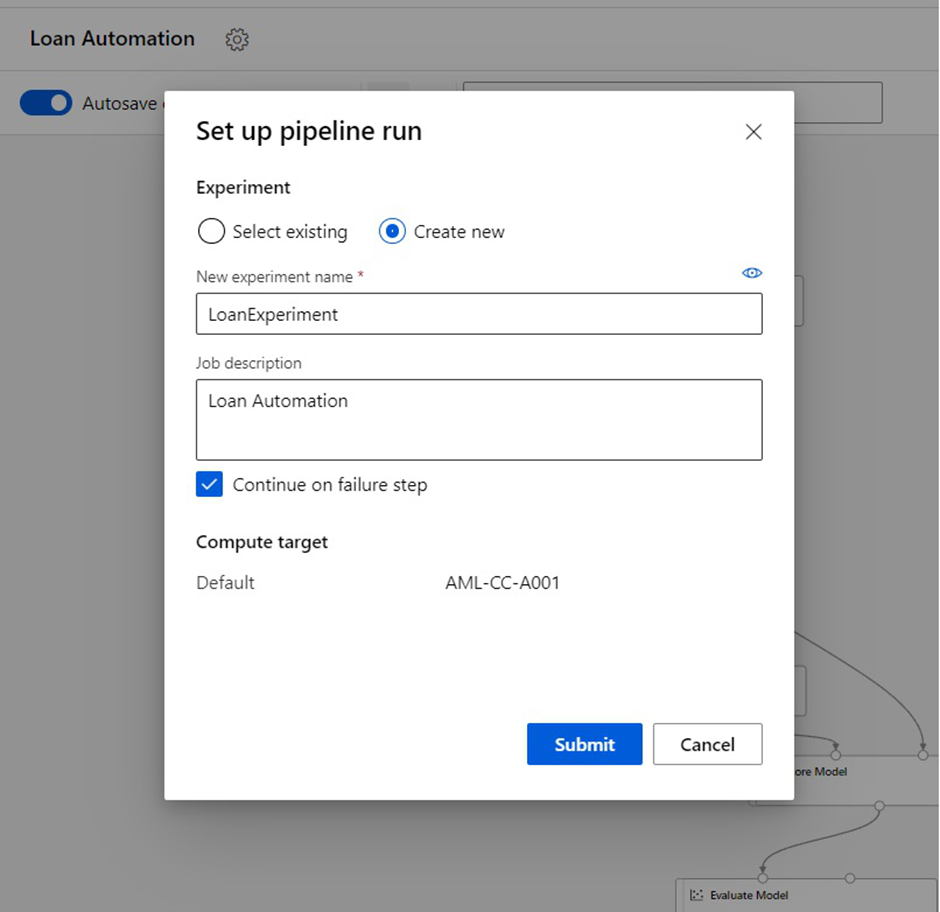

Microsoft Azure Machine Learning Service

Microsoft Azure is a cloud platform for building, testing, deploying, and managing applications and services. It provides tools such as AzureML Studio for creating complete Machine Learning solutions in the cloud. One of the best features of AzureML Studio is that basic ML experiments can be built and deployed quickly without writing a single line of code. Trained models can be deployed as web services. The studio also provides a large library of pre-built Machine Learning algorithms and modules, and it lets developers extend existing models with custom R and Python code.
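Because a deployed model is exposed as a web service, a client typically sends it a JSON request over HTTPS. The sketch below only builds such a request and does not send it. The endpoint URL, the API key, the column names, and the payload layout (`Inputs`, `input1`, `ColumnNames`, `Values`, `GlobalParameters`) are all assumptions for illustration; the actual schema and authentication details should be taken from the API help page of the deployed service.

```python
import json
import urllib.request

def build_scoring_request(url, api_key, column_names, rows):
    """Build (but do not send) a POST request for a deployed scoring service."""
    # Assumed payload layout; verify it against the service's own API page.
    payload = {
        "Inputs": {"input1": {"ColumnNames": column_names, "Values": rows}},
        "GlobalParameters": {},
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": "Bearer " + api_key,
        },
        method="POST",
    )

# Hypothetical endpoint, key, and input columns, for illustration only.
request = build_scoring_request(
    "https://example.invalid/score",
    "YOUR_API_KEY",
    ["age", "income"],
    [[35, 52000]],
)
# To actually call the service: urllib.request.urlopen(request).read()
```

Keeping request construction separate from sending makes it easy to inspect the payload before pointing the code at a real endpoint.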

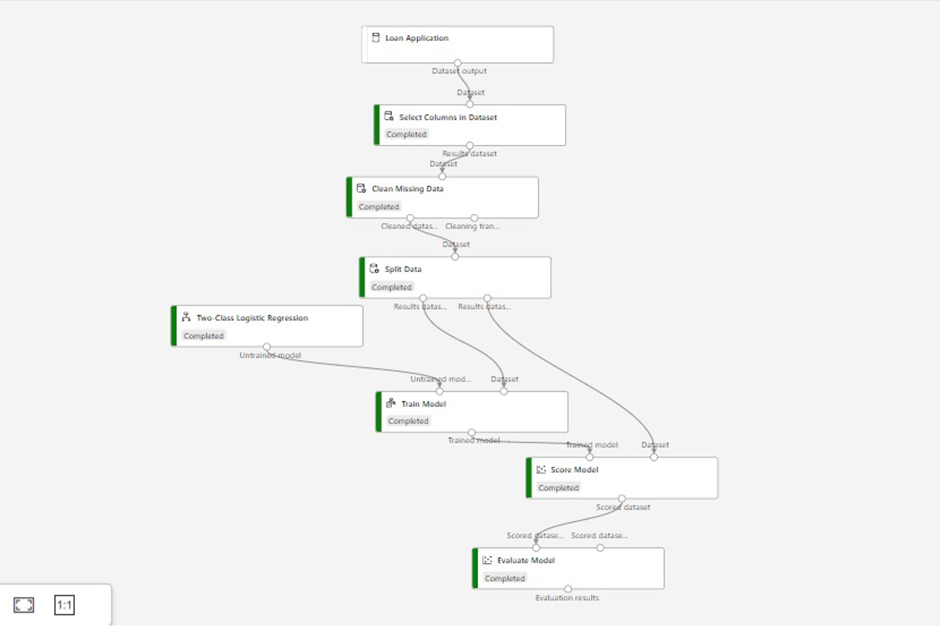

Workflow of Azure ML