Knative is a serverless environment that helps you deploy code to Kubernetes. It gives you the flexibility that no resources are consumed while your service is not in use. Basically, your code only runs when it is being consumed. Please see the official link below for more details on Knative.

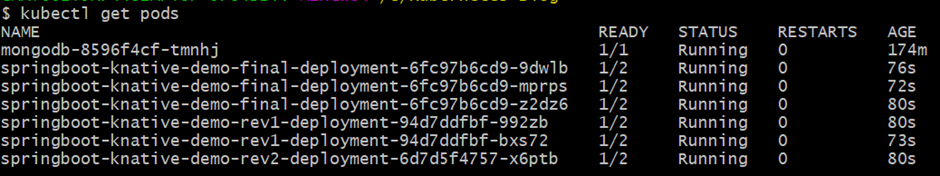

Let’s jump into the demo to get a practical idea. You can find several samples and code snippets for Knative with Spring Boot and Kubernetes, but here I try to explain a basic, working example of Knative with Spring Boot on a Kubernetes cluster. I also touch upon a few interesting features such as ‘Scaling to Zero’, ‘Autoscaling’, ‘Revision’, and ‘traffic distribution’.

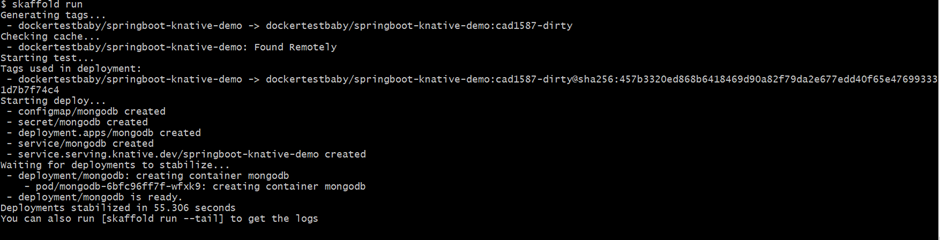

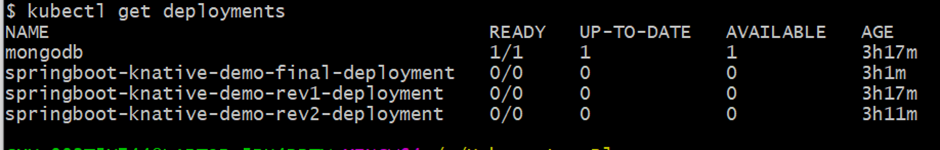

For the demo, I will use a Spring Boot application that exposes a REST API and connects to a MongoDB database. It’s a very simple application that is deployed on the Kubernetes cluster.

Prerequisites:

I used a laptop with Windows 10, so some of the prerequisite links are specific to Windows 10. Others are generic, and you can use them on a Linux or Mac machine. You do not have to use these exact tools for the prerequisites; you can use your own toolset as well. If you want to follow the same toolset that I mention here, that is also fine.

Docker Desktop:

Install Docker Desktop on Windows | Docker Documentation

Minikube:

minikube start | minikube (k8s.io)

kubectl CLI:

Skaffold CLI for automation:

Installing Skaffold | Skaffold

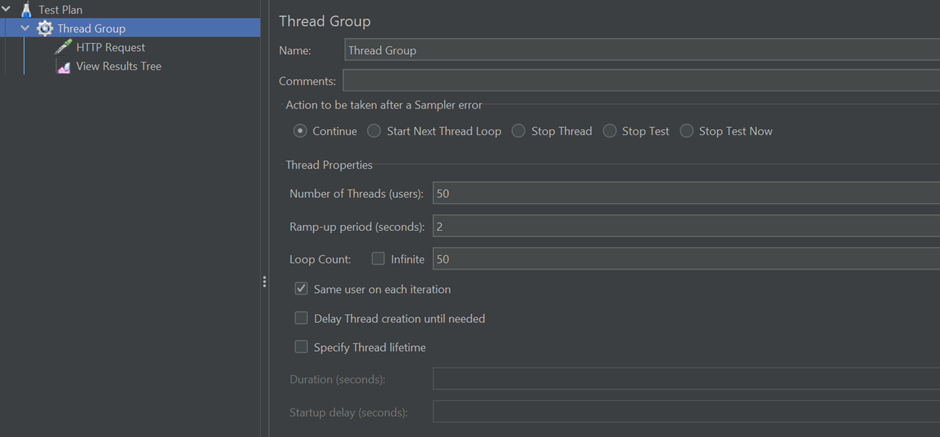

JMeter for load testing:

Apache JMeter — Apache JMeter™

Knative CLI on Windows:

Installing the Knative CLI — CLI tools | Serverless | OpenShift Container Platform 4.9
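Once the tools above are installed, a quick check that they are reachable from your terminal can save some debugging later. The following is only a minimal sketch, assuming a Bash-compatible shell (for example Git Bash or WSL on Windows 10) and assuming the tools are exposed on your PATH under the names `docker`, `minikube`, `kubectl`, `skaffold`, `kn`, and `jmeter`. The `check_tools` helper is a hypothetical name used here for illustration; it is not part of any of these tools.

```shell
#!/usr/bin/env bash
# Minimal sketch: report which prerequisite CLIs are reachable on PATH.
# check_tools is a hypothetical helper name used for illustration only.
check_tools() {
  local missing=0
  for tool in "$@"; do
    # command -v succeeds only if the shell can resolve the name
    if command -v "$tool" >/dev/null 2>&1; then
      echo "found: $tool"
    else
      echo "missing: $tool"
      missing=1
    fi
  done
  # Non-zero exit status if at least one tool was not found
  return "$missing"
}

# Assumed executable names; adjust them to match your own installation.
check_tools docker minikube kubectl skaffold kn jmeter \
  || echo "Install the missing tools before continuing."
```

If you use a different toolset, pass your own tool names to `check_tools` instead.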

Install Knative Serving on Kubernetes:

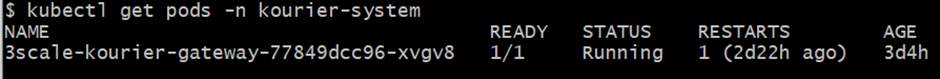

Before we start with the Spring Boot application deployed to the K8s cluster and leverage the Knative features, let’s enable the Knative Serving component in our minikube cluster. There are various ways to install and configure the Knative Serving component in a cluster. Please see the link below for more details.

I followed the steps below to install Knative Serving on my machine. In this article, I focus only on the Knative Serving component.

Start Minikube: