Behind the Scenes of Chatting with Copilot

Embeddings: The Building Blocks of Contextual Understanding

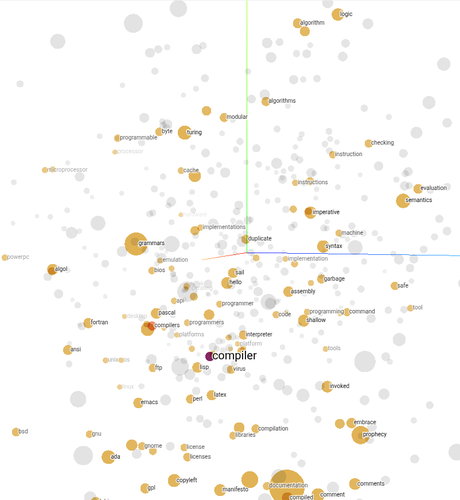

To make sense of text, the AI converts words and sentences into embeddings: numerical vectors that capture the meaning of the text. For example, the words "compiler," "code," and "programming" end up close to each other in this vector space because their meanings are related.

In a 3D embedding space, the word "compiler" might be represented by the coordinates (0.8, 0.6, 0.1), while "code" could be at (0.7, 0.5, 0.2), and "programming" at (0.9, 0.7, 0.3). These points are spatially close and form a cluster, which indicates their semantic similarity.

In contrast, a word like "garden" might be located at (0.1, 0.1, 0.2), far away from the programming-related terms, reflecting its different meaning.
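To make "close" and "far away" concrete, here is a minimal sketch that measures the straight-line (Euclidean) distance between the example points above. The coordinates are the illustrative values from the text, not output from a real model, and the `embeddings` dictionary and `distance` helper are names invented for this example.

```python
import math

# Illustrative 3D coordinates from the text; real embeddings
# typically have hundreds or thousands of dimensions.
embeddings = {
    "compiler": (0.8, 0.6, 0.1),
    "code": (0.7, 0.5, 0.2),
    "programming": (0.9, 0.7, 0.3),
    "garden": (0.1, 0.1, 0.2),
}


def distance(word_a, word_b):
    """Euclidean distance between two words in the toy embedding space."""
    return math.dist(embeddings[word_a], embeddings[word_b])


# Compare "compiler" with every other word in the toy space.
for other in ("code", "programming", "garden"):
    print(f"compiler -> {other}: {distance('compiler', other):.3f}")
```

With these values, the distance from "compiler" to "garden" comes out several times larger than the distance to either programming-related term, which is what the clustering in the text describes.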

Below is a simplified illustration of a 3D embedding space, showing only a few neighboring points to keep the visualization readable:

Embedding Space

For an interactive visualization of embeddings, see the TensorFlow Embedding Projector at projector.tensorflow.org.

When you ask a question, the AI converts your request into an embedding and compares it with the stored embeddings of the conversation so far. It uses cosine similarity, which measures how closely two vectors point in the same direction, to find the most relevant parts of the conversation and incorporates that context before generating a response. This allows it to link your current question to earlier parts of the discussion.
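The retrieval step described above can be sketched roughly as follows. This is a simplified illustration, not Copilot's actual implementation: the function names, the `history` structure, and the hand-picked 3D vectors are all assumptions made for the example, and a real system would obtain its embeddings from a model.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_relevant(query_vec, history, top_k=1):
    """Return the top_k history messages most similar to the query."""
    ranked = sorted(
        history,
        key=lambda item: cosine_similarity(query_vec, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:top_k]]


# Hypothetical conversation history: (message, toy embedding) pairs.
history = [
    ("How do I fix this compiler error?", (0.8, 0.6, 0.1)),
    ("What should I plant in my garden?", (0.1, 0.1, 0.2)),
]

# Hypothetical embedding for a new question such as "Why won't my code build?"
query = (0.7, 0.5, 0.2)

print(most_relevant(query, history))
```

Here the compiler-related message ranks first because its vector points in nearly the same direction as the query. Cosine similarity ignores vector length and compares direction only, which is one reason it is a common choice for comparing embeddings.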
