Microservices Deployment Strategies: Blue/Green, Canary, and Rolling Updates

Deployment Strategies

Blue/Green Deployment: Introduction

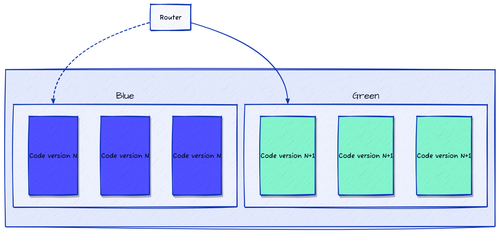

The Blue/Green deployment strategy involves running two production environments — one active (Blue) and one idle (Green). The Blue environment represents the current version of the application, while the Green environment hosts the new version you want to deploy.

A service or routing layer manages traffic between these two environments. While users continue interacting with the Blue environment, you can safely deploy and test the Green version in parallel.

Once the new version is verified, you simply switch the router (or load balancer) to send all traffic to the Green environment — achieving a zero-downtime deployment. If any issues arise, rolling back is as simple as redirecting traffic back to the Blue environment.

Blue/Green deployment

We can compare this strategy to a train switching tracks. The Blue track is the current route, while the Green track is the new one. The train (representing user traffic) continues on the Blue track while the Green track is prepared. Once ready, the person in charge switches the train to the Green track. If any issues arise, they can easily switch back to the Blue track.

In a practical scenario, imagine you have a Blue environment that is already running in production:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: blue-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: blue-app
  template:
    metadata:
      labels:
        app: blue-app
    spec:
      containers:
        - name: app-container
          image: myregistry/blue-app:1.0

To deploy the new version of the application, replicate the Blue production environment to create a Green environment that runs the new image:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: green-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: green-app
  template:
    metadata:
      labels:
        app: green-app
    spec:
      containers:
        - name: app-container
          image: myregistry/green-app:2.0

Once the Green environment has the new version of the application, test it. If the testing is successful, switch your router to target the Green environment. If any issues are detected, roll back to the Blue version.
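In plain Kubernetes, one way to picture the "router" is a Service that sits in front of both Deployments and picks Pods by label. The sketch below is an illustration under assumptions, not part of the original setup: the Service name `my-app` and the ports 80 and 8080 are hypothetical. Only the `app` label values come from the two Deployments above.

```yaml
# Hypothetical Service acting as the switch between Blue and Green.
apiVersion: v1
kind: Service
metadata:
  name: my-app            # assumed name, not from the original manifests
spec:
  selector:
    # Points at Blue while Green is being tested.
    # Change to green-app to cut over; change back to blue-app to roll back.
    app: blue-app
  ports:
    - protocol: TCP
      port: 80            # assumed Service port
      targetPort: 8080    # assumed container port
```

Re-applying this manifest with the selector changed sends new connections to the other environment. Both Deployments keep running throughout, so a rollback is the same edit in reverse.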

Several tools can automate or enhance Blue/Green workflows. Here are some examples:

- Istio – A service mesh that adds traffic management, observability, and security, and is well suited to progressive rollouts.

- Spinnaker – A continuous delivery platform that supports advanced deployment strategies across multiple clouds.

- Jenkins – A flexible automation server capable of orchestrating Blue/Green pipelines.

- AWS CodeDeploy – A managed service that automates deployments to Amazon EC2, Amazon ECS (including Fargate), AWS Lambda, and on-premises servers; its Blue/Green deployment type covers EC2, ECS, and Lambda.

- Google Cloud Deploy – A managed service for automating deployments of applications and infrastructure on Google Cloud.

The Blue/Green strategy is a popular choice for many use cases due to its simplicity and effectiveness. If done correctly, it offers major advantages, including:

- Zero downtime during upgrades

- Instant rollbacks if something fails

- Safer testing in production

- Strong isolation between versions

However, it comes with trade-offs. Maintaining two identical environments increases cost and complexity, and large-scale data migrations may not fit easily within this model. This strategy is a good fit for applications that demand reliability and minimal disruption, but it is less suited to systems with heavy, stateful dependencies or tight cost constraints.

Canary Deployment: The Old Miners' Technique

In the late 19th and early 20th centuries, coal miners often brought canaries underground with them. These small birds are more sensitive than humans to toxic gases such as carbon monoxide, which is colorless, odorless, and potentially deadly, so miners used them as an early warning system. If the canary showed signs of distress or died, dangerous gases were present and the miners evacuated immediately.

We are not going to harm any canaries here, but the idea behind canary deployment is similar. It reduces the risk of introducing a new software version in production by rolling the change out gradually: first to a "canary" group, typically a small percentage of the total user base, and only then to everyone. If the new version proves stable, it is rolled out to all users. If issues are detected, we roll back quickly with minimal impact.

Canary deployment is a progressive delivery strategy that allows for controlled testing in production and quick rollback.

It can be performed manually (not recommended) or with the help of advanced tools such as Istio. We are going to see this tool in action in the next section.
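To make the manual approach concrete, here is a minimal sketch that approximates a 90/10 split using replica counts alone. Everything in it is hypothetical (the `myapp` names, the `track` label, the images, and the ports). It relies on the fact that a Service spreads connections roughly evenly across all Pods matching its selector.

```yaml
# Stable version: 9 of the 10 Pods behind the Service.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-stable            # hypothetical name
spec:
  replicas: 9
  selector:
    matchLabels:
      app: myapp
      track: stable
  template:
    metadata:
      labels:
        app: myapp
        track: stable
    spec:
      containers:
        - name: myapp
          image: myregistry/myapp:1.0   # hypothetical image
---
# Canary version: 1 of the 10 Pods, so roughly 10% of requests.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-canary            # hypothetical name
spec:
  replicas: 1
  selector:
    matchLabels:
      app: myapp
      track: canary
  template:
    metadata:
      labels:
        app: myapp
        track: canary
    spec:
      containers:
        - name: myapp
          image: myregistry/myapp:2.0   # hypothetical image
---
# The Service selects on the shared label only, so it matches both tracks.
apiVersion: v1
kind: Service
metadata:
  name: myapp
spec:
  selector:
    app: myapp
  ports:
    - port: 80
      targetPort: 8080          # assumed container port
```

The split here is only approximate and is tied to the number of Pods, which is one reason a traffic-aware tool gives finer control: it can set percentages independently of replica counts.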

Canary Deployment: A Practical Example with Istio

Istio is a Swiss Army knife for managing microservices in Kubernetes. It provides a service mesh that adds traffic management, observability, and security features to your applications without requiring changes to the application code. It's particularly useful for implementing advanced deployment strategies like canary deployments. In this section, we will walk through a practical example.

Let's start by installing Istio in our cluster. First, download the installer files by running the following command:

# Create a directory for Istio installation
mkdir -p $HOME/istio && cd $HOME/istio

# Download Istio
curl -L https://istio.io/downloadIstio | \
  ISTIO_VERSION=1.27.2 \
  TARGET_ARCH=$(uname -m) \
  sh -

Next, add the Istio bin directory to your system PATH:

cd $HOME/istio/istio-1.27.2
export PATH=$PWD/bin:$PATH

Perform a pre-check of your environment by running the following command:

istioctl x precheck

Continue the Istio installation by executing the following command:

istioctl install --set profile=demo -y

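We are using the demo profile, which is designed to showcase Istio's features and is a convenient starting point for learning and testing. For production clusters, the default profile is the recommended baseline. You can find more information about the different profiles in the official documentation.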
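If you prefer to keep the installation settings in a file rather than in command-line flags, the same profile selection can be written as an IstioOperator resource. This is a minimal sketch; the file name used below is an assumption.

```yaml
# Declarative equivalent of `--set profile=demo`.
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  profile: demo
```

Saved as, say, `demo-profile.yaml` (a hypothetical name), it can be passed to `istioctl install -f demo-profile.yaml -y` in place of the `--set` flag.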

The command above installs Istio and creates the necessary resources in the Kubernetes cluster, including an Istio Ingress Gateway. As a result, we no longer need the NGINX Ingress Controller that was installed in the previous sections. You can remove it by running the following command (if it's not already removed):

helm delete nginx-ingress

To have Istio automatically inject Envoy sidecar proxies into the Pods you deploy later, label the target namespace:

kubectl label namespace default istio-injection=enabled

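Istio uses sidecar containers to augment the functionality of your main container. Its admission webhook looks for the istio-injection=enabled label on a namespace and automatically injects the sidecar into Pods created in that namespace. Pods that were already running before the label was added are not changed until they are recreated.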
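The label can also be expressed declaratively, which is handy when namespaces are managed as manifests. A minimal sketch for the `default` namespace used in the command above:

```yaml
# Namespace carrying the label that turns on sidecar injection.
apiVersion: v1
kind: Namespace
metadata:
  name: default
  labels:
    istio-injection: enabled
```

For a dedicated application namespace, the same `labels` entry would go on that namespace's manifest instead.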

ℹ️ Sidecar containers in Kubernetes are additional containers that run alongside the main container within a Pod. They're designed to enhance and extend the functionality of the main container without modifying its codebase. Common use cases for sidecar containers include logging, monitoring, security, and networking. For example, a sidecar container might handle log collection and forwarding, monitor application health, manage network traffic, or provide security features like authentication and encryption.

In our context, the Istio sidecar container is responsible for intercepting network traffic to and from the main container. It enables advanced service mesh features such as traffic management. Acting as a proxy, it implements the data plane functionality of Istio. The sidecar architecture enables Istio to provide powerful capabilities such as traffic routing, load balancing, circuit breaking, and telemetry without requiring changes to the application's code.

Our plan is to deploy two versions of a simple application and use Istio to route a small percentage of traffic to the new version (the canary) while the majority of traffic continues to go to the stable version.

Here is what the first version of the application looks like: it uses the gcr.io/google-samples/hello-app:1.0 image. The container is a simple web server that responds with "Hello, world!", the version number, and the hostname (Pod name):

apiVersion: apps/v1
kind: Deployment
metadata:
  name: helloapp-v1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: helloapp
      version: v1
  template:
    metadata:
      labels:
        app: helloapp
        version: v1
    spec:
      containers:
        - name: hello-app-v1
          image: gcr.io/google-samples/hello-app:1.0
          imagePullPolicy: Always
          ports:
            - containerPort: 8080

This is the second version of the application, which uses the gcr.io/google-samples/hello-app:2.0 image:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: helloapp-v2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: helloapp
      version: v2
  template:
    metadata:
      labels:
        app: helloapp
        version: v2
    spec:
      containers:
        - name: hello-app-v2
          image: gcr.io/google-samples/hello-app:2.0
          imagePullPolicy: Always
          ports:
            - containerPort: 8080

To expose both versions of the application, we create a ClusterIP Service:

apiVersion: v1
kind: Service
metadata:
  name: helloapp
spec:
  selector:
    app: helloapp
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080

At this stage, the Service selects Pods by the app: helloapp label only, so it load-balances across both versions. With one replica each, that is roughly an even split. However, we want to control this traffic distribution.

Let's move to the next step and create an Istio Gateway resource. But first, we need to obtain the IP address of the Istio Ingress Gateway. We can do this by running the following script that waits until the IP address is assigned:

# Poll until the Istio Ingress Gateway Service has an external IP
while true; do
  export INGRESS_IP=$(
    kubectl get svc -n istio-system istio-ingressgateway \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
  )
  # -z is true when the variable is empty (IP not assigned yet)
  if [ -z "$INGRESS_IP" ]; then
    echo "IP address is still pending. Waiting..."
    sleep 10
  else
    echo "Ingress IP is set to $INGRESS_IP"
    break
  fi
done

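Next, we define the Gateway resource, which determines how traffic enters the service mesh. Note that kubectl does not expand shell variables, so ${INGRESS_IP} in the manifest must be substituted before it is applied (for example, by passing the manifest through a shell heredoc or envsubst):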

# The Gateway configures how traffic enters the service mesh.
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: istio-gateway
spec:
  selector:
    istio: ingressgateway
  servers:
    - port:
        number: 80
        name: http
        protocol: HTTP
      hosts:
        - "app.${INGRESS_IP}.nip.io"

ℹ️ In Istio, the role of the Gateway object is to define the hosts and ports that will be exposed outside the service mesh.

Next, we need to define a VirtualService resource and the rules for routing:

# VirtualService defines the routing rules for the application.
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: helloapp
spec:
  hosts:
    - "app.${INGRESS_IP}.nip.io"
  gateways:
    - istio-gateway
  http:
    - route:
        # We define two routes with different weights
        - destination:
            host: helloapp
            # The subset name identifies the version
            subset: v1
          # We route 90% of the traffic to version 1
          weight: 90
        - destination:
            host: helloapp
            subset: v2
          # We route 10% of the traffic to version 2
          weight: 10

- spec.hosts: This field specifies the hostnames that the VirtualService will respond to.

- spec.gateways: This field specifies the gateways that will be used to route traffic to the VirtualService.
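The routes in the VirtualService refer to subsets named v1 and v2. In Istio, subsets are declared in a DestinationRule for the same host, which maps each subset name to a set of Pod labels. The following is a minimal sketch of such a rule, assuming the resource name `helloapp` (an assumption) and the `version` labels from the two Deployments shown earlier:

```yaml
# DestinationRule mapping subset names to the Pods' version labels.
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: helloapp          # assumed resource name
spec:
  host: helloapp          # the Kubernetes Service defined earlier
  subsets:
    - name: v1
      labels:
        version: v1
    - name: v2
      labels:
        version: v2
```

Without subset definitions like these, the mesh has no way to resolve `subset: v1` and `subset: v2` to concrete Pods, so the weighted routes cannot be honored.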
