Deploying and Managing Services Using Rancher Manager - Part I

LoadBalancer Service, Cloud Providers, Bare Metal and ServiceLB (Klipper)

A LoadBalancer Service exposes an application (a set of Pods) through a load balancer that runs outside the cluster and acts as a single point of entry. Kubernetes defines the LoadBalancer Service type but does not ship a built-in implementation for it. Instead, it provides an interface that cloud providers implement. To dynamically provision a cloud load balancer (such as an AWS Network or Classic Load Balancer, a Google Cloud load balancer, or an Azure Load Balancer), the cloud provider must implement this interface. With a supported cloud provider, as soon as you create a LoadBalancer Service, an external load balancer is provisioned, configured, and pointed at the cluster nodes to forward traffic to your application.

Behind the scenes, when you create a LoadBalancer Service, Kubernetes first sets it up like a ClusterIP Service (it gets a cluster-internal IP that forwards traffic to the Pods) and like a NodePort Service (it opens the same port on every node). It then relies on a component called the Cloud Controller Manager, and more specifically on the Service Controller (one of the Cloud Controller Manager's controllers), to call the cloud provider's API and create, update, and delete the matching cloud load balancer.
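To make this concrete, the following sketch writes a minimal LoadBalancer Service manifest to a local file. The Service name (my-app-lb), the Pod label (app: my-app), and the ports are hypothetical placeholders; adjust them to match your own Pods before applying the file.

```shell
# Write a minimal LoadBalancer Service manifest to a file.
# my-app-lb, app=my-app, and ports 80/8080 are hypothetical placeholders.
cat << EOF > my-app-lb.yaml
apiVersion: v1
kind: Service
metadata:
  name: my-app-lb
spec:
  type: LoadBalancer      # ClusterIP + NodePort + an external load balancer
  selector:
    app: my-app           # Pods labeled app=my-app receive the traffic
  ports:
    - port: 80            # port exposed by the Service and the load balancer
      targetPort: 8080    # container port on the Pods
      protocol: TCP
EOF

# Apply it to the cluster (requires a configured kubectl):
#   kubectl apply -f my-app-lb.yaml
# Watch for the external address; it stays <pending> until a controller
# (a cloud provider's CCM, ServiceLB, ...) provisions one:
#   kubectl get service my-app-lb --watch
```

Kubernetes allocates the node port automatically; once the Service exists, the PORT(S) column of kubectl get service shows it in the form 80:<nodePort>/TCP, which is the NodePort behavior described above.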

ℹ️ The Cloud Controller Manager (CCM) is a Kubernetes component designed to integrate cloud-specific features and services into a Kubernetes cluster. It abstracts interactions with the cloud provider and allows Kubernetes to be agnostic of the underlying technologies. This is one of the reasons why I call Kubernetes the Cloud Operating System.

If you want to use a cloud-managed load balancer for your LoadBalancer Services, there's no single set of instructions that works for all cloud providers. Check your cloud provider's documentation to see how to install the Cloud Controller Manager and how to integrate it with your Kubernetes cluster. In this case, creating the cluster from Rancher Manager with the cloud provider integration enabled is the easiest way to go. The Rancher documentation covers several providers, for example:

- Setting up the Amazon Cloud Provider

- Setting up the Azure Cloud Provider

- Setting up the GCE Cloud Provider

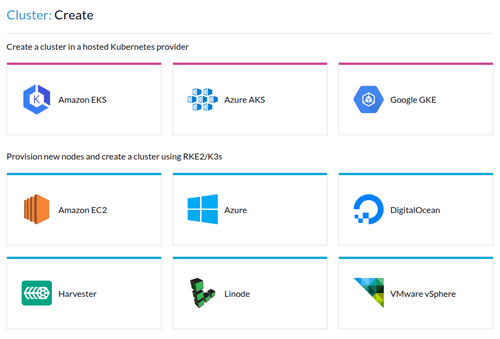

Depending on your use case, you may not want to manage all the complexity of integrating with a cloud provider yourself. In that case, you can create a managed Kubernetes cluster directly from Rancher Manager; these clusters come with the provider's load balancer integration already in place. By default, Rancher Manager integrates with AWS's EKS, Azure's AKS, and Google's GKE, but you can also enable integrations with other cloud providers such as Alibaba's ACK, Oracle's OKE, Tencent's TKE, and more.

Cloud Provider Integration

For private clouds or on-premises environments, you can use Harvester on bare-metal servers as a cloud provider, or integrate a bare-metal load balancer implementation such as MetalLB with your cluster.

In our case, however, there's a simpler way to create a LoadBalancer Service without using a cloud provider: ServiceLB.

ServiceLB (Klipper)

ServiceLB, previously known as Klipper LB, is a load balancer controller embedded in K3s (and also available in RKE2) that implements LoadBalancer Services without the hassle of integrating with a cloud provider. Its simple design makes it easy to use, and it's a good choice for bare-metal, edge, or lab environments that need LoadBalancer Services but have no cloud load balancer available.

When you create a LoadBalancer Service in Kubernetes, the ServiceLB controller deploys a DaemonSet in the kube-system namespace. This DaemonSet creates a LoadBalancer Pod (with a svclb- prefix) on each node in the cluster. These Pods use hostPort to bind the Service's port on the node and forward incoming traffic to the Service, and the IPs of the nodes running them are reported as the Service's external IPs.

ℹ️ The LoadBalancer Pods will only be deployed on nodes where the required hostPort is available. If no nodes have the port available, the LoadBalancer will remain in a Pending state.
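Once ServiceLB is running, you can check where its Pods landed and spot the Pending case described in the note. The function below is a hypothetical helper, not part of RKE2 or K3s: it only filters kubectl output, and the kubectl custom-columns query shown in its comments is an assumption you may need to adapt.

```shell
# Hypothetical helper (not part of RKE2/K3s): summarize ServiceLB pods.
# Reads "NAME NODE PHASE" lines on stdin, such as the output of:
#   kubectl get pods -n kube-system --no-headers \
#     -o custom-columns=NAME:.metadata.name,NODE:.spec.nodeName,PHASE:.status.phase
# A Pending svclb pod often means its hostPort is already taken on the candidate nodes.
svclb_summary() {
  awk '$1 ~ /^svclb-/ {
    if ($3 == "Pending") {
      print $1 " is Pending (check hostPort availability)"
    } else {
      print $1 " on " $2 " (" $3 ")"
    }
  }'
}

# Usage (with a configured kubectl):
#   kubectl get pods -n kube-system --no-headers \
#     -o custom-columns=NAME:.metadata.name,NODE:.spec.nodeName,PHASE:.status.phase \
#     | svclb_summary
```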

ServiceLB is disabled by default in RKE2, so you need to enable it first. Follow these steps:

```shell
# SSH into the control plane node
ssh root@$WORKLOAD_CONTROLPLANE_01_PUBLIC_IP

# Enable the servicelb feature
# (append to the RKE2 config file)
cat << EOF >> /etc/rancher/rke2/config.yaml
enable-servicelb: true
EOF

# Restart the RKE2 service
systemctl restart rke2-server
```
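The heredoc above appends unconditionally, so running it twice leaves a duplicate enable-servicelb entry in config.yaml. If you script this step, a minimal sketch of an idempotent variant could look like this. ensure_rke2_option is a hypothetical helper name, and it assumes the config file is a flat list of top-level key: value lines.

```shell
# Hypothetical helper (not an RKE2 command): set a top-level option in a flat
# RKE2 config file only if that option is not already present.
# Assumes one "key: value" pair per line, as in /etc/rancher/rke2/config.yaml.
ensure_rke2_option() {
  local file="$1" key="$2" value="$3"

  # Create the file if it does not exist yet.
  [ -f "$file" ] || : > "$file"

  # Leave existing settings alone (even with a different value); edit those by hand.
  if grep -q "^${key}:" "$file"; then
    echo "${key} is already set in ${file}; not appending" >&2
    return 0
  fi

  # Add a trailing newline first if the last line lacks one, so the
  # new entry is not glued onto the previous line.
  if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
    echo >> "$file"
  fi

  printf '%s: %s\n' "$key" "$value" >> "$file"
}

# Usage on the control plane node (as root):
#   ensure_rke2_option /etc/rancher/rke2/config.yaml enable-servicelb true
#   systemctl restart rke2-server
```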

To control where the load balancer runs, add the svccontroller.rke2.cattle.io/enablelb=true label to the node(s) that should host the ServiceLB Pods. Once at least one node carries this label, ServiceLB switches to allow-list mode and schedules its svclb- Pods only on labeled nodes; without any labeled node, they run on all nodes. We will use the rke2-extlb-01 node as the load balancer machine.

In recent versions of Rancher, labels in the cattle.io domain are protected and cannot be modified through the Rancher UI. You must use kubectl directly to add these labels.

Use the following command to add the label to the node:

```shell
# SSH into the control plane node or use kubectl from your local machine
ssh root@$WORKLOAD_CONTROLPLANE_01_PUBLIC_IP

# Add the label to the external load balancer node
kubectl label node rke2-extlb-01 svccontroller.rke2.cattle.io/enablelb=true

# Verify the label was added
kubectl get node rke2-extlb-01 --show-labels | grep enablelb
```

Alternatively, if you prefer using the Rancher UI terminal:

- Click on ≡ (hamburger menu) in the top left.
- Select your workload cluster.
- Click on the kubectl Shell button (terminal icon) in the top right.
- Run the command:

```shell
kubectl label node rke2-extlb-01 svccontroller.rke2.cattle.io/enablelb=true
```
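To double-check the allow-list behavior described earlier, you can list each node together with its enablelb label value and apply the rule yourself. The helper below is a simplified, hypothetical model of that rule (it ignores taints, hostPort conflicts, and other scheduling constraints), and the kubectl custom-columns query in its comments is an assumption to adapt to your setup.

```shell
# Hypothetical helper: model which nodes are eligible for svclb- Pods.
# Reads "NODE ENABLELB" lines on stdin, where ENABLELB is the value of the
# svccontroller.rke2.cattle.io/enablelb label (or <none> if the label is absent).
# Simplified rule: if any node has enablelb=true, only those nodes qualify
# (allow-list mode); otherwise every node qualifies.
svclb_eligible_nodes() {
  awk '
    { name[NR] = $1; label[NR] = $2; if ($2 == "true") allowlist = 1 }
    END {
      for (i = 1; i <= NR; i++)
        if (!allowlist || label[i] == "true") print name[i]
    }'
}

# Usage (with a configured kubectl):
#   kubectl get nodes --no-headers \
#     -o custom-columns='NAME:.metadata.name,ENABLELB:.metadata.labels.svccontroller\.rke2\.cattle\.io/enablelb' \
#     | svclb_eligible_nodes
```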

End-to-End Kubernetes with Rancher, RKE2, K3s, Fleet, Longhorn, and NeuVector
