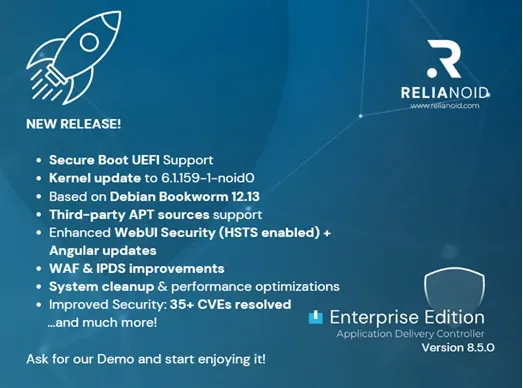

𝗥𝗘𝗟𝗜𝗔𝗡𝗢𝗜𝗗 𝟴.𝟱 𝗘𝗻𝘁𝗲𝗿𝗽𝗿𝗶𝘀𝗲 𝗘𝗱𝗶𝘁𝗶𝗼𝗻 𝗶𝘀 𝗻𝗼𝘄 𝗮𝘃𝗮𝗶𝗹𝗮𝗯𝗹𝗲!

This release represents a major step forward in 𝗽𝗹𝗮𝘁𝗳𝗼𝗿𝗺 𝘀𝗲𝗰𝘂𝗿𝗶𝘁𝘆, 𝘀𝘆𝘀𝘁𝗲𝗺 𝗶𝗻𝘁𝗲𝗴𝗿𝗶𝘁𝘆, 𝗮𝗻𝗱 𝗲𝗻𝘁𝗲𝗿𝗽𝗿𝗶𝘀𝗲 𝗿𝗲𝗹𝗶𝗮𝗯𝗶𝗹𝗶𝘁𝘆.

- 𝗨𝗘𝗙𝗜 𝗦𝗲𝗰𝘂𝗿𝗲 𝗕𝗼𝗼𝘁 𝘀𝘂𝗽𝗽𝗼𝗿𝘁 – cryptographic verification of the boot chain
- 𝗗𝗲𝗯𝗶𝗮𝗻 𝗕𝗼𝗼𝗸𝘄𝗼𝗿𝗺 𝟭𝟮.𝟭𝟯 𝗯𝗮𝘀𝗲 + 𝗞𝗲𝗿𝗻𝗲𝗹 𝟲.𝟭.𝟭𝟱𝟵-𝟭-𝗻𝗼𝗶𝗱𝟬
- 𝗛𝗦𝗧𝗦..