How to Benchmark Python Code?

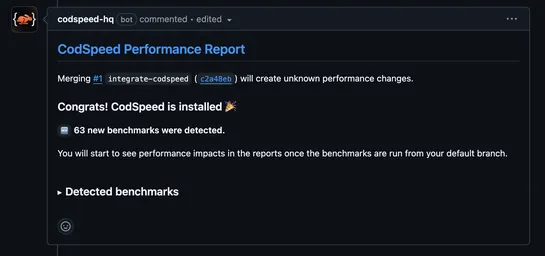

pytest-benchmark now plugs straight into CodSpeed for automatic performance runs in CI - flamegraphs, metrics, and history included. Just toss a decorator on your test and it turns into a benchmark. Want to measure a slice of code more precisely? Use fixtures to zoom in...
