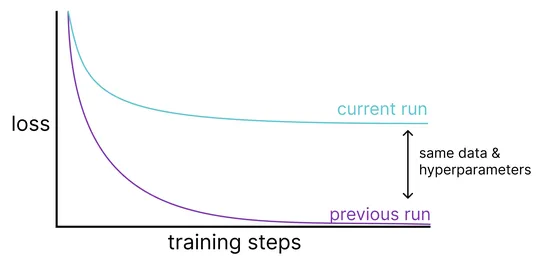

The bug that taught me more about PyTorch than years of using it

A sneaky bug in PyTorch’s MPS backend let non-contiguous tensors silently ignore in-place ops like `addcmul_`. That’s optimizer-breaking stuff. The culprit? The `Placeholder` abstraction, meant to handle temporary buffers under the hood, forgot to actually write results back to the original tensor.
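To make the failure mode concrete, here is a toy, pure-Python model of the pattern: gather a non-contiguous view into a temporary dense buffer, run the op on that buffer, then scatter the result back. This is a sketch under stated assumptions, not PyTorch's code. `StridedView`, this `addcmul_` helper and its `write_back` flag are hypothetical names for illustration. The view here is one column of a row-major 2×3 matrix (stride 3). Turning off the scatter step reproduces the silent no-op.

```python
# Toy model (hypothetical, NOT PyTorch's implementation) of the
# copy-in / compute / copy-out pattern a temp-buffer placeholder needs.

class StridedView:
    """A 1-D view into flat storage with an offset and stride, like a tensor column."""

    def __init__(self, storage, offset, stride, size):
        self.storage = storage
        self.offset = offset
        self.stride = stride
        self.size = size

    def is_contiguous(self):
        return self.stride == 1

    def to_list(self):
        # Gather the view's elements into a fresh dense list.
        return [self.storage[self.offset + i * self.stride] for i in range(self.size)]

    def write_from(self, values):
        # Scatter dense values back into the strided positions.
        for i, v in enumerate(values):
            self.storage[self.offset + i * self.stride] = v


def addcmul_(out, t1, t2, value=1.0, write_back=True):
    """In-place out += value * t1 * t2 (the same math as torch's addcmul_)."""
    if out.is_contiguous():
        # Dense case: the kernel can write straight into the view's own memory.
        for i in range(out.size):
            out.storage[out.offset + i] += value * t1[i] * t2[i]
        return out
    # Strided case: compute on a temporary dense buffer (the "placeholder").
    tmp = out.to_list()
    for i in range(out.size):
        tmp[i] += value * t1[i] * t2[i]
    # Copy the result back; skipping this step is the silent-no-op bug.
    if write_back:
        out.write_from(tmp)
    return out


# A 2x3 row-major matrix; its first column is a non-contiguous view.
storage = [0.0] * 6
col = StridedView(storage, offset=0, stride=3, size=2)

addcmul_(col, [1.0, 2.0], [3.0, 4.0], write_back=False)
print(col.to_list())  # [0.0, 0.0]: the update was computed, then lost
addcmul_(col, [1.0, 2.0], [3.0, 4.0])
print(col.to_list())  # [3.0, 8.0]
```

The contiguous path never touches a temporary, which is why a bug like this can hide: tests that only use freshly allocated (contiguous) tensors pass, and only views such as transposes or column slices lose their updates.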