How to build highly available Kubernetes applications with Amazon EKS Auto Mode

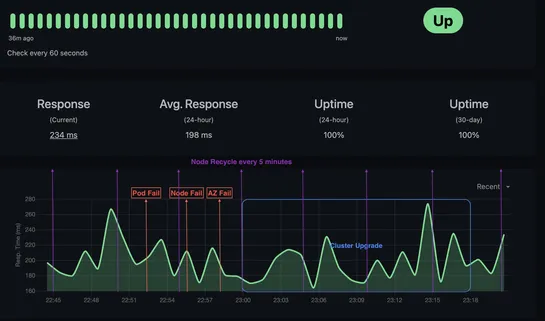

Amazon EKS Auto Mode now runs the cluster for you, handling control plane updates, add-on management, and node rotation. It sticks to Kubernetes best practices so your apps stay up through node drains, pod failures, Availability Zone (AZ) outages, and rolling upgrades. It also respects Pod Disruption Budgets, Readiness Ga..
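
To make the Pod Disruption Budget point concrete, here is a minimal sketch of a budget that a node drain would have to honor. It only builds a `policy/v1` `PodDisruptionBudget` manifest as JSON, which `kubectl apply -f` accepts. The helper `build_pdb`, the name `web-pdb`, the `app: web` label, and the value of 2 are illustrative assumptions, not taken from the article.

```python
import json


def build_pdb(name, app_label, min_available):
    """Return a policy/v1 PodDisruptionBudget manifest as a plain dict.

    All argument values are hypothetical; adjust them to your workload.
    """
    return {
        "apiVersion": "policy/v1",
        "kind": "PodDisruptionBudget",
        "metadata": {"name": name},
        "spec": {
            # Voluntary evictions (such as node drains) are refused if they
            # would leave fewer than this many matching pods available.
            "minAvailable": min_available,
            "selector": {"matchLabels": {"app": app_label}},
        },
    }


if __name__ == "__main__":
    # Print the manifest as JSON so it can be saved to a file and applied.
    print(json.dumps(build_pdb("web-pdb", "web", 2), indent=2))
```

With a budget like this in place, a Deployment labeled `app: web` should run more than two replicas; otherwise no pod can be evicted voluntarily and drains will stall.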