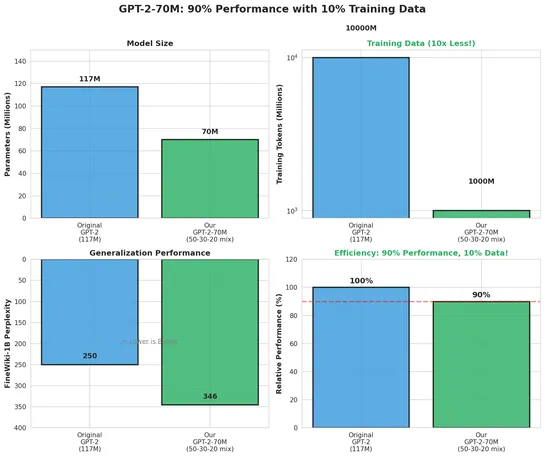

The 1 Billion Token Challenge: Finding the Perfect Pre-training Mix

Researchers squeezed GPT-2-class performance out of a model trained on just 1 billion tokens (10× less data) by carefully tuning the dataset mix: 50% FinePDFs, 30% DCLM-baseline, 20% FineWeb-Edu. Static mixing beat curriculum strategies. No catastrophic forgetting. No overfitting. And it hit 90%+ of GPT-..
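To make the mix concrete: at 1 billion tokens, the 50/30/20 split works out to 500M tokens from FinePDFs, 300M from DCLM-baseline and 200M from FineWeb-Edu. The sketch below shows that arithmetic plus one simple way to implement *static* mixing, where each batch's source is drawn with the same fixed weights for the whole run. It is a minimal illustration under stated assumptions: the function names, the per-batch sampling scheme and the source keys are hypothetical and are not taken from the researchers' pipeline.

```python
import random
from collections import Counter

# Reported mixture weights. The dictionary keys are hypothetical labels, not dataset IDs.
MIX = {"finepdfs": 0.5, "dclm_baseline": 0.3, "fineweb_edu": 0.2}
TOTAL_TOKENS = 1_000_000_000


def token_budgets(mix, total):
    """Split a total token budget across sources in proportion to fixed weights."""
    assert abs(sum(mix.values()) - 1.0) < 1e-9, "weights must sum to 1"
    return {name: int(round(weight * total)) for name, weight in mix.items()}


def static_source_schedule(mix, num_batches, seed=0):
    """Assign a source to each batch using the same weights for every step.

    This is static mixing. A curriculum would instead change the weights
    as training progresses (assumption: per-batch sampling is used here
    purely for illustration).
    """
    rng = random.Random(seed)
    names = list(mix)
    weights = [mix[name] for name in names]
    return rng.choices(names, weights=weights, k=num_batches)


if __name__ == "__main__":
    print(token_budgets(MIX, TOTAL_TOKENS))
    # {'finepdfs': 500000000, 'dclm_baseline': 300000000, 'fineweb_edu': 200000000}

    schedule = static_source_schedule(MIX, 10_000, seed=42)
    counts = Counter(schedule)
    for name in MIX:
        print(name, round(counts[name] / len(schedule), 3))
```

Sampling per batch keeps every stage of training exposed to all three sources in the target proportions. A curriculum variant would replace the constant `weights` list with a function of the training step.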