You Should Write An Agent

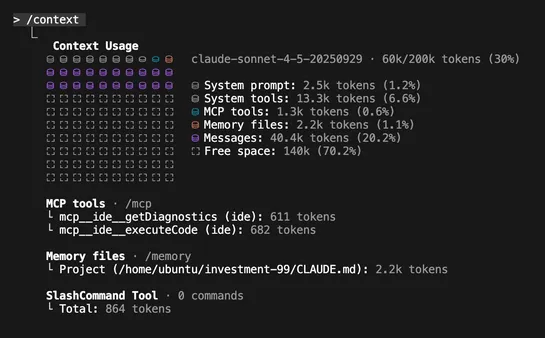

Building LLM agents - essentially looping stateless models through tools - looks simple. Until it isn't. Peel back the layers, and you hit real architectural puzzles: context engineering, agent loops, sub-agent choreography, execution constraints...
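
To make "looping stateless models through tools" concrete, here is a minimal sketch of that loop. Everything in it is an assumption for illustration: `fake_model` stands in for a real LLM call, `TOOLS` is a hypothetical one-entry tool registry, and the message format is invented rather than taken from any particular API. The point is that the model keeps no state; the loop owns the context and re-sends it on every step.

```python
from typing import Callable

# Hypothetical tool registry: tool name -> function taking a string argument.
TOOLS: dict[str, Callable[[str], str]] = {
    "add": lambda arg: str(sum(int(x) for x in arg.split())),
}


def fake_model(context: list[dict]) -> dict:
    """Stand-in for a stateless LLM call: it sees only the context passed in."""
    last = context[-1]
    if last["role"] == "user":
        # Pretend the model decides it needs a tool.
        return {"role": "assistant", "tool": "add", "arg": "2 3"}
    # A tool result is the latest message, so answer with it.
    return {"role": "assistant", "content": f"The answer is {last['content']}."}


def run_agent(prompt: str, model=fake_model, max_steps: int = 5) -> str:
    # The loop, not the model, holds the conversation state.
    context = [{"role": "user", "content": prompt}]
    for _ in range(max_steps):
        reply = model(context)
        context.append(reply)
        if "tool" not in reply:
            return reply["content"]  # no tool requested: final answer
        # Run the requested tool and feed the result back into the context.
        result = TOOLS[reply["tool"]](reply["arg"])
        context.append({"role": "tool", "content": result})
    raise RuntimeError("agent did not finish within max_steps")


print(run_agent("What is 2 + 3?"))
```

The `max_steps` cap is one simple form of execution constraint; context engineering amounts to deciding what goes into `context` before each call.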