Why GPUs accelerate AI learning: The power of parallel math

Modern AI eats GPUs for breakfast - training, inference, all of it. Matrix ops? Parallel everything. Models like LLaMA don’t blink without a gang of H100s working overtime...
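To make the "parallel everything" point concrete, here is a minimal sketch in plain Python. It is not taken from the linked post: the function names `cell` and `matmul_parallel` are made up for illustration, and the thread pool only stands in for GPU cores. The idea it shows is that every output cell of a matrix product depends on one row and one column and on no other output cell, so all cells can be computed at the same time.

```python
from concurrent.futures import ThreadPoolExecutor


def cell(a, b, i, j):
    """Dot product of row i of a with column j of b.

    It reads no other output cell, which is what makes the work parallel.
    """
    return sum(a[i][k] * b[k][j] for k in range(len(b)))


def matmul_parallel(a, b, workers=4):
    """Compute the matrix product a @ b, one independent task per output cell.

    Illustration only: CPython threads will not speed this up. On a GPU,
    each cell (or tile of cells) would map to its own hardware thread.
    """
    rows, cols = len(a), len(b[0])
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [
            [pool.submit(cell, a, b, i, j) for j in range(cols)]
            for i in range(rows)
        ]
        return [[f.result() for f in row] for row in futures]


if __name__ == "__main__":
    a = [[1, 2], [3, 4]]
    b = [[5, 6], [7, 8]]
    print(matmul_parallel(a, b))  # [[19, 22], [43, 50]]
```

Because no task waits on another, the same structure scales from four threads to the thousands of cores on a GPU; only the scheduling changes.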

Senior engineers are starting to spin up parallel AI coding agents - think Claude Code, Cursor, and the like - to run tasks side by side. One agent sketches boilerplate. Another tackles tests. A third refactors old junk. All at once. Is it "multitasking on steroids"? Not just this as it messes with ho..

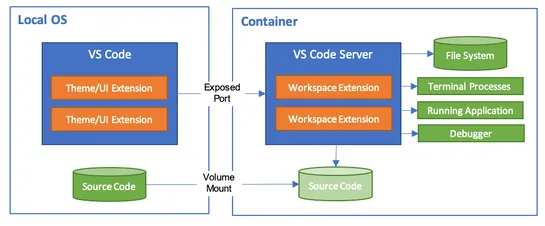

Agentic LLM apps come with a glaring security flaw: they can't tell the difference between data and code. That blind spot opens the door to prompt injection and similar attacks. The fix? Treat them like they're radioactive. Run sensitive tasks in containers. Break up agent workflows so they never ju..

AWS’s us-east-1 faceplanted for 14 hours after a race condition in DynamoDB kicked off a DNS meltdown, taking down 140 services. EC2 buckled under a congestive collapse, overwhelmed by a backup in DropletWorkflow Manager queues. Meanwhile, NLB health checks kept firing blanks - tricked by stale network s..

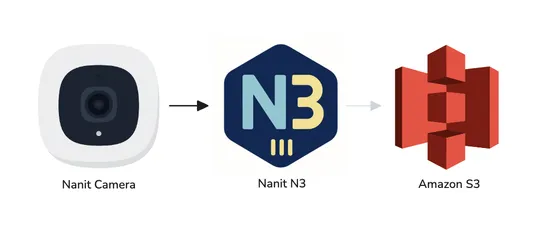

Nanit ditched S3’s PutObject-heavy ingest path and built a custom Rust-based in-memory landing zone (N3). It cut ~$500K/year in storage ops. N3 grabs short-lived video chunks straight into RAM and only spills to S3 when it has to. Ordering stays tight thanks to SQS FIFO, and fallback kicks in clean wh..

A plain-old git repo on an SSH-accessible server can double as a lean deployment rig. Drop in some git hooks - like a post-receive - and every push can kick off static site builds or publish code on the spot. No extra tools. Just Git doing Git things. Turns basic Git infra into a no-frills CI/CD pipeline...
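As a rough sketch of what such a post-receive hook could look like, here is one written in Python. It is not taken from the linked post. It relies on the documented behavior that Git feeds the hook one `<old> <new> <ref>` line per updated ref on stdin. The branch name, the `/var/www/site` path, and the helper names `pushed_refs` and `should_deploy` are assumptions made up for this example.

```python
#!/usr/bin/env python3
"""Sketch of a post-receive hook (save as hooks/post-receive, mark executable)."""
import subprocess
import sys

DEPLOY_REF = "refs/heads/main"  # assumption: deploy only when main is pushed
WORK_TREE = "/var/www/site"     # hypothetical checkout target on the server


def pushed_refs(lines):
    """Parse the '<old> <new> <ref>' lines Git writes to the hook's stdin."""
    refs = []
    for line in lines:
        parts = line.split()
        if len(parts) == 3:
            refs.append(tuple(parts))
    return refs


def should_deploy(refs, deploy_ref=DEPLOY_REF):
    """True if deploy_ref was updated; an all-zero new value means deletion."""
    return any(
        ref == deploy_ref and set(new) != {"0"}
        for _old, new, ref in refs
    )


def main():
    if should_deploy(pushed_refs(sys.stdin)):
        branch = DEPLOY_REF.split("/", 2)[2]  # "refs/heads/main" -> "main"
        # Check the pushed branch out into the live directory.
        subprocess.run(
            ["git", f"--work-tree={WORK_TREE}", "checkout", "-f", branch],
            check=True,
        )


if __name__ == "__main__":
    main()
```

A static site build step could follow the checkout inside `main()`. Keeping the parsing in small pure functions means the deploy decision can be checked without pushing to a real repository.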

AWS has launched Project Rainier, a massive AI compute cluster with nearly half a million Trainium2 chips, in collaboration with Anthropic to advance AI infrastructure and model development.

Amazon apologized for a major AWS outage in the Northern Virginia region, caused by a race condition in the DynamoDB DNS management system, affecting services like DynamoDB, Network Load Balancer, and EC2.

In 2025, GitHub saw a surge in growth with AI advancements, as TypeScript overtook Python and JavaScript in popularity, fueled by the release of GitHub Copilot Free and a global developer expansion.

Hey there! 👋

I created FAUN.dev(), an effortless, straightforward way to stay updated with what's happening in the tech world.

We sift through mountains of blogs, tutorials, news, videos, and tools to bring you only the cream of the crop — so you can kick back and enjoy the best!