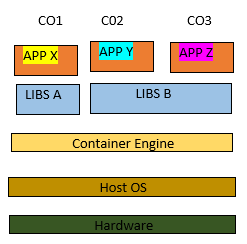

Containers are changing the way software is developed, distributed, and maintained, but what exactly are containers? Put simply, a container is an encapsulation of an application together with its dependencies. This removes much of the hassle of developing software: developers can build locally, knowing the software will run identically regardless of the host environment, which spares them the old saying “but it works on my system”. Operations engineers benefit too: because an application and its dependencies are packaged together, they can focus on uptime, networking, and resource management, and spend less time configuring environments and dealing with system dependencies.

Depending on how familiar you are with virtualization and virtual machines (VMs), you might be wondering whether I just gave a fancy name to virtual machines. At first glance, containers can be seen as lightweight versions of virtual machines since, like a VM, a container holds an isolated instance of an operating system on which we can run an application. However, because of the way they operate, discussed below, containers have an edge over VMs in certain use cases that are difficult or outright impossible to implement with VMs.

- Containers can be started and stopped like a process, i.e. in a fraction of a second. This is because containers share resources with the host, which makes them more efficient. Since a container does not boot up like an OS, applications running in containers incur little or no overhead compared to applications running natively on the host OS.

- Because containers are lightweight, developers can run dozens of them at the same time, which makes it possible to emulate a production-ready distributed system. The number of containers that can run on a single host machine exceeds the number of VMs that can run on that same host.

- End users who install containerized applications are spared the configuration and installation issues, or the changes to their systems, that would otherwise be required. Developers are also spared the headache of differences in user environments that might prevent a smooth experience.
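To get a feel for what "started and stopped like a process" means in the first point, here is a minimal sketch that times how long an ordinary process takes to start and exit. It does not use a container at all: the helper name `time_process_start` is made up for this illustration, and the child process is just the Python interpreter running an empty program.

```python
import subprocess
import sys
import time


def time_process_start(command):
    """Run `command` to completion and return (exit_code, elapsed_seconds).

    Hypothetical helper for illustration only; it measures a plain OS
    process, not a container.
    """
    start = time.perf_counter()
    result = subprocess.run(command, capture_output=True)
    elapsed = time.perf_counter() - start
    return result.returncode, elapsed


# Launch a trivial child process using the current Python interpreter.
exit_code, elapsed = time_process_start([sys.executable, "-c", "pass"])
print(f"exit code {exit_code}, started and finished in {elapsed:.3f} s")
```

If you have a container runtime installed, you could pass a container command to the same helper instead, for example `["docker", "run", "--rm", "alpine", "true"]` (assuming Docker is available and the `alpine` image has already been pulled), and compare the timing with how long a VM takes to boot.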

Referring to the first point above, the illustration below helps simplify a fundamental difference between containers and VMs.