Every once in a while, a paradigm shift comes along that changes how software development is done, or at least offers another approach to it. The “new” paradigm shift I will be talking about in this article is containers.

Containers are changing the way software is developed, distributed and maintained, but what exactly are containers? Put very simply, a container packages an application together with its dependencies. This takes away a lot of the hassle that comes with developing software: developers can build locally, knowing the application will run identically regardless of the host environment, which saves them from the age-old saying “but it works on my system”. Operations engineers benefit from this encapsulation too, because they can spend less time configuring environments and dealing with system dependencies and focus instead on uptime, networking and resource management.

Depending on how familiar you are with virtualization and virtual machines (VMs), you might be wondering whether I have just given a fancy name to virtual machines. At first glance, containers can be seen as lightweight versions of virtual machines since, like a VM, a container holds an isolated instance of an operating system on which we can run an application. However, because of the way they operate, discussed below, containers have an edge over VMs in certain use cases that are difficult or outright impossible to implement with VMs.

- Containers can be started and stopped like a process, i.e. in fractions of a second. This is because containers share resources with the host, which makes them more efficient, and because a container does not boot up like an OS. Applications running in containers incur little or no overhead compared to applications running natively on the host OS.

- Because containers are lightweight, developers can run dozens of them at the same time, which makes it possible to emulate a production-ready distributed system. The number of containers that can run on a single host machine exceeds the number of VMs that can run on that same host.

- End users who install containerized applications are spared the configuration and installation issues, or changes to their systems, that they would otherwise face. Developers are also spared the headache of differences in user environments that might prevent a smooth experience.
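The density claim in the second point can be sketched as a bit of back-of-the-envelope arithmetic. All the numbers below are hypothetical and chosen only for illustration (they are not measurements); the one assumption that matters is that every VM pays for a full guest OS while a container does not.

```python
def instances_that_fit(host_ram_mib: int, app_ram_mib: int, overhead_mib: int) -> int:
    """Return how many isolated app instances fit into the host's RAM."""
    return host_ram_mib // (app_ram_mib + overhead_mib)


# Hypothetical figures, for illustration only.
HOST_RAM_MIB = 16 * 1024       # a 16 GiB host
APP_RAM_MIB = 256              # memory the application itself needs
VM_OVERHEAD_MIB = 1024         # assumed cost of a full guest OS per VM
CONTAINER_OVERHEAD_MIB = 16    # assumed per-container bookkeeping cost

vm_count = instances_that_fit(HOST_RAM_MIB, APP_RAM_MIB, VM_OVERHEAD_MIB)
container_count = instances_that_fit(HOST_RAM_MIB, APP_RAM_MIB, CONTAINER_OVERHEAD_MIB)

print(f"VMs: {vm_count}, containers: {container_count}")
# prints "VMs: 12, containers: 60"
```

The exact ratio depends entirely on the assumed overheads, so treat the output as an illustration of the trend rather than a benchmark.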

Referring to the first point above, the illustrations below help simplify a fundamental difference between containers and VMs.

The first illustration shows three applications running in separate VMs on the same host. The hypervisor is the software that creates and runs the VMs. Each VM requires a full copy of the OS, the application being run and its dependencies.

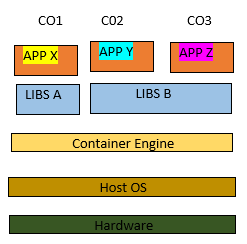

The second illustration is of a containerized system running three applications. Unlike VMs, containers share the host kernel, and by that design they are always constrained to running the same kernel as the host. Redundancy is also avoided here, as applications Y and Z use and share the same libraries. The container engine is responsible for starting and stopping containers, and the overhead associated with hypervisor execution is avoided since the processes running inside the containers are equivalent to native processes on the host.
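One simple way to see the shared kernel for yourself is to ask the operating system which kernel a process is running on. The sketch below uses only Python's standard library; `kernel_release` is just a helper name chosen for this example.

```python
import platform


def kernel_release() -> str:
    """Return the release string of the kernel this process is running on."""
    return platform.release()


print(kernel_release())
```

On a Linux host, running this script directly and then inside a container on that same host should print the same release string, because the container has no kernel of its own. Inside a VM it would report the guest's kernel instead. One caveat: Docker Desktop on macOS and Windows runs containers inside a Linux VM, so there you would see that VM's kernel rather than your machine's.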

Is the concept of containers actually new?

The simple answer is no, it is not. Containers are in fact an old concept used by UNIX systems and have been developed over the years by various companies. However, it was Docker that brought the final pieces to the containerization concept by wrapping the Linux container technology and extending it with portable images. The Docker platform has two distinct components:

- Docker Engine, which provides an easy and fast interface for running containers.

- Docker Hub, a cloud service for distributing container images. Docker Hub acts as a repository.

It is therefore safe to say that the work done by Docker as an organization went a long way in pushing containers into mainstream IT.

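The Docker philosophy is best understood through a real-world analogy: the shipping container. Just as a standardized shipping container can be moved by any ship, train or truck regardless of what is inside, a Docker container gives software a standard unit that runs the same way wherever it is deployed. The metaphor helps explain the Docker approach and presumably even the Docker name itself.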

Microservices and Monoliths

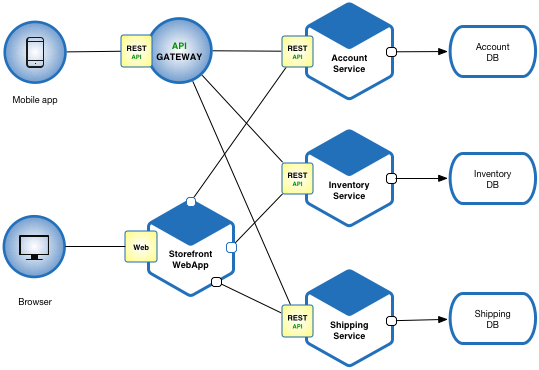

An introduction to containers would not be complete without talking about microservices and monoliths. There is always a reason behind the success of anything, in tech or outside it, and container technology is no different. One of the biggest use cases and strongest drivers behind the uptake of containers is microservices. Microservice architecture, or simply microservices, is a method of developing software systems that focuses on building single-function modules (each performing one specific duty) with well-defined interfaces and operations. These single-function modules are independent components that interact with one another over the network. This is in contrast to the traditional monolithic way of developing software, where there is a single large program written in a particular language.
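To make the idea of a single-function module with a well-defined interface a little more concrete, here is a minimal sketch using only Python's standard library. The price-lookup service, its `PRICES` table and the `fetch_price` helper are all made up for illustration. In practice such a service would typically run in its own container rather than in a background thread of the same script.

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class PriceHandler(BaseHTTPRequestHandler):
    """A hypothetical single-function service: it only answers price lookups."""

    PRICES = {"apple": 3, "pear": 4}  # made-up data for the example

    def do_GET(self):
        item = self.path.strip("/")
        body = json.dumps({"item": item, "price": self.PRICES.get(item)}).encode()
        self.send_response(200 if item in self.PRICES else 404)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # silence the default per-request logging


def fetch_price(host: str, port: int, item: str):
    """Client side: another component talks to the service over the network."""
    conn = http.client.HTTPConnection(host, port, timeout=5)
    try:
        conn.request("GET", f"/{item}")
        return json.loads(conn.getresponse().read())["price"]
    finally:
        conn.close()


# Port 0 asks the OS for any free port, so the sketch does not clash with other services.
server = HTTPServer(("127.0.0.1", 0), PriceHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()

price = fetch_price("127.0.0.1", server.server_port, "apple")
print(price)

server.shutdown()
server.server_close()
```

The caller only knows the interface (a GET request that returns JSON), not how the service is implemented, which is what allows each module to be developed, deployed and replaced independently.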

To deal with extra demand, the only option in a monolithic architecture is to “scale up”: the extra demand is handled by increasing RAM and CPU power. Microservices, on the other hand, are designed to “scale out”: extra demand is handled by provisioning multiple machines that the load can be spread over, i.e. load balancing. Since microservices are made up of independent components, it is possible to scale only the resources required for a particular service. In a monolith, due to its architecture, it is scale everything or nothing, resulting in wasted resources.
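Scaling out can be sketched as a toy round-robin load balancer. This is only an illustration of the idea under simple assumptions (every request costs the same, every replica is healthy), and the `checkout-*` replica names are hypothetical.

```python
from collections import Counter
from itertools import cycle


def spread_requests(replicas, request_count):
    """Assign requests to replicas in round-robin order and count the load on each."""
    rotation = cycle(replicas)
    return Counter(next(rotation) for _ in range(request_count))


# One instance of a hypothetical "checkout" service takes all nine requests...
one = spread_requests(["checkout-1"], 9)

# ...while scaling out to three replicas spreads the same load evenly.
three = spread_requests(["checkout-1", "checkout-2", "checkout-3"], 9)

print(one["checkout-1"])      # prints 9
print(max(three.values()))    # prints 3
```

Only the service under pressure gets extra replicas here; the other services in the system can stay at a single instance, which is the resource saving described above.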

There is, however, a snag in the microservices approach to developing software systems. Each independent component is easy to understand as a unit, but as a system of interacting units (when these components communicate with one another) the overall complexity increases.

The lightweight nature and speed of containers mean they are particularly well suited for running a microservice architecture: compared to VMs, they are smaller and quicker to deploy, allowing microservices to use the minimum of resources and react quickly to changes in demand.

The software development landscape is constantly changing, and containers as presented by Docker brought an all-inclusive approach to the table, with developers, sysops, sysadmins and others in mind. It was developed with a need for speed and consistency.

I will leave you with an article on PayPal’s container adoption, which I personally find quite interesting.

If you found this article informative, please share, comment, and like. Thank you.
