Can We Get Some Nomad Love Here?

Let’s face it — Kubernetes gets ALL the love. Although Nomad is a GREAT product, it has a much, much smaller user base. As a result, there are fewer tools geared toward supporting it.

One thing that is lacking in the Nomad ecosystem is tooling for managing Nomad deployments.

Believe me…I spent a looooong time Googling far and wide, using search terms like “GitOps for Nomad”, “Tool like ArgoCD for Nomad”, “CD tools for Nomad”, and so on. I stumbled upon a post in this discussion which points to a list of CI/CD tools that integrate with Nomad. Unfortunately, the tools listed there didn’t really fit the bill.

Having spent several months in late 2020/early 2021 neck-deep in Kubernetes and developing a deployment strategy around ArgoCD (I have a whole series of blog posts just on ArgoCD), I understand the importance of having a good deployment management (also known as Continuous Delivery or CD) tool for your container orchestrator. This is ESPECIALLY important when you’ve got an SRE team whose job includes overseeing the health of dozens and dozens of microservices deployed to your container orchestrator cluster(s) across multiple regions. IT. ADDS. UP. A deployment management tool like ArgoCD gives you that holistic system view, and a holistic way to manage your deployments.

At the end of the day, as far as I can tell, the only two big-ish deployment management tools for Nomad are:

- Spinnaker, an open-source tool which was developed at Netflix

- Waypoint, which is developed by HashiCorp

I was personally drawn to Waypoint because, as a HashiCorp product, it plays nice with other HashiCorp products. Most importantly, it can run natively on Nomad, as a Nomad job, just like ArgoCD runs natively on Kubernetes! Spinnaker, on the other hand, cannot run natively on Nomad.

Fun fact: Waypoint can also manage deployments to Kubernetes, and can run natively in Kubernetes. I think the Kubernetes features for Waypoint came out before the Nomad features.

Now, keep in mind that Waypoint is pretty new. It was first launched on October 15, 2020. The latest version of Waypoint at the time of this writing, v0.7.0, was released on January 13, 2022. That’s pretty fresh.

So, is Waypoint up to snuff? Well, let’s find out, as we explore the ins and outs of Waypoint!

Objective

In today’s tutorial, we will:

- Learn how to install Waypoint on Nomad using my favourite Hashi-in-a-box local dev environment, HashiQube.

- Learn how to use Waypoint to deploy a group of related apps to Nomad. This is very basic. I will not get into more advanced concepts like workspaces and triggers.

- Discuss Waypoint as a deployment management tool.

Assumptions

Before we move on, I am assuming that you have a basic understanding of:

- Nomad. If not, mosey on over to my Nomad intro post.

- HashiQube. It’s basically a virtualized environment that runs a bunch of Hashi tools together. I recommend that you mosey on over to my HashiQube post for more deeets.

Pre-Requisites

In order to run the example in this tutorial, you’ll need the following:

- Oracle VirtualBox (version 6.1.30 at the time of this writing)

- Vagrant (version 2.2.19 at the time of this writing)
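
If you’d like a quick sanity check that both tools are installed before moving on, here’s a minimal sketch in Python. It assumes the `VBoxManage` and `vagrant` executables are on your PATH (the usual command names for VirtualBox and Vagrant). The `tool_version` helper and the `TOOLS` table are hypothetical names used for illustration only; they are not part of HashiQube.

```python
import shutil
import subprocess

# Commands that print each tool's version.
# Assumption: both executables are reachable via PATH under these names.
TOOLS = {
    "VBoxManage": ["VBoxManage", "--version"],
    "vagrant": ["vagrant", "--version"],
}


def tool_version(command):
    """Return the tool's version output, or None if it is not on PATH."""
    if shutil.which(command[0]) is None:
        return None
    result = subprocess.run(command, capture_output=True, text=True, check=False)
    return result.stdout.strip() or None


if __name__ == "__main__":
    for name, command in TOOLS.items():
        version = tool_version(command)
        print(f"{name}: {version if version else 'not found on PATH'}")
```

If either tool shows up as not found, install it before booting the HashiQube VM. The versions listed above are simply the ones I used, so compare the printed output against them if something misbehaves.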

Tutorial Repo

I will be using a Modified HashiQube Repo (fork of servian/hashiqube) for today’s tutorial.

Waypoint Installation Overview

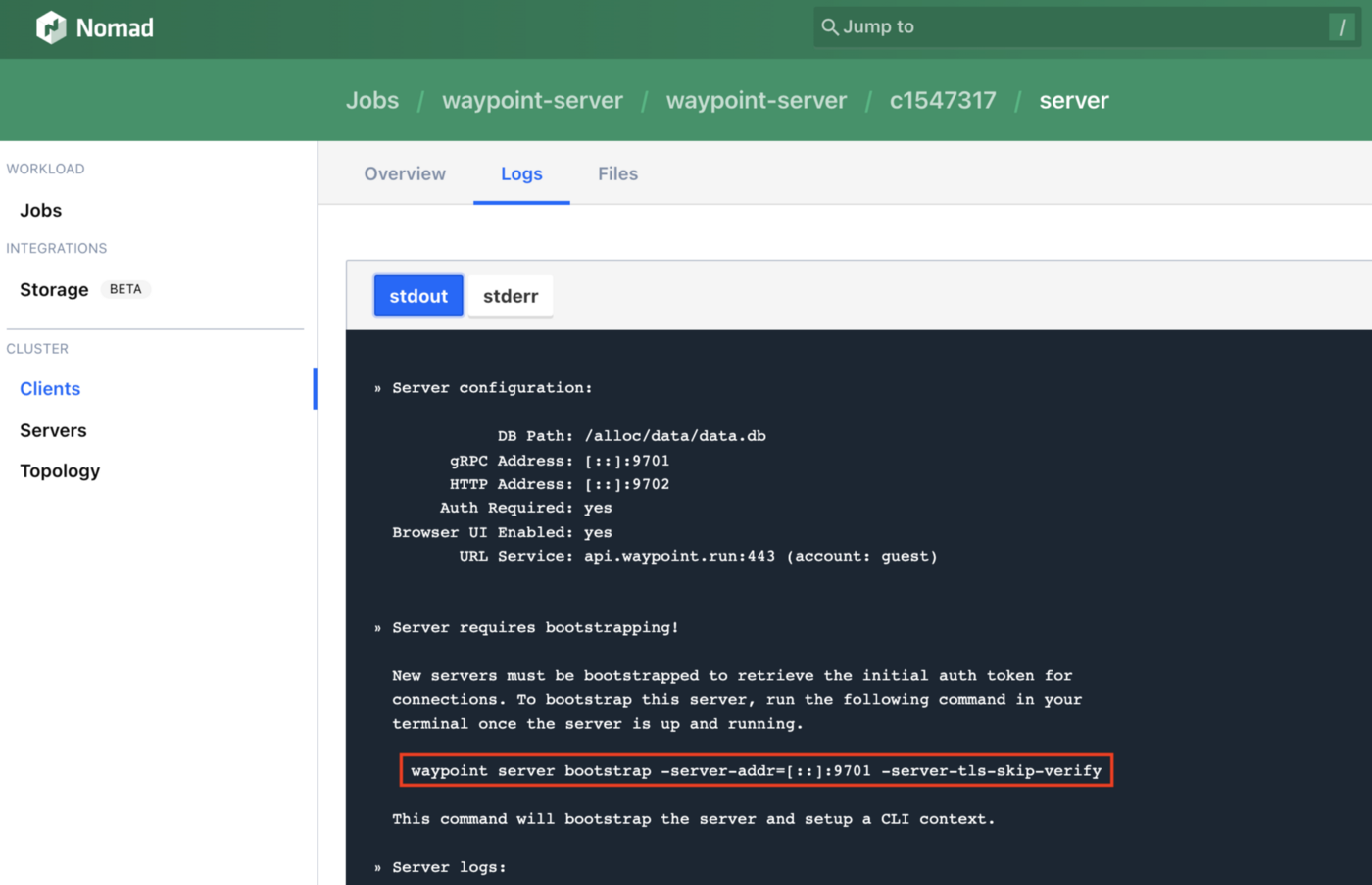

In this section, I’ll explain how to install Waypoint. Don’t worry about trying these steps just yet, because in the next section, Running the Waypoint Example on HashiQube, you’ll get to do it as I run through the steps of booting up your HashiQube VM and deploying your app bundle to Nomad using Waypoint.

During the Vagrant VM provisioning process on HashiQube, Waypoint is installed by way of the waypoint.sh file.