In my observation, most software projects report a 100% pass ratio nearly all the time. I think this is not realistic, and we are fooling ourselves. There are a few possible reasons for this:

- the project is small, so achieving 100% is not that hard

- many failing or problematic tests are marked as skipped, so they are never executed

- there are few tests or none at all, i.e. test coverage is practically non-existent
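To make the effect of skipping concrete, here is a minimal Python sketch of a pass ratio computed over executed tests only. The status names and the numbers are hypothetical; the point is only that marking the same ten failing tests as skipped turns a 90% run into a "perfect" one, and that a run with no tests has no meaningful ratio at all.

```python
from collections import Counter

# Hypothetical status labels; real CI systems may use different names.
EXECUTED = ("passed", "failed", "error")

def pass_ratio(statuses):
    """Percentage of executed tests that passed; skipped tests never run, so they are left out."""
    counts = Counter(statuses)
    executed = sum(counts[s] for s in EXECUTED)
    if executed == 0:
        return None  # nothing ran, so there is no meaningful ratio
    return 100.0 * counts["passed"] / executed

honest = ["passed"] * 90 + ["failed"] * 10
hidden = ["passed"] * 90 + ["skipped"] * 10  # same suite, failing tests marked as skipped

print(pass_ratio(honest))  # 90.0
print(pass_ratio(hidden))  # 100.0
print(pass_ratio([]))      # None
```

Reporting the number of skipped tests next to the ratio is one simple way to make this kind of hidden failure visible again.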

In general, it is practically impossible to sustain a 100% pass ratio in larger projects. A look at any bug database reveals plenty of open issues; if there were tests that exposed them, those tests would be failing. Part of the reason may be poor tooling for tracking large numbers of tests and their results. This is an area where Kraken CI can help.

Intro

ISTQB defines several objectives of testing. Two particularly important ones are:

- To find defects and failures, thus reducing the level of risk of inadequate software quality.

- To provide sufficient information to stakeholders to allow them to make informed decisions, especially regarding the level of quality of the test object.

At first glance, these goals do not seem hard to meet, and in small projects they are indeed fairly easy to achieve: a few tests and a few code changes do not generate much analysis work.

In bigger projects, however, testing may run several times a day, the test content may contain thousands or tens of thousands of test cases, and the number of combinations of environments (such as operating systems and hardware types) and configurations is high. There, tracking and monitoring test results becomes really difficult.

Meeting these goals requires proper tools that automatically do much of this analytical work. Basic test result reporting is not enough; more sophisticated analysis is required. The following sections present the tools and features in Kraken CI that help in this area.
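A quick back-of-the-envelope calculation shows how fast the volume grows. The sizes below are purely hypothetical, chosen only to illustrate that every dimension multiplies the number of results someone has to look at.

```python
# Hypothetical sizes, chosen only to illustrate how results multiply.
test_cases = 10_000
operating_systems = 3
hardware_types = 2
configurations = 4
runs_per_day = 3

# Every test case runs in every environment/configuration combination on every run.
results_per_day = (
    test_cases * operating_systems * hardware_types * configurations * runs_per_day
)
print(f"{results_per_day:,} test results per day")  # 720,000 test results per day
```

Even a 1% failure rate at that scale would mean thousands of failed results per day, far too many to compare by hand.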

Result Changes in the Last Flow

The basic approach to testing is observing results. The results include passes, failures, errors and sometimes other statuses. Looking at failures and errors reveals problems, which lead to debugging, root-causing, defect submission and, eventually, fixing.

Following the results of consecutive test sessions for consecutive product builds is harder. Again there are failures, but which of them were already present in the previous build, and which are new regressions? In small projects with a handful of tests, it is easy to go back to the previous results, compare them and manually find out what has changed. With tens or hundreds of failures, a dedicated feature is needed to spot regressions and fixes relative to the previous build.

New button

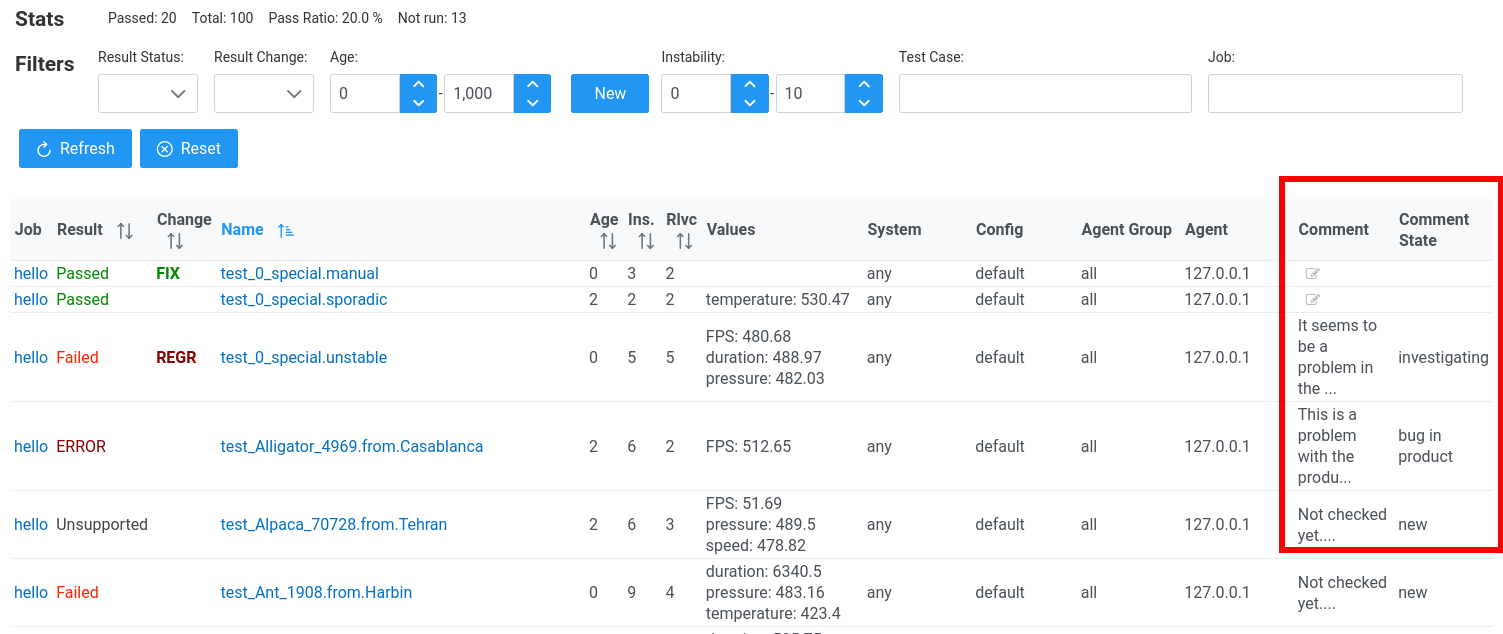

In Kraken CI, the table of test case results can be filtered right away to show only the results that are regressions or fixes. For this purpose, the New button shows only the test cases whose status changed in the current build compared to the previous one, i.e. the "new", interesting results that require attention.
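The idea behind such a filter can be sketched in a few lines of Python. This is not Kraken CI's implementation: the result format (a mapping from test case name to a status string) and the function name `classify_changes` are hypothetical. The sketch compares each test case's status in the current build with the previous one, keeps only those that changed, and splits them into regressions (previously passing, now not) and fixes (now passing). Test cases absent from the previous build are listed separately, which is an assumption about how one might treat them.

```python
# Hypothetical result format: test case name -> status for one build.
Results = dict[str, str]

def classify_changes(previous: Results, current: Results) -> dict[str, list[str]]:
    """Group test cases whose status differs between the previous and current build."""
    changes = {"regressions": [], "fixes": [], "other": [], "added": []}
    for name, status in sorted(current.items()):
        before = previous.get(name)
        if before is None:
            changes["added"].append(name)  # no result in the previous build
        elif before == status:
            continue  # unchanged status: hidden by a "new results only" filter
        elif before == "passed":
            changes["regressions"].append(name)
        elif status == "passed":
            changes["fixes"].append(name)
        else:
            changes["other"].append(name)  # e.g. failed -> error

    return changes

previous = {"test_login": "passed", "test_upload": "failed",
            "test_export": "failed", "test_search": "passed"}
current = {"test_login": "failed", "test_upload": "passed",
           "test_export": "failed", "test_search": "passed", "test_share": "error"}

print(classify_changes(previous, current))
# {'regressions': ['test_login'], 'fixes': ['test_upload'], 'other': [], 'added': ['test_share']}
```

Note that `test_export` fails in both builds and is therefore filtered out: it is a known failure, not a new one, which is exactly the distinction that is hard to make by hand across hundreds of failures.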