Going down the rabbit hole of Postgres 18 features by Tudor Golubenco

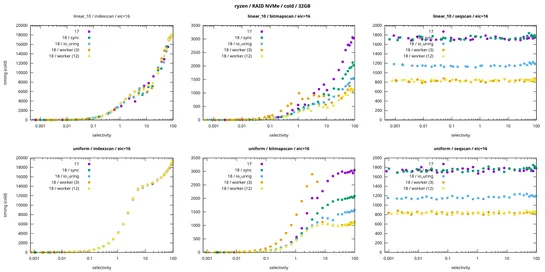

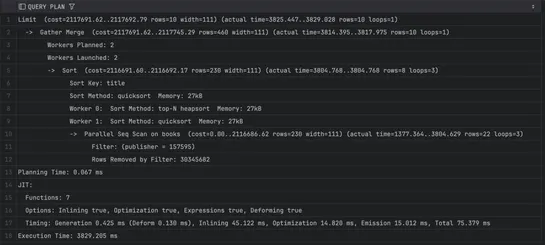

PostgreSQL 18 just hit stable. Big swing! Async I/O infrastructure is in. That means lower overhead, tighter storage control, and less CPU getting chewed up by I/O. Add direct I/O, and the database starts flexing beyond traditional bottlenecks. OAuth 2.0? Native now. No hacks needed. UUIDv7? Built-in su..