Google Releases Magika 1.0: AI File Detection in Rust

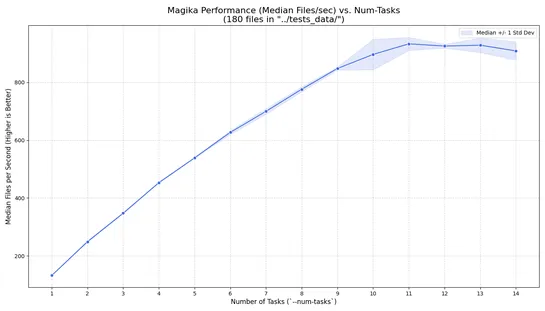

Google has released Magika 1.0, its AI-powered file-type detection system, now rebuilt in Rust for improved performance and security.


