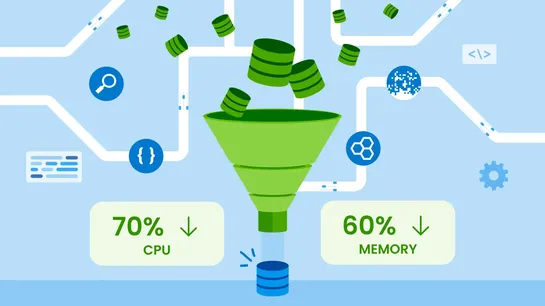

Kubernetes Optimization: In-Place Pod Resizing, Zone-Aware Routing

Halodoc cut EC2 costs and shaved latency by leaning into two Kubernetes tricks. In-place pod resizing (v1.33) lets them dial pod resources up or down on the fly, which is especially handy during off-peak hours. Zone-aware routing via topology-aware hints keeps inter-service traffic close to home (same AZ), skipp...
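To make the two techniques concrete, here is a minimal sketch of the patch bodies involved. It is not Halodoc's configuration: the container name `app` and the CPU value are hypothetical, and the helper functions are invented for illustration. It assumes a cluster where in-place resize is served through the pod `resize` subresource (as in v1.33) and where zone-aware routing is enabled with the `service.kubernetes.io/topology-mode` annotation (older clusters used `service.kubernetes.io/topology-aware-hints` instead).

```python
import json


def resize_patch(container: str, cpu: str) -> dict:
    """Build a patch body for a pod's `resize` subresource.

    Only CPU is changed here; `container` and `cpu` are illustrative
    values, not taken from the original article.
    """
    return {
        "spec": {
            "containers": [
                {
                    "name": container,
                    "resources": {
                        "requests": {"cpu": cpu},
                        "limits": {"cpu": cpu},
                    },
                }
            ]
        }
    }


def zone_aware_service_patch() -> dict:
    """Build a patch that asks Kubernetes to prefer same-zone endpoints.

    Assumes the `topology-mode` annotation (Kubernetes 1.27+).
    """
    return {
        "metadata": {
            "annotations": {"service.kubernetes.io/topology-mode": "Auto"}
        }
    }


if __name__ == "__main__":
    # Scale a hypothetical container down for off-peak hours.
    print(json.dumps(resize_patch("app", "250m")))
    print(json.dumps(zone_aware_service_patch()))
```

With a recent `kubectl`, the first body could be applied with something like `kubectl patch pod <pod-name> --subresource resize --patch '<json>'`, and the second with `kubectl patch service <service-name> --patch '<json>'`. Whether a resize happens without a container restart depends on each container's `resizePolicy`.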