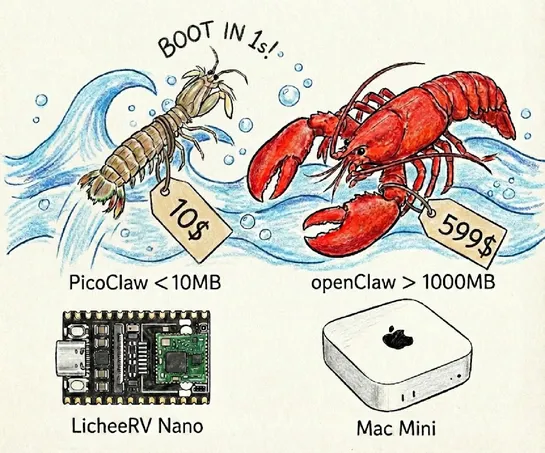

OpenClaw Lightweight Alternative Launches: A 10MB AI Assistant That Runs on $10 Hardware

Sipeed has released PicoClaw, an open-source AI assistant written in Go that runs in under 10MB of RAM and boots in about one second. Positioned as a micro alternative to OpenClaw, it uses 99% less memory. Designed for low-cost Linux boards starting around $10, it supports multiple LLM providers, chat platform integrations, and automation workflows. The project is MIT-licensed and available on GitHub.