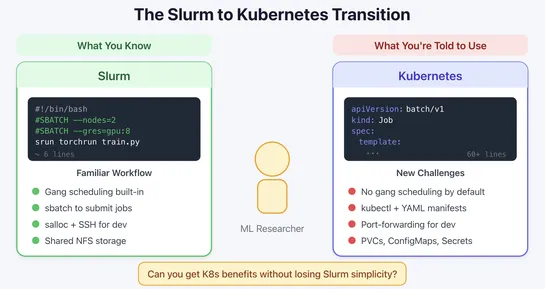

Migrating from Slurm to Kubernetes

SkyPilot provides a clean interface that blends Slurm with Kubernetes. AI/ML teams keep their Slurm-style comforts - job scripts, gang scheduling, GPU guarantees, interactive workflows - while picking up Kubernetes perks like container isolation and rich ecosystem integrations. It handles the messy bits: pods, ...